SPECstorage™ Solution 2020_eda_blended Result

Copyright © 2016-2022 Standard Performance Evaluation Corporation

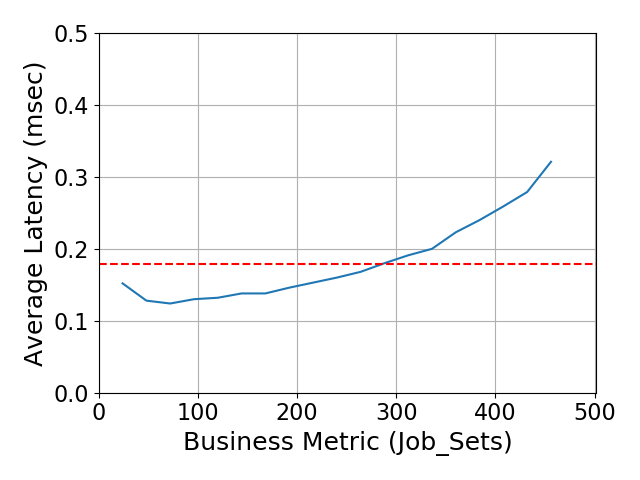

| Nettrix | SPECstorage Solution 2020_eda_blended = 456 Job_Sets |

|---|---|

| R620 G40 with 24 NVMe Storage Server | Overall Response Time = 0.18 msec |

| R620 G40 with 24 NVMe Storage Server | |
|---|---|
| Tested by | Nettrix |
| Hardware Available | May 2022 |
| Software Available | May 2022 |
| Date Tested | Jun 2022 |
| License Number | 6138 |
| Licensee Locations | Beijing, China |

The R620 G40 makes full use of computing, storage, and network resources in a limited space. It can flexibly allocate resources according to business needs to achieve excellent cost performance and energy consumption ratio. It is widely applicable to various industries such as the internet, finance, communication, and transportation to meet the needs of different business models.

| Item No | Qty | Type | Vendor | Model/Name | Description |

|---|---|---|---|---|---|

| 1 | 1 | Storage Server | Nettrix | R620 G40 | The R620 G40 with 24 NVMe Storage Server is a dual-socket system with 2 x Intel(R) Xeon(R) Platinum 8380 CPU @ 2.30GHz and 32 x 32GB memory. It holds 1 x 1.6TB SAS 4.0 SSD for the OS and 24 x 7.68TB PCIe Gen4 NVMe SSD, and serves I/O from 24 filesystems. It runs RHEL 8.3 from the SAS 4.0 SSD and uses NFSv4 over 1 x 100GbE Ethernet network. |

| 2 | 1 | Client Server | Nettrix | R620 G40 | The client server is an R620 G40 dual-socket system with 2 x Intel(R) Xeon(R) Platinum 8380 CPU @ 2.30GHz and 32 x 32GB memory. It holds 1 x 1.92TB SATA SSD for the OS and 6 x 1.6TB SAS 4.0 SSD for storage. It runs ESXi 7.0.2 from the SATA SSD and uses NFSv4 over 1 x 100GbE Ethernet network. It hosts 8 virtual machines; each VM client has 16 vCPUs, 120GB memory, 300GB storage, and a 100GbE network port provided by the SR-IOV function. |

| 3 | 1 | Disk | PHISON | ESM1720-1920G | The operating system of the client server is installed on a SATA SSD (ESM1720-1920G). The capacity of the SATA SSD is 1.92TB. |

| 4 | 7 | Disk | KIOXIA | KPM61VUG1T60 | There are a total of 7 SAS 4.0 SSD disks (KPM61VUG1T60), each with a capacity of 1.6TB. 1 x 1.6TB SAS 4.0 SSD disk is installed in the storage server for the OS. 6 x 1.6TB SAS 4.0 SSD disks are installed in the client server for storage. |

| 5 | 24 | Disk | Intel | SSDPF2KX076TZ | There are a total of 24 PCIe Gen4 NVMe SSD disks (SSDPF2KX076TZ) installed in storage server. The capacity of a PCIe Gen4 NVMe SSD disk is 7.68TB. |

| 6 | 2 | Ethernet Card | NVIDIA(Mellanox) | ConnectX-6 Dx 100GbE Dual-port QSFP56 | ConnectX-6 Dx EN adapter card, 100GbE, OCP3.0, With Host management, Dual-port QSFP56, No Crypto, Thumbscrew (Pull Tab) Bracket (Part Number MCX623436AN-CDAB). One card is installed in the storage server and the other in the client server. |

| 7 | 2 | Ethernet Card | BITLAND | I350 1G RJ45 4-Ports PCIe NIC | Four-port 10/100/1000 copper NIC with RJ45 connectors (Part Number EGI4-I350-US), installed for management. One card is installed in the storage server and the other in the client server. |

| Item No | Component | Type | Name and Version | Description |

|---|---|---|---|---|

| 1 | Storage Server | Linux | RHEL 8.3 | Storage Server Operating System. |

| 2 | Client Server | ESXi Server | ESXi 7.0.2 | The client OS version is VMware ESXi 7.0.2 build-17630552. |

| 3 | VM Clients | Linux | RHEL 8.3 | The client server is configured with 8 VM clients that are running RHEL 8.3 OS. |

| Component | Parameter Name | Value | Description |
|---|---|---|---|
| Storage Server | MTU | 9000 | Network Jumbo Frames. |
| Client Server | Hyper-Threading [ALL] | Enabled | Hyper-Threading [ALL] is enabled, which doubles the client server's 80 physical cores to 160 logical cores. |
| Client Server | SR-IOV | Enabled | The network card's SR-IOV function is enabled. |
| VM Clients | MTU | 9000 | Network Jumbo Frames. |

The 100GbE Ethernet ports of the System Under Test are set to MTU 9000.

| Component | Parameter Name | Value | Description |
|---|---|---|---|
| Client Server | vers | 4 | NFS mount option set to version 4. |
| Client Server | rsize, wsize | 1048576 | NFS mount option for the read and write buffer size. The read and write buffer size is changed in 8 VM clients. |

The NFS mount options for the read and write buffer size (rsize, wsize) are set to 1048576 bytes. Each of the 8 VM clients has a 100GbE Ethernet port provided by the SR-IOV function.
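As an illustration of the tuning above, the following minimal sketch assembles the option string and a `mount` command line from the documented values (`vers=4`, `rsize=wsize=1048576`). The server name, export path, and mount point are hypothetical placeholders, not values taken from this report, and the report does not show the exact command that was used.

```python
# Sketch only: builds an NFS mount command from the tuning values in the report.
# "storage-server", "/export/fs0" and "/mnt/fs0" are hypothetical placeholders.

def nfs_mount_options(vers: int = 4, rsize: int = 1048576, wsize: int = 1048576) -> str:
    """Return the comma-separated option string passed to `mount -o`."""
    return f"vers={vers},rsize={rsize},wsize={wsize}"


def mount_command(server: str, export: str, mountpoint: str, options: str) -> list[str]:
    """Return the argument vector for an NFS mount (not executed here)."""
    return ["mount", "-t", "nfs", "-o", options, f"{server}:{export}", mountpoint]


options = nfs_mount_options()
command = mount_command("storage-server", "/export/fs0", "/mnt/fs0", options)
print(" ".join(command))
```

The buffer size of 1048576 bytes is exactly 1 MiB (1024 x 1024).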

None.

| Item No | Description | Data Protection | Stable Storage | Qty |

|---|---|---|---|---|

| 1 | 1 x 1.6TB SAS 4.0 SSD disk is installed in the storage server for the OS. 6 x 1.6TB SAS 4.0 SSD disks are installed in the client server for VM storage. | None | Yes | 7 |

| 2 | 1 x 1.92TB SATA SSD disk is installed in the client server for VM OS. | None | Yes | 1 |

| 3 | 24 x 7.68TB PCIe Gen4 NVMe SSD disks for storage server I/O. | None | Yes | 24 |

| Number of Filesystems | 24 |
|---|---|
| Total Capacity | 184.32 TiB |
| Filesystem Type | xfs |

The filesystems are created with default options. Each filesystem is created on a single 7.68TB PCIe Gen4 NVMe SSD disk. The 24 PCIe Gen4 NVMe SSD disks in the storage server are connected directly to the PCIe bus with no intermediate controller.

The 184.32TiB capacity is equally allocated across all 24 filesystems.
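The capacity figure can be checked with a few lines of arithmetic. This sketch assumes the nominal 7.68TB drive capacity is given in decimal terabytes (10^12 bytes), which is an assumption about the drive labelling rather than something the report states. Under that assumption the 184.32 figure matches the decimal sum of the 24 drives, and the same byte count expressed in binary TiB (2^40 bytes) is smaller.

```python
# Sketch only: capacity arithmetic, assuming 7.68TB means 7.68 * 10**12 bytes per drive.
TB = 10**12   # decimal terabyte
TIB = 2**40   # binary tebibyte

DRIVE_COUNT = 24
DRIVE_BYTES = 7_680_000_000_000  # 7.68 TB, kept as an integer to avoid float rounding

total_bytes = DRIVE_COUNT * DRIVE_BYTES
total_tb = total_bytes / TB
total_tib = total_bytes / TIB
per_filesystem_tb = total_tb / DRIVE_COUNT  # one filesystem per drive

print(f"total: {total_tb:.2f} TB = {total_tib:.2f} TiB")
print(f"per filesystem: {per_filesystem_tb:.2f} TB")
```

Usable xfs capacity will be somewhat lower than the raw figure because of filesystem metadata.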

| Item No | Transport Type | Number of Ports Used | Notes |

|---|---|---|---|

| 1 | 100GbE network | 2 | One port of the dual-port 100GbE network card in the storage server is directly connected to one port of the dual-port 100GbE network card in the client server. |

None.

| Item No | Switch Name | Switch Type | Total Port Count | Used Port Count | Notes |

|---|---|---|---|---|---|

| 1 | N/A | N/A | N/A | N/A | N/A |

| Item No | Qty | Type | Location | Description | Processing Function |

|---|---|---|---|---|---|

| 1 | 2 | CPU | Storage Server | Intel(R) Xeon(R) Platinum 8380 CPU @ 2.30GHz 40C, Hyper-Threading [ALL] enabled (160 logical cores in total) | Storage Server |

| 2 | 2 | CPU | Client Server | Intel(R) Xeon(R) Platinum 8380 CPU @ 2.30GHz 40C, Hyper-Threading [ALL] enabled (160 logical cores in total) | Client Server |

The client server uses the same CPU model as the storage server, with Hyper-Threading [ALL] enabled. The client server hosts 8 VM clients, each with 16 vCPUs.
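The core counts quoted above follow from simple multiplication. The sketch below reproduces the 160 figure, assuming two hardware threads per core with Hyper-Threading enabled, and compares it with the vCPUs allocated to the VM clients. The variable names are illustrative only.

```python
# Sketch only: CPU accounting for the client server.
SOCKETS = 2
CORES_PER_SOCKET = 40      # "40C" in the CPU description
THREADS_PER_CORE = 2       # assumption: Hyper-Threading exposes 2 threads per core

VM_COUNT = 8
VCPUS_PER_VM = 16

physical_cores = SOCKETS * CORES_PER_SOCKET
logical_cores = physical_cores * THREADS_PER_CORE
allocated_vcpus = VM_COUNT * VCPUS_PER_VM

print(f"physical cores: {physical_cores}")
print(f"logical cores:  {logical_cores}")
print(f"vCPUs allocated to VMs: {allocated_vcpus}")
print(f"logical cores not assigned to VMs: {logical_cores - allocated_vcpus}")
```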

| Description | Size in GiB | Number of Instances | Nonvolatile | Total GiB |

|---|---|---|---|---|

| System memory in the storage server | 1024 | 1 | V | 1024 |

| System memory in the client server | 1024 | 1 | V | 1024 |

| Grand Total Memory Gibibytes | | | | 2048 |

None.

The storage server does not use a write cache, so writes are committed to disk immediately. Both the storage server and the client server are protected by redundant power supplies.

The client server hosts 8 VM clients. Each VM client has 16 vCPUs, 120GB memory, a 300GB disk, a 100GbE network port provided by the SR-IOV function, and a management port provided by the I350 1G RJ45 4-Ports PCIe NIC. In the storage server, the 24 PCIe Gen4 NVMe SSD disks are connected directly to the PCIe bus with no intermediate controller. There are 24 filesystems, one created on each NVMe disk. The storage server uses a 100GbE network port provided by the ConnectX-6 Dx 100GbE Dual-port QSFP56 card and a management port provided by the I350 1G RJ45 4-Ports PCIe NIC.
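The per-VM allocations can be totalled against the client server's resources as a sanity check. This sketch takes the figures from the report at face value and ignores the GB versus GiB distinction and any hypervisor overhead, both of which are simplifying assumptions. It also assumes the VM disks reside on the six 1.6TB SAS SSDs that the report lists for VM storage.

```python
# Sketch only: totals the VM allocations against the client server's hardware.
VM_COUNT = 8
VM_MEMORY_GB = 120
VM_DISK_GB = 300

HOST_DIMMS = 32
DIMM_GB = 32
VM_STORAGE_SSDS = 6
SSD_GB = 1600  # 1.6TB per SAS SSD

host_memory_gb = HOST_DIMMS * DIMM_GB
vm_memory_gb = VM_COUNT * VM_MEMORY_GB
vm_disk_gb = VM_COUNT * VM_DISK_GB
vm_storage_gb = VM_STORAGE_SSDS * SSD_GB

print(f"VM memory: {vm_memory_gb} of {host_memory_gb} GB")
print(f"VM disk:   {vm_disk_gb} of {vm_storage_gb} GB")
```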

None.

One 100GbE network port in the storage server is directly connected with one 100GbE network port in the client server. The 24 filesystems are created and shared from the storage server to 8 VM clients in the client server. The 24 filesystems (nvme0n1 nvme1n1 ... nvme23n1) are mounted to 8 VM clients (test1 test2 ... test8).
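The mount layout described above can be enumerated programmatically. The sketch below assumes that every VM client mounts all 24 filesystems, which is one reading of the description and is not confirmed by the report. The server name `storage-server` and the `/mnt/<device>` paths are hypothetical placeholders.

```python
# Sketch only: enumerates (client, filesystem) mount pairs.
# Assumption: each of the 8 VM clients mounts all 24 exported filesystems.
from itertools import product

filesystems = [f"nvme{i}n1" for i in range(24)]   # nvme0n1 ... nvme23n1
clients = [f"test{i}" for i in range(1, 9)]       # test1 ... test8

OPTIONS = "vers=4,rsize=1048576,wsize=1048576"
SERVER = "storage-server"  # hypothetical host name

mount_plan = [
    (client, f"mount -t nfs -o {OPTIONS} {SERVER}:/mnt/{fs} /mnt/{fs}")
    for client, fs in product(clients, filesystems)
]

print(f"{len(mount_plan)} mounts in total")
print(mount_plan[0])
```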

None.

None of the components used to perform the test were patched with Spectre or Meltdown patches (CVE-2017-5754, CVE-2017-5753, CVE-2017-5715).

Generated on Thu Jun 30 22:45:52 2022 by SpecReport
