SPEC SFS®2014_vda Result

Copyright © 2016-2021 Standard Performance Evaluation Corporation

|

SPEC SFS®2014_vda ResultCopyright © 2016-2021 Standard Performance Evaluation Corporation |

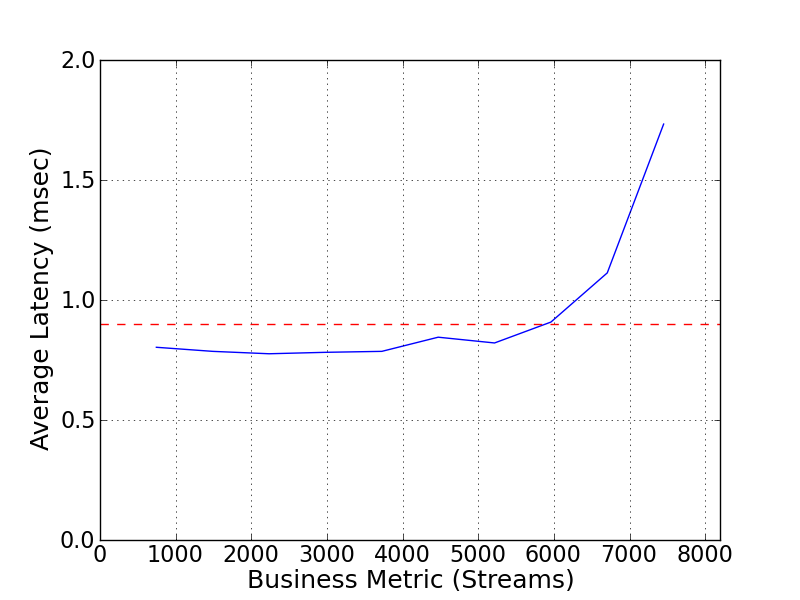

| Quantum Corporation | SPEC SFS2014_vda = 7450 Streams |
|---|---|
| Quantum StorNext 7.0.1 with F-Series Storage Nodes | Overall Response Time = 0.90 msec |


| Quantum StorNext 7.0.1 with F-Series Storage Nodes | |
|---|---|
| Tested by | Quantum Corporation |
| Hardware Available | January 2021 |
| Software Available | January 2021 |
| Date Tested | January 2021 |
| License Number | 4761 |
| Licensee Locations | Mendota Heights, Minnesota |

StorNext File System (SNFS) is a software platform designed to manage massive amounts of data throughout its lifecycle, delivering the required balance of high performance, data protection and preservation, scalability, and cost. StorNext was designed specifically for large unstructured data sets, including video workloads, where low-latency, predictable performance is required. SNFS is a POSIX-compliant, scale-out parallel file system that supports hundreds of petabytes and billions of files in a single namespace. Clients connect either to the front end using NAS protocols or directly to the back-end storage network using a dedicated client. Value-added data services provide integrated data protection and policy-based movement of files between multiple tiers of primary and secondary storage, including cloud. Because it is storage- and server-agnostic, SNFS can run on customer-supplied hardware or on Quantum server and storage nodes.

| Item No | Qty | Type | Vendor | Model/Name | Description |
|---|---|---|---|---|---|
| 1 | 1 | Parallel File System | Quantum | StorNext V7.0.1 | High-performance parallel file system; scales capacity and performance across storage nodes; supports multiple operating systems |
| 2 | 10 | F1000 Storage Node | Quantum | F-Series NVMe Storage | Single F1000 node. Each node has 10 Micron 9300 MTFDHAL15T3TDP 15.36TB NVMe SSDs, a single AMD EPYC processor (7261, 8-core @ 2.5GHz), 64GB memory, and 2 x dual-port Mellanox ConnectX-5 100GbE HBAs (MCX518A-CCAT), with 2 x 100GbE connections to the switch fabric, one per Ethernet adapter |
| 3 | 14 | Clients | Quantum | Xcellis Workflow Extender (XWE) Gen2 | Quantum XWE. Each 1U server has 192GB memory, dual Intel(R) Xeon(R) Silver 4110 CPUs @ 2.10GHz (8 cores each), and 2 x dual-port 100GbE Mellanox MT28800 [ConnectX-5 Ex] adapters, with 2 x 100GbE connections to the switch fabric, one per Ethernet adapter |
| 4 | 1 | Metadata Controller | Quantum | Xcellis Workflow Director (XWD) Gen2 | Two 1U servers in a high-availability (HA) pair. Each server has 64GB memory, dual Intel(R) Xeon(R) Silver 4110 CPUs @ 2.10GHz (8 cores each), and 1 x dual-port 100GbE Mellanox MT28800 [ConnectX-5] adapter; each ConnectX-5 card connects to the switch infrastructure with a single DAC cable, for administrative purposes only. Note: the secondary node is also used as the "SPEC Prime". |
| 5 | 2 | 100GbE switch | Arista | Arista DCS-7060CX2-32S-F | 32-port 100GbE Ethernet switch |
| 6 | 1 | 1GbE switch | Netgear | Netgear ProSAFE GS752TP | 48-port 1GbE Ethernet switch |
| 7 | 1 | 1GbE switch | Dell | Dell PowerConnect 6248 | 48-port 1GbE Ethernet switch |

| Item No | Component | Type | Name and Version | Description |
|---|---|---|---|---|
| 1 | Storage Node | Operating System | Quantum CSP 1.2.0 | Cloud Storage Platform (CSP) operating system on storage nodes |
| 2 | Client | Operating System | CentOS 7.7 | Operating system on load generators (clients) |
| 3 | StorNext Metadata Controllers | Operating System | CentOS 7.7 | Operating system on metadata controllers |
| 4 | SPEC SFS Prime | Operating System | CentOS 7.7 | Operating system on SPEC SFS Prime |


All F1000 nodes were stock installations; no hardware alterations were needed.

| Component | Parameter Name | Value | Description |
|---|---|---|---|
| F1000 Storage Node | Jumbo Frames | 9000 | Set jumbo frames (MTU) to 9000 |
| Client | Jumbo Frames | 9000 | Set jumbo frames (MTU) to 9000 |
| Client | nr_requests | 512 | Maximum number of read and write requests that can be queued at one time |
| Client | scheduler | noop | Linux I/O scheduler |

Each client used the following mount options: `cachebufsize=128k,buffercachecap=16384,dircachesize=32m,buffercache_iods=32,bufferlowdirty=6144,bufferhighdirty=12288`. Both `scheduler` and `nr_requests` were set in `/usr/cvfs/config/deviceparams` on each client.
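The client-side settings can be expressed as data. The following Python sketch parses the mount-option string exactly as listed and, separately, shows where the generic Linux block layer exposes the `scheduler` and `nr_requests` attributes (`/sys/block/<device>/queue/...`). It is a dry run that only builds path/value pairs: it does not reproduce the format of StorNext's `deviceparams` file, which this report does not describe, and the device names `sdb` and `sdc` as well as the helper names are hypothetical.

```python
# Mount options copied verbatim from the client configuration.
CLIENT_MOUNT_OPTIONS = (
    "cachebufsize=128k,buffercachecap=16384,dircachesize=32m,"
    "buffercache_iods=32,bufferlowdirty=6144,bufferhighdirty=12288"
)

# Block-queue settings from the client tuning table.
QUEUE_SETTINGS = {"scheduler": "noop", "nr_requests": "512"}


def parse_mount_options(opts: str) -> dict[str, str]:
    """Split a comma-separated option string into {name: value}.

    Options without '=' (plain flags) map to an empty string.
    """
    parsed: dict[str, str] = {}
    for item in opts.split(","):
        if not item:
            continue  # tolerate stray commas
        name, _, value = item.partition("=")
        parsed[name.strip()] = value.strip()
    return parsed


def sysfs_queue_writes(devices: list[str]) -> dict[str, str]:
    """Build (but do not apply) the generic Linux sysfs writes per device.

    Device names are hypothetical; applying these would require root.
    """
    writes: dict[str, str] = {}
    for dev in devices:
        for attr, value in QUEUE_SETTINGS.items():
            writes[f"/sys/block/{dev}/queue/{attr}"] = value
    return writes


if __name__ == "__main__":
    for name, value in parse_mount_options(CLIENT_MOUNT_OPTIONS).items():
        print(f"{name:17} {value}")
    for path, value in sysfs_queue_writes(["sdb", "sdc"]).items():
        print(f"echo {value} > {path}")
```

Running the script prints the six parsed options and one `echo` line per device attribute, which could be compared against a client's current settings.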

None

| Item No | Description | Data Protection | Stable Storage | Qty |
|---|---|---|---|---|
| 1 | Micron 9300 15.36TB NVMe SSD, 10 per storage node | RAID 10 | Yes | 100 |

| Number of Filesystems | Total Capacity | Filesystem Type |
|---|---|---|
| 1 | 431 TiB | StorNext |

A single StorNext file system was created across all 10 storage nodes, using a total of 20 LUNs built from 100 NVMe SSDs. The file system uses a single stripe group with `stripebreadth=2.5MB` and a round-robin pattern. Metadata and user data are combined in that stripe group and striped across all of its LUNs.
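To illustrate what round-robin striping with a 2.5MB stripe breadth across 20 LUNs means for an individual I/O, here is a simplified Python model. It assumes that "2.5MB" means 2.5 MiB and that a run of data occupies consecutive stripe-breadth chunks starting on LUN 0; it ignores StorNext's actual extent allocation, and the function names are hypothetical.

```python
MIB = 1024 * 1024

# Assumption: the "2.5MB" stripe breadth is interpreted as 2.5 MiB.
STRIPE_BREADTH = int(2.5 * MIB)  # 2,621,440 bytes
NUM_LUNS = 20                    # 10 storage nodes x 2 LUNs each


def lun_for_offset(offset: int, breadth: int = STRIPE_BREADTH,
                   luns: int = NUM_LUNS) -> tuple[int, int]:
    """Return (lun_index, chunk_index) for a byte offset in a contiguous run.

    Simplified round-robin model: chunk k lands on LUN k mod luns.
    """
    if offset < 0:
        raise ValueError("offset must be non-negative")
    chunk = offset // breadth
    return chunk % luns, chunk


def luns_touched(offset: int, length: int, breadth: int = STRIPE_BREADTH,
                 luns: int = NUM_LUNS) -> list[int]:
    """List the LUNs spanned by an I/O of `length` bytes at `offset`."""
    if length <= 0:
        return []
    first = offset // breadth
    last = (offset + length - 1) // breadth
    if last - first + 1 >= luns:
        return list(range(luns))  # the I/O wraps past every LUN at least once
    return sorted({chunk % luns for chunk in range(first, last + 1)})


if __name__ == "__main__":
    full_stripe = STRIPE_BREADTH * NUM_LUNS
    print(f"full stripe width: {full_stripe // MIB} MiB")  # 50 MiB
    print(luns_touched(0, 10 * MIB))  # [0, 1, 2, 3]
```

Under this model, an aligned 10 MiB read touches four LUNs, and any I/O of 50 MiB or more engages all 20.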

Each F1000 contains 10 NVMe devices configured as RAID 10; the resulting volume is sliced into two LUNs per node.
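The per-node protection can be illustrated with a generic RAID 10 model: data is striped across mirrored pairs, so 10 devices would form five pairs and each chunk is written to both devices of one pair. This is a textbook sketch, not the F1000's documented implementation; the pairing order (0, 1), (2, 3), ... and the function names are assumptions.

```python
from typing import NamedTuple


class Raid10Location(NamedTuple):
    mirror_pair: int          # which mirrored pair holds the chunk
    devices: tuple[int, int]  # both devices that store a copy of the chunk
    row: int                  # chunk position within each device of the pair


def raid10_locate(chunk: int, num_devices: int = 10) -> Raid10Location:
    """Map a logical chunk to its mirrored pair (textbook RAID 1+0 layout).

    Assumes devices are paired as (0, 1), (2, 3), ... and that logical
    chunks are striped round-robin across the pairs.
    """
    if num_devices < 4 or num_devices % 2:
        raise ValueError("RAID 10 needs an even number of devices, at least 4")
    if chunk < 0:
        raise ValueError("chunk must be non-negative")
    pairs = num_devices // 2
    pair = chunk % pairs
    return Raid10Location(pair, (2 * pair, 2 * pair + 1), chunk // pairs)


def survives(failed: set[int], num_devices: int = 10) -> bool:
    """True if every mirrored pair still has at least one working device."""
    return all(not {2 * p, 2 * p + 1} <= failed for p in range(num_devices // 2))


if __name__ == "__main__":
    for chunk in range(6):
        print(chunk, raid10_locate(chunk))
    print(survives({0, 2}))  # True: one device lost in two different pairs
    print(survives({0, 1}))  # False: both copies in pair 0 are gone
```

The model shows why RAID 10 tolerates multiple device failures only when they fall in different mirrored pairs.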

| Item No | Transport Type | Number of Ports Used | Notes |
|---|---|---|---|
| 1 | 100GbE | 20 | Storage nodes used a total of 20 100GbE ports, 2 per node |
| 2 | 100GbE | 28 | Load generators (clients) used a total of 28 100GbE ports, 2 per client |
| 3 | 100GbE | 1 | The primary Xcellis Workflow Director Gen 2 metadata controller used 1 100GbE port, for administration purposes only |
| 4 | 100GbE | 1 | The secondary Xcellis Workflow Director Gen 2 metadata controller (also the Prime) used 1 100GbE port, for administration purposes only |

The core switch configuration consisted of two independent 100GbE subnets. Each storage node and client had dual 100GbE connections, one to each switch.
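The cabling can be cross-checked against the port counts in the transport and switch tables. This Python sketch tallies 100GbE ports per host group and computes the nominal aggregate line rate (port count times 100 Gbit/s, which is not a measured throughput). Counting one port per metadata controller follows the transport table; the class and function names are illustrative.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HostGroup:
    name: str
    hosts: int
    ports_per_host: int  # 100GbE ports cabled to the core switches


GROUPS = [
    HostGroup("storage nodes", 10, 2),        # one port to each switch
    HostGroup("clients", 14, 2),              # one port to each switch
    HostGroup("metadata controllers", 2, 1),  # administration only
]
SWITCHES = 2
PORTS_PER_SWITCH = 32
LINK_GBITS = 100


def used_ports(groups: list[HostGroup]) -> int:
    """Total 100GbE switch ports consumed by all host groups."""
    return sum(g.hosts * g.ports_per_host for g in groups)


def dual_homed_ports_per_switch(groups: list[HostGroup]) -> int:
    """Ports each switch receives from hosts with one link per switch."""
    return sum(g.hosts for g in groups if g.ports_per_host == SWITCHES)


def nominal_gbits(group: HostGroup) -> int:
    """Aggregate line rate of a group's links; not a measured throughput."""
    return group.hosts * group.ports_per_host * LINK_GBITS


if __name__ == "__main__":
    print(f"used {used_ports(GROUPS)} of {SWITCHES * PORTS_PER_SWITCH} ports")
    print(f"dual-homed ports per switch: {dual_homed_ports_per_switch(GROUPS)}")
    for g in GROUPS[:2]:
        print(f"{g.name}: {nominal_gbits(g)} Gbit/s nominal")
```

The totals agree with the switch table: 48 ports for storage nodes and clients (24 on each switch) plus 2 metadata-controller ports gives 50 of the 64 available.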

| Item No | Switch Name | Switch Type | Total Port Count | Used Port Count | Notes |
|---|---|---|---|---|---|
| 1 | Arista DCS-7060CX2-32S-F (2 switches) | 100GbE | 64 | 50 | 48 ports for storage nodes and clients, 1 port for the primary metadata controller, and 1 port for the secondary metadata controller/Prime |
| 2 | Netgear ProSAFE GS752TP | 1GbE | 48 | 47 | Management switch for metadata traffic and administrative access to the SUT; includes an additional 6 ports for the Xcellis Workflow Director |
| 3 | Dell PowerConnect 6248 | 1GbE | 48 | 41 | Management switch for metadata traffic and administrative access to the SUT |

| Item No | Qty | Type | Location | Description | Processing Function |
|---|---|---|---|---|---|
| 1 | 10 | CPU | Storage Node | AMD EPYC 7261 8-Core Processor @ 2.5GHz | Storage |
| 2 | 28 | CPU | Load Generator, Client | Intel(R) Xeon(R) Silver 4110 CPU, 8 cores @ 2.10GHz | StorNext Client |
| 3 | 2 | CPU | Prime, Client | Intel(R) Xeon(R) Silver 4110 CPU, 8 cores @ 2.10GHz | SPEC SFS2014 Prime |

None

| Description | Size in GiB | Number of Instances | Nonvolatile | Total GiB |
|---|---|---|---|---|
| F1000 Storage memory | 64 | 10 | V | 640 |
| Client memory | 192 | 14 | V | 2688 |
| Prime memory | 64 | 1 | V | 64 |
| Grand Total Memory Gibibytes | | | | 3392 |

Each storage node has 64 GiB of memory, for a total of 640 GiB. Each client has 192 GiB of memory, for a total of 2,688 GiB. The Prime has 64 GiB of memory.
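The memory totals can be reproduced directly from the memory table: each row's total is size times instances, and the grand total is their sum. A short Python check using only the values in the table:

```python
# (description, GiB per instance, number of instances) from the memory table
MEMORY = [
    ("F1000 storage memory", 64, 10),
    ("Client memory", 192, 14),
    ("Prime memory", 64, 1),
]


def memory_totals(rows: list[tuple[str, int, int]]) -> tuple[dict[str, int], int]:
    """Return per-row totals in GiB and their grand total."""
    per_row = {name: size * count for name, size, count in rows}
    return per_row, sum(per_row.values())


per_row, grand_total = memory_totals(MEMORY)
for name, total in per_row.items():
    print(f"{name:22} {total:>5} GiB")
print(f"{'Grand total':22} {grand_total:>5} GiB")  # 3392 GiB
```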

The F1000 storage node does not use a write cache to hold data in flight, so writes are committed to disk immediately. All data, including metadata, is protected in the file system by a RAID 10 storage configuration. The entire SUT, including both storage nodes and clients, is protected with redundant power supplies. The F1000 nodes and clients each have two NVMe system devices in a 1+1 configuration for OS redundancy. The metadata servers are configured for high availability, which ensures file system access in the event of a metadata server failure.

The solution is a standardized Quantum configuration, with Xcellis Workflow Directors managing metadata for the file system. The F1000 storage nodes are off-the-shelf nodes designed for high-performance streaming media as well as high IOPS for highly random workflows. The file system was configured as described in the "Filesystem Creation Notes" above. This configuration is intended to accommodate a mixed workload of random and sequential I/O, with metadata combined with user data and striped across the file system.

None

The entire SUT is connected as shown in the SUT diagram. Each of the 14 clients is directly connected using iSER/RDMA. No special tuning parameters were applied to these connections. A single file system was created and shared as a StorNext file system.

None

None

Generated on Thu Feb 18 15:16:38 2021 by SpecReport
