SPEC SFS®2014_swbuild Result

Copyright © 2016-2019 Standard Performance Evaluation Corporation


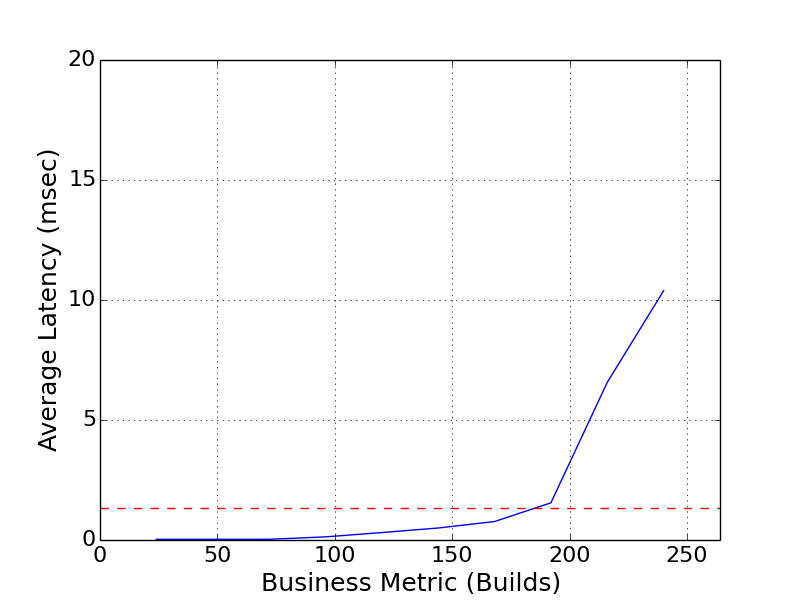

| IBM Corporation | SPEC SFS2014_swbuild = 240 Builds |
|---|---|
| IBM Spectrum Scale 4.2.1 with Cisco UCS and IBM FlashSystem 900 | Overall Response Time = 1.32 msec |

| Tested by | Hardware Available | Software Available | Date Tested | License Number | Licensee Locations |
|---|---|---|---|---|---|
| IBM Corporation | February 2016 | September 2016 | November 2016 | 11 | Raleigh, NC USA |

IBM Spectrum Scale helps solve the challenge of explosive growth of unstructured data against a flat IT budget. Spectrum Scale provides unified file and object software-defined storage for high-performance, large-scale workloads on-premises or in the cloud. Spectrum Scale includes the protocols, services and performance required by many industries, including Technical Computing, Big Data, HDFS and business-critical content repositories. IBM Spectrum Scale provides world-class storage management with extreme scalability, flash-accelerated performance, and automatic policy-based storage tiering from flash through disk to tape, reducing storage costs up to 90% while improving security and management efficiency in cloud, big data and analytics environments.

Cisco UCS is the first truly unified data center platform that combines industry-standard, x86-architecture servers with networking and storage access into a single system. The system is intelligent infrastructure that is automatically configured through integrated, model-based management to simplify and accelerate deployment of all kinds of applications. The system's x86-architecture rack and blade servers are powered exclusively by Intel(R) Xeon(R) processors and enhanced with Cisco innovations. These innovations include the capability to abstract and automatically configure the server state, built-in virtual interface cards (VICs), and leading memory capacity. Cisco's enterprise-class servers deliver world-record performance to power mission-critical workloads. Cisco UCS is integrated with a standards-based, high-bandwidth, low-latency, virtualization-aware 10-Gbps unified fabric, with a new generation of Cisco UCS fabric enabling an update to 40 Gbps.

| Item No | Qty | Type | Vendor | Model/Name | Description |

|---|---|---|---|---|---|

| 1 | 1 | Blade Server Chassis | Cisco | UCS 5108 | The Cisco UCS 5108 Blade Server Chassis features flexible bay configurations for blade servers. It can support up to eight half-width blades, up to four full-width blades, or up to two full-width double-height blades in a compact 6-rack-unit (6RU) form factor. |

| 2 | 4 | Blade Server, Spectrum Scale Node | Cisco | UCS B200 M4 | UCS B200 M4 Blade Servers, each with two Intel Xeon E5-2680 v3 processors (24 cores per node) and 256 GB of memory. |

| 3 | 2 | Fabric Interconnect | Cisco | UCS 6332-16UP | Cisco UCS 6300 Series Fabric Interconnects support line-rate, lossless 40 Gigabit Ethernet and FCoE connectivity. |

| 4 | 2 | Fabric Extender | Cisco | UCS 2304 | Cisco UCS 2300 Series Fabric Extenders can support up to four 40-Gbps unified fabric uplinks per fabric extender connecting to the fabric interconnect. |

| 5 | 4 | Virtual Interface Card | Cisco | UCS VIC 1340 | The Cisco UCS Virtual Interface Card (VIC) 1340 is a 2-port, 40 Gigabit Ethernet, Fibre Channel over Ethernet (FCoE)-capable modular LAN on motherboard (mLOM) mezzanine adapter. |

| 6 | 2 | FlashSystem | IBM | 9840-AE2 | Each FlashSystem was configured with 12 2.9TB IBM MicroLatency modules (feature code AF24). |

| Item No | Component | Type | Name and Version | Description |

|---|---|---|---|---|

| 1 | Spectrum Scale Nodes | Spectrum Scale File System | 4.2.1.1 | The Spectrum Scale File System is a distributed file system that runs on the Cisco UCS B200 M4 servers to form a cluster. The cluster allows for the creation and management of single namespace file systems. |

| 2 | Spectrum Scale Nodes | Operating System | Red Hat Enterprise Linux 7.2 for x86_64 | The operating system on the Spectrum Scale nodes was 64-bit Red Hat Enterprise Linux version 7.2. |

| 3 | FlashSystem 900 | Storage System | 1.4.4.2 | The FlashSystem software covers all aspects of administering, configuring, and monitoring the FlashSystem 900. |

Spectrum Scale Nodes

| Parameter Name | Value | Description |
|---|---|---|
| numaMemoryInterleave | yes | Enables memory interleaving on NUMA based systems. |
| multipath device: path_selector | queue-length 0 | Determines which algorithm to use when selecting paths. With this value the path with the least amount of outstanding I/O is selected. |
| multipath device: path_grouping_policy | multibus | Determines which grouping policy to use for a set of paths. The multibus value causes all paths to be placed in one priority group. |

The first configuration parameter was set using the "mmchconfig" command on one of the nodes in the cluster. The multipath device parameters were set in the multipath.conf file on each node. A template multipath.conf file for the FlashSystem can be found in the "Implementing IBM FlashSystem 900" Redbook, published by IBM.

Spectrum Scale Nodes

| Parameter Name | Value | Description |
|---|---|---|
| ignorePrefetchLUNCount | yes | Specifies that only maxMBpS and not the number of LUNs should be used to dynamically allocate prefetch threads. |
| maxblocksize | 1M | Specifies the maximum file system block size. |
| maxMBpS | 10000 | Specifies an estimate of how many megabytes of data can be transferred per second into or out of a single node. |
| maxStatCache | 0 | Specifies the number of inodes to keep in the stat cache. |
| pagepoolMaxPhysMemPct | 90 | Percentage of physical memory that can be assigned to the page pool. |
| scatterBufferSize | 256K | Specifies the size of the scatter buffers. |
| workerThreads | 1024 | Controls the maximum number of concurrent file operations at any one instant, as well as the degree of concurrency for flushing dirty data and metadata in the background and for prefetching data and metadata. |
| maxFilesToCache | 11M | Specifies the number of inodes to cache for recently used files that have been closed. |
| pagepool | 96G | Specifies the size of the cache on each node. |
| nsdBufSpace | 70 | Sets the percentage of the pagepool that is used for NSD (Network Shared Disk) buffers. |
| nsdMaxWorkerThreads | 3072 | Sets the maximum number of threads to use for block level I/O on the NSDs. |
| nsdMinWorkerThreads | 3072 | Sets the minimum number of threads to use for block level I/O on the NSDs. |
| nsdMultiQueue | 64 | Specifies the maximum number of queues to use for NSD I/O. |
| nsdThreadsPerDisk | 3 | Specifies the maximum number of threads to use per NSD. |
| nsdThreadsPerQueue | 48 | Specifies the maximum number of threads to use per NSD I/O queue. |
| nsdSmallThreadRatio | 1 | Specifies the ratio of small thread queues to large thread queues. |

The configuration parameters were set using the "mmchconfig" command on one of the nodes in the cluster. The nodes used mostly default tuning parameters. A discussion of Spectrum Scale tuning can be found in the official documentation for the mmchconfig command and on the IBM developerWorks wiki.
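The report does not list the exact command lines that were run. As a hedged sketch, the tuning values from the table could be assembled into a single `mmchconfig` invocation using the command's comma-separated `attribute=value` form. The helper name `mmchconfig_command` is hypothetical, and some attributes may need a daemon restart or extra options before they take effect, so the mmchconfig documentation should be checked before running anything like this.

```python
# Tuning values copied from the parameter table in this report.
TUNING = {
    "ignorePrefetchLUNCount": "yes",
    "maxblocksize": "1M",
    "maxMBpS": "10000",
    "maxStatCache": "0",
    "pagepoolMaxPhysMemPct": "90",
    "scatterBufferSize": "256K",
    "workerThreads": "1024",
    "maxFilesToCache": "11M",
    "pagepool": "96G",
    "nsdBufSpace": "70",
    "nsdMaxWorkerThreads": "3072",
    "nsdMinWorkerThreads": "3072",
    "nsdMultiQueue": "64",
    "nsdThreadsPerDisk": "3",
    "nsdThreadsPerQueue": "48",
    "nsdSmallThreadRatio": "1",
}


def mmchconfig_command(params):
    """Build one command string (hypothetical helper; it does not run anything)."""
    pairs = ",".join(f"{name}={value}" for name, value in params.items())
    return f"mmchconfig {pairs}"


command = mmchconfig_command(TUNING)
print(command)
```

Building the string rather than executing it keeps the sketch safe to run on a machine without Spectrum Scale installed.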

There were no opaque services in use.

| Item No | Description | Data Protection | Stable Storage | Qty |

|---|---|---|---|---|

| 1 | FlashSystem 900 volumes, 700 GiB each, used as Network Shared Disks for Spectrum Scale. | RAID-5 | Yes | 64 |

| 2 | FlashSystem 900 volumes, 100 GiB each, used to store the operating system of each Spectrum Scale node. | RAID-5 | Yes | 4 |

| Number of Filesystems | Total Capacity | Filesystem Type |
|---|---|---|
| 1 | 44800 GiB | Spectrum Scale File System |

A single Spectrum Scale file system was created with a 128 MiB block size for data and metadata, 4 KiB inode size, and a 64 MiB log size. The file system was spread across all of the Network Shared Disks (NSDs). Each client node mounted the file system. The file system parameters reflect values that might be used in a typical small file, metadata intensive environment.

The nodes each had an ext4 file system that hosted the operating system.

Each of the FlashSystem 900 systems had twelve 2.9 TiB flash modules. On each system one module was used as a spare and the remaining 11 modules were configured into a RAID-5 array. At that point volumes were created and mapped to the hosts, which were the Spectrum Scale nodes.

There were two sets of volumes used in the benchmark. On one of the FlashSystem 900 systems, four 100 GiB volumes were created, and each was mapped to a single host to be used for the node operating system. On each of the two FlashSystem 900 systems, thirty-two 700 GiB volumes were created, and each volume was mapped to all four hosts. These volumes were configured as NSDs by Spectrum Scale and used as storage for the Spectrum Scale file system.
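The volume layout above can be checked against the quantities and total capacity reported in the storage tables. The following is a minimal sketch of that arithmetic; the variable names are illustrative and every number comes from this report.

```python
# Volume layout as described in the report.
FLASH_SYSTEMS = 2
MODULES_PER_SYSTEM = 12
SPARE_MODULES_PER_SYSTEM = 1

NSD_VOLUMES_PER_SYSTEM = 32
NSD_VOLUME_GIB = 700

OS_VOLUMES = 4          # all on one FlashSystem, one per node
OS_VOLUME_GIB = 100

# Modules left for the RAID-5 array on each system.
raid5_modules = MODULES_PER_SYSTEM - SPARE_MODULES_PER_SYSTEM

# Volumes and capacity backing the Spectrum Scale file system.
nsd_volumes = FLASH_SYSTEMS * NSD_VOLUMES_PER_SYSTEM
nsd_capacity_gib = nsd_volumes * NSD_VOLUME_GIB

# Capacity used for the node operating systems.
os_capacity_gib = OS_VOLUMES * OS_VOLUME_GIB

print(f"RAID-5 modules per system: {raid5_modules}")
print(f"NSD volumes: {nsd_volumes}, file system capacity: {nsd_capacity_gib} GiB")
print(f"OS volume capacity: {os_capacity_gib} GiB")
```

The 64 NSD volumes and 44800 GiB match the quantity and total capacity given in the tables.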

The cluster used a single-tier architecture. The Spectrum Scale nodes performed both file and block level operations. Each node had access to all of the NSDs, so any file operation on a node was translated to a block operation and serviced on the same node.

| Item No | Transport Type | Number of Ports Used | Notes |

|---|---|---|---|

| 1 | 40 GbE cluster network | 4 | Each node connects to a 40 GbE administration network with MTU=1500 |

| 2 | 16 Gbps SAN | 24 | There were 16 total connections from storage and 8 total connections from servers. |

Each of the Cisco UCS B200 M4 blade servers comes with a Cisco UCS Virtual Interface Card 1340. The two-port card supports 40 GbE and FCoE. To the operating system on the blade servers the card appears as a NIC for Ethernet and as an HBA for Fibre Channel connectivity. Physically the card connects to the UCS 2304 fabric extenders via internal chassis connections. The eight total ports from the fabric extenders connect to the UCS 6332-16UP fabric interconnects. The two fabric interconnects function as both 16 Gbps FC switches and as 40 Gbps Ethernet switches.

| Item No | Switch Name | Switch Type | Total Port Count | Used Port Count | Notes |

|---|---|---|---|---|---|

| 1 | Cisco UCS 6332-16UP #1 | 40 GbE and 16 Gbps FC | 40 | 12 | The default configuration was used on the switch. |

| 2 | Cisco UCS 6332-16UP #2 | 40 GbE and 16 Gbps FC | 40 | 12 | The default configuration was used on the switch. |
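The port counts in the transport and switch tables can be reconciled with a short calculation. This sketch assumes the storage connections and the fabric extender uplinks are split evenly across the two fabric interconnects, which the report does not state explicitly; it is one reading that is consistent with the published numbers.

```python
# Connection counts taken from the transport table and the text.
STORAGE_FC_CONNECTIONS = 16    # from the two FlashSystem 900 systems
SERVER_CONNECTIONS = 8         # from the servers, via the fabric extenders
FABRIC_INTERCONNECTS = 2
USED_PORTS_PER_SWITCH = 12     # from the switch table

# Total SAN ports reported in the transport table.
san_ports = STORAGE_FC_CONNECTIONS + SERVER_CONNECTIONS

# Assumption: connections are divided evenly between the two switches.
storage_per_switch = STORAGE_FC_CONNECTIONS // FABRIC_INTERCONNECTS
uplinks_per_switch = SERVER_CONNECTIONS // FABRIC_INTERCONNECTS
derived_used_per_switch = storage_per_switch + uplinks_per_switch

print(f"SAN ports: {san_ports}")
print(f"Used ports per switch (derived): {derived_used_per_switch}")
print(f"Matches switch table: {derived_used_per_switch == USED_PORTS_PER_SWITCH}")
```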

| Item No | Qty | Type | Location | Description | Processing Function |

|---|---|---|---|---|---|

| 1 | 8 | CPU | Spectrum Scale client nodes | Intel Xeon CPU E5-2680 v3 @ 2.50GHz 12-core | Spectrum Scale nodes, load generator, device drivers |

Each of the Spectrum Scale client nodes had 2 physical processors. Each processor had 12 cores with two threads per core.
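As a quick check, the processor counts above can be tied back to the quantity of 8 CPUs in the table and the 24 cores per node listed in the bill of materials. The variable names below are illustrative only.

```python
# Processor layout as described in the report.
NODES = 4
PROCESSORS_PER_NODE = 2
CORES_PER_PROCESSOR = 12
THREADS_PER_CORE = 2

total_processors = NODES * PROCESSORS_PER_NODE
cores_per_node = PROCESSORS_PER_NODE * CORES_PER_PROCESSOR
total_cores = NODES * cores_per_node
total_hw_threads = total_cores * THREADS_PER_CORE

print(f"Processors: {total_processors}")
print(f"Cores per node: {cores_per_node}, cluster-wide: {total_cores}")
print(f"Hardware threads cluster-wide: {total_hw_threads}")
```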

| Description | Size in GiB | Number of Instances | Nonvolatile | Total GiB |
|---|---|---|---|---|
| Spectrum Scale node system memory | 256 | 4 | V | 1024 |

Grand Total Memory Gibibytes: 1024

Spectrum Scale reserves a portion of the physical memory in each node for file data and metadata caching. A portion of the memory is also reserved for buffers used for node to node communication.
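To give a sense of scale for that reservation, the sketch below combines the memory table with the `pagepool`, `pagepoolMaxPhysMemPct`, and `nsdBufSpace` values from the tuning table. It assumes that the `96G` setting means 96 GiB and that `nsdBufSpace` applies directly to the configured pagepool size; both are interpretations rather than statements from the report.

```python
# Values from the memory table and the tuning parameter table.
NODES = 4
NODE_MEMORY_GIB = 256
PAGEPOOL_GIB = 96                 # assumption: "96G" is read as 96 GiB
PAGEPOOL_MAX_PHYS_MEM_PCT = 90
NSD_BUF_SPACE_PCT = 70

total_memory_gib = NODES * NODE_MEMORY_GIB
total_pagepool_gib = NODES * PAGEPOOL_GIB

# Share of each node's memory taken by the pagepool.
pagepool_pct_of_node = PAGEPOOL_GIB / NODE_MEMORY_GIB * 100

# Portion of the pagepool available for NSD buffers on each node.
nsd_buffer_gib = PAGEPOOL_GIB * NSD_BUF_SPACE_PCT / 100

print(f"Total memory: {total_memory_gib} GiB")
print(f"Total pagepool: {total_pagepool_gib} GiB")
print(f"Pagepool share of node memory: {pagepool_pct_of_node:.1f}%")
print(f"Within configured limit: {pagepool_pct_of_node <= PAGEPOOL_MAX_PHYS_MEM_PCT}")
print(f"NSD buffer space per node: {nsd_buffer_gib:.1f} GiB")
```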

All of the storage used by the benchmark was non-volatile flash storage. Modified writes were not acknowledged as complete until the data was written to the FlashSystem 900s. Each FlashSystem has two battery modules that in the case of a power failure allow the system to remain powered long enough for all of the data in the system's write cache to be committed to the flash modules.

The solution under test was a Spectrum Scale cluster optimized for small file, metadata intensive environments. The Spectrum Scale nodes were also the load generators for the benchmark. The benchmark was executed from one of the nodes.

The WARMUP_TIME for the benchmark was 900 seconds.

The 4 Spectrum Scale nodes were the load generators for the benchmark. Each load generator had access to the single namespace Spectrum Scale file system. The benchmark accessed a single mount point on each load generator. In turn, each of the mount points corresponded to a single shared base directory in the file system. The nodes processed the file operations, and the data requests to and from the backend storage were serviced locally on each node. Block access to each LUN on the nodes was controlled via Linux multipath.

IBM, IBM Spectrum Scale, IBM FlashSystem, and MicroLatency are trademarks of International Business Machines Corp., registered in many jurisdictions worldwide.

Cisco UCS is a trademark of Cisco in the USA and certain other countries.

Intel and Xeon are trademarks of the Intel Corporation in the U.S. and/or other countries.

None

Generated on Wed Mar 13 16:50:35 2019 by SpecReport
