SPECsfs2008_nfs.v3 Result

Huawei : Huawei OceanStor 9000 Storage System, 100 nodes

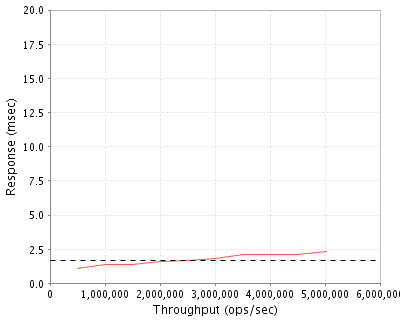

SPECsfs2008_nfs.v3 = 5030264 Ops/Sec (Overall Response Time = 1.65 msec)

Performance

| Throughput (ops/sec) | Response (msec) |
|---|---|
| 500323 | 1.1 |
| 1002290 | 1.4 |
| 1503680 | 1.4 |
| 2005790 | 1.6 |
| 2511513 | 1.7 |
| 3011686 | 1.8 |
| 3516148 | 2.1 |
| 4028616 | 2.1 |
| 4506490 | 2.1 |
| 5030264 | 2.3 |
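The overall response time in the headline result can be cross-checked against the ten data points. The sketch below assumes that the overall response time is the area under the response-time-versus-throughput curve (trapezoidal rule, starting from the origin) divided by the peak throughput. The function name `overall_response_time` is illustrative, and because the published response times are rounded to one decimal place, the result only approximates the reported figure.

```python
# Cross-check of the overall response time (ORT) from the published data points.
# Assumption: ORT = trapezoidal area under the response-time curve, starting at
# the origin (0 ops/sec, 0 msec), divided by the peak throughput.

POINTS = [  # (throughput in ops/sec, response time in msec), copied from the table
    (500323, 1.1),
    (1002290, 1.4),
    (1503680, 1.4),
    (2005790, 1.6),
    (2511513, 1.7),
    (3011686, 1.8),
    (3516148, 2.1),
    (4028616, 2.1),
    (4506490, 2.1),
    (5030264, 2.3),
]


def overall_response_time(points):
    """Return the area under the curve divided by the last (peak) throughput."""
    area = 0.0
    prev_ops, prev_ms = 0, 0.0
    for ops, ms in points:
        # Trapezoid between the previous point and this one.
        area += (ops - prev_ops) * (ms + prev_ms) / 2.0
        prev_ops, prev_ms = ops, ms
    return area / prev_ops  # prev_ops now holds the peak throughput


if __name__ == "__main__":
    print(f"ORT ~= {overall_response_time(POINTS):.2f} msec")
```

Under these assumptions the computed value rounds to the 1.65 msec shown in the headline result.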

Product and Test Information

| | |
|---|---|
| Tested By | Huawei |
| Product Name | Huawei OceanStor 9000 Storage System, 100 nodes |
| Hardware Available | July 2013 |
| Software Available | October 2013 |
| Date Tested | November 2013 |
| SFS License Number | 3175 |
| Licensee Locations | Chengdu Research Institute U9, Huawei Chengdu Base, No.1899, Xiyuan Avenue, Hi-tech Western District, Chengdu, Sichuan Province, CHINA |

The Huawei OceanStor 9000 is a Big Data storage product. Applicable industries include broadcasting media, satellite mapping, genetic research, energy exploration, scientific research and education. With a distributed architecture, the OceanStor 9000 supports seamless scale-out from 3 to a maximum of 288 nodes. All nodes are symmetrically arranged in the OceanStor 9000.

The OceanStor 9000 supports on-demand, hitless scale-out, with capacity and performance increasing linearly as nodes are added. It offers up to 40 PB in a single file system. This scalability helps reduce CAPEX and TCO and lets the OceanStor 9000 adapt to changing storage requirements.

Configuration Bill of Materials

| Item No | Qty | Type | Vendor | Model/Name | Description |
|---|---|---|---|---|---|
| 1 | 100 | Storage Node | Huawei | OceanStor 9000 P Node | OceanStor 9000 high performance node (P Node), including slots for 25 2.5 inch storage devices. |
| 2 | 600 | 200GB SSD | Huawei | Disk drive | HSSD 200GB SAS disk solid state Drive (2.5"). |
| 3 | 1140 | 900GB SAS Disk | Huawei | Disk drive | 900GB 10K RPM SAS Disk Drive (2.5"). |
| 4 | 760 | 600GB SAS Disk | Huawei | Disk drive | 600GB 10K RPM SAS Disk Drive (2.5"). Note: the number of 600GB and 900GB drives was determined by availability of resources in the performance lab. |
| 5 | 1 | 10GE network switch | Huawei | Cloud Engine 12800 switch | Huawei Cloud Engine switch with 400 10GE ports |

Server Software

| | |
|---|---|
| OS Name and Version | Huawei OceanStor 9000 V100R001C00 |
| Other Software | Huawei OceanStor Integrated Storage Management-N V100R001C00 |
| Filesystem Software | WushanFS |

Server Tuning

| Name | Value | Description |
|---|---|---|
| nfsd number | 64 | The number of NFS threads has been increased to 64 by editing /proc/fs/nfsd/work_threads. |
| NFS_MOUNTD_THREAD_NUM | 8 | The maximum number of mount threads the server can run concurrently. |
| tcp_mem | 94371840 | Socket buffer sizes for the 10GE ports. |
| tcp_rmem | 94371840 | Socket receive buffer sizes for the 10GE ports. |
| tcp_wmem | 47185920 | Socket send buffer sizes for the 10GE ports. |
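The three socket buffer values are easier to compare in binary units. The sketch below assumes the values are byte counts, which the table does not state, and simply divides them by 1 MiB; the helper name `to_mib` is illustrative.

```python
# Express the socket buffer tuning values in MiB.
# Assumption: the values are byte counts; the tuning table does not give a unit.

MIB = 1024 * 1024

TUNING = {
    "tcp_mem": 94371840,
    "tcp_rmem": 94371840,
    "tcp_wmem": 47185920,
}


def to_mib(value):
    """Return (whole MiB, leftover bytes) for a raw tuning value."""
    return divmod(value, MIB)


for name, value in TUNING.items():
    mib, rest = to_mib(value)
    print(f"{name}: {value} = {mib} MiB + {rest} bytes")
```

Read this way, tcp_mem and tcp_rmem are exactly 90 MiB and tcp_wmem is exactly 45 MiB.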

Server Tuning Notes

None

Disks and Filesystems

| Description | Number of Disks | Usable Size |
|---|---|---|
| 200GB SSDs | 600 | 109.0 TB |
| 900GB SAS drives | 1140 | 926.2 TB |
| 600GB SAS drives | 760 | 412.7 TB |
| Total | 2500 | 1447.9 TB |

| | |
|---|---|
| Number of Filesystems | 1 |
| Total Exported Capacity | 1482640 GB |
| Filesystem Type | WushanFS |
| Filesystem Creation Options | Default |
| Filesystem Config | Reed-Solomon (8 + 1) |
| Fileset Size | 586008.3 GB |

The system contains 100 P Nodes. 40 of the nodes each contain 6 × 200GB SSDs and 19 × 600GB SAS disks. The other 60 nodes each contain 6 × 200GB SSDs and 19 × 900GB SAS disks (this mix was dictated by the availability of drives in the performance lab).
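The per-node drive layout can be reconciled with the drive quantities listed in the bill of materials and the disk table. The following is a minimal sketch of that arithmetic; the constant and function names are illustrative.

```python
# Reconcile the per-node drive layout with the published drive totals.

SSD_PER_NODE = 6    # 200GB SSDs in every P Node
SAS_PER_NODE = 19   # SAS disks in every P Node
NODE_GROUPS = {"600GB SAS": 40, "900GB SAS": 60}  # nodes per SAS drive type


def drive_counts(groups=NODE_GROUPS):
    """Return the number of drives of each type across all nodes."""
    total_nodes = sum(groups.values())
    counts = {"200GB SSD": total_nodes * SSD_PER_NODE}
    for sas_type, nodes in groups.items():
        counts[sas_type] = nodes * SAS_PER_NODE
    return counts


counts = drive_counts()
print(counts, "total:", sum(counts.values()))
```

The result is 600 SSDs, 760 600GB disks and 1140 900GB disks, 2500 drives in total, and 6 + 19 drives fill the 25 slots of each P Node.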

All 100 nodes are set up in a single filesystem that includes both the SSDs and the SAS disks. The WiseTier software dynamically recognizes hot files and migrates them between the SSDs and the SAS disks; hotspots are stored on the SSDs and colder files on the SAS disks. File data for a given file is striped across 9 P Nodes.

Reed-Solomon erasure coding (8 + 1) is the default protection level for an OceanStor 9000 100-node system. +2 and +3 protection levels are also available.
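For an N + M erasure-coded layout, the stripe width is N + M fragments and the share of raw stripe capacity that holds user data is N / (N + M). The sketch below works this out for 8 + 1, which matches the 9-node striping described above. The 8 + 2 and 8 + 3 rows are assumptions for illustration only: the report says +2 and +3 levels exist but does not give their data widths.

```python
# Stripe width and usable fraction for an N + M erasure-coded layout.
from fractions import Fraction


def stripe_width(data_fragments, parity_fragments):
    """Number of fragments (and hence nodes) one stripe is spread across."""
    return data_fragments + parity_fragments


def usable_fraction(data_fragments, parity_fragments):
    """Fraction of the raw stripe capacity that stores user data."""
    return Fraction(data_fragments, data_fragments + parity_fragments)


# 8 + 1 is the tested configuration; 8 + 2 and 8 + 3 are hypothetical widths.
for parity in (1, 2, 3):
    frac = usable_fraction(8, parity)
    print(f"8 + {parity}: width {stripe_width(8, parity)}, usable {float(frac):.1%}")
```

With 8 + 1, one fragment in nine is parity, so the layout tolerates the loss of one fragment per stripe at a capacity cost of about 11%.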

Network Configuration

| Item No | Network Type | Number of Ports Used | Notes |
|---|---|---|---|
| 1 | 10 Gigabit Ethernet | 200 | Two 10GE network ports per OceanStor 9000 P Node are used for internal connections, with MTU size 9000. |
| 2 | 10 Gigabit Ethernet | 100 | One 10GE network port per OceanStor 9000 P Node is used for external connections, with MTU size 1500. |

Network Configuration Notes

All network interfaces were connected to the 10GE network switch (Huawei CE12800).

Benchmark Network

On each client, four 10GE interfaces are bonded into one port, with MTU size 1500.
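The port counts in this report can be added up against the 400-port switch. The sketch below assumes that the bonded interfaces of all 20 load generators connect to the same CE12800 switch as the storage nodes; the report says all network interfaces were connected to that switch but does not break the count down this way. All names are illustrative.

```python
# Rough port budget for the single 10GE switch.
# Assumption: the 20 load generators (4 bonded 10GE interfaces each) share the
# same 400-port switch as the 100 P Nodes (2 internal + 1 external port each).

NODES = 100
INTERNAL_PORTS_PER_NODE = 2
EXTERNAL_PORTS_PER_NODE = 1
CLIENTS = 20
BONDED_PORTS_PER_CLIENT = 4
SWITCH_PORTS = 400


def ports_in_use():
    """Return (storage-side ports, client-side ports)."""
    storage = NODES * (INTERNAL_PORTS_PER_NODE + EXTERNAL_PORTS_PER_NODE)
    clients = CLIENTS * BONDED_PORTS_PER_CLIENT
    return storage, clients


storage, clients = ports_in_use()
print(f"storage: {storage}, clients: {clients}, "
      f"free: {SWITCH_PORTS - storage - clients}")
```

Under that assumption the testbed uses 380 of the 400 switch ports.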

Processing Elements

| Item No | Qty | Type | Description | Processing Function |
|---|---|---|---|---|
| 1 | 200 | CPU | Intel Six-Core Xeon Processor E5-2420, 1.9GHz, 15MB L3 cache | WushanFS, NFS, TCP/IP |

Processing Element Notes

Each OceanStor 9000 P Node has 2 physical processors, and each processor has 6 physical cores. Hyperthreading is enabled, yielding 24 hardware threads per node.

Memory

| Description | Size in GB | Number of Instances | Total GB | Nonvolatile |
|---|---|---|---|---|
| OceanStor 9000 P Node System Memory | 96 | 100 | 9600 | V |
| NVDIMM Non-volatile Memory on PCIe adapter, used to provide stable storage for writes not yet committed to disk. | 2 | 100 | 200 | NV |
| Grand Total Memory Gigabytes | | | 9800 | |

Memory Notes

Each OceanStor 9000 P Node has main memory for the operating system and filesystem data. A separate NVDIMM module is used to provide stable storage for writes not yet committed to disk.

Stable Storage

The NVDIMM in each OceanStor 9000 P Node stores and journals writes until they can be flushed to the disks. Each NVDIMM has a capacitor and 2GB flash.

Data in the NVDIMM is flushed to flash using capacitor power (up to 25 seconds) when node power fails.

Writes are ACKed to the client once they have been written and journaled in the NVDIMM.

When the system comes back up after an outage, the journal in the NVDIMM flash is replayed.

In the event of capacitor failure, data is no longer journaled in the NVDIMM, but is written to disks on a write-through basis.
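The write path described above can be summarized as a small state model. The class below is a toy sketch for illustration only, not Huawei's implementation: the class name, method names and list-based storage are all hypothetical, and it models a single node with no timing or erasure coding.

```python
# Toy model of the stable-storage behaviour described above (hypothetical names).


class PNodeWritePath:
    def __init__(self):
        self.capacitor_ok = True
        self.journal = []  # writes held in the NVDIMM, not yet on disk
        self.flash = []    # NVDIMM flash, filled only on power failure
        self.disk = []     # data committed to the disks

    def write(self, data):
        """Make one write stable and report how, i.e. what precedes the ACK."""
        if self.capacitor_ok:
            self.journal.append(data)
            return "journaled"
        # Capacitor failed: bypass the NVDIMM and write through to disk.
        self.disk.append(data)
        return "write-through"

    def flush(self):
        """Normal operation: move journaled writes to the disks."""
        self.disk.extend(self.journal)
        self.journal.clear()

    def power_fail(self):
        """Power loss: capacitor power copies the journal into NVDIMM flash."""
        self.flash = list(self.journal)
        self.journal.clear()

    def recover(self):
        """Restart: replay the journal saved in flash onto the disks."""
        self.disk.extend(self.flash)
        self.flash.clear()


node = PNodeWritePath()
print(node.write("block-1"))  # acknowledged after journaling
node.power_fail()
node.recover()
print(node.disk)              # the journaled write survives the outage
```

The point of the model is that an acknowledged write always exists in one of three places: the NVDIMM journal, the NVDIMM flash, or the disks.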

System Under Test Configuration Notes

The system under test consists of 100 2U OceanStor 9000 P Nodes. Each P Node has 3 10GE ports connected to a 10GE switch. One port is used for external connections; the other two are used internally.

Other System Notes

Test Environment Bill of Materials

| Item No | Qty | Vendor | Model/Name | Description |
|---|---|---|---|---|
| 1 | 20 | Huawei | Tecal RH2285 | 48GB RAM Server running SUSE Linux Enterprise Server 11 SP1 |

Load Generators

| | |
|---|---|
| LG Type Name | Tecal RH2285 |
| BOM Item # | 1 |
| Processor Name | Intel(R) Xeon(R) E5620 |
| Processor Speed | 2.4 GHz |
| Number of Processors (chips) | 2 |
| Number of Cores/Chip | 4 |
| Memory Size | 48 GB |
| Operating System | SUSE Linux Enterprise Server 11 SP1 |
| Network Type | 10 Gigabit Ethernet |

Load Generator (LG) Configuration

Benchmark Parameters

| | |
|---|---|
| Network Attached Storage Type | NFS V3 |
| Number of Load Generators | 20 |
| Number of Processes per LG | 900 |
| Biod Max Read Setting | 2 |
| Biod Max Write Setting | 2 |
| Block Size | AUTO |

Testbed Configuration

| LG No | LG Type | Network | Target Filesystems | Notes |
|---|---|---|---|---|
| 1..20 | LG1 | 10 Gigabit Ethernet | Single filesystem named /fs | N/A |

Load Generator Configuration Notes

All clients are connected to the single filesystem /fs through all OceanStor 9000 P Nodes.

Uniform Access Rule Compliance

Each load-generating client hosts 900 processes. The assignment of processes to network interfaces is done such that they are evenly divided across all network paths to the OceanStor 9000 P Nodes.
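One way to picture the even division is a round-robin mapping of each client's processes onto the 100 P Nodes. The sketch below is an assumption for illustration: the report states only that processes are evenly divided across network paths, not how the assignment is made, and the names used here are hypothetical.

```python
# Illustrative round-robin assignment of load-generator processes to P Nodes.
# Assumption: each client spreads its processes over the nodes in order; the
# report only states that the division is even.
from collections import Counter

CLIENTS = 20
PROCESSES_PER_CLIENT = 900
NODES = 100


def assign_processes():
    """Map (client, process) to a node index, round-robin within each client."""
    return {
        (client, proc): proc % NODES
        for client in range(CLIENTS)
        for proc in range(PROCESSES_PER_CLIENT)
    }


load = Counter(assign_processes().values())
print(f"processes per node: min {min(load.values())}, max {max(load.values())}")
```

With 900 processes per client and 100 nodes, each client places 9 processes on every node, so each node serves 180 of the 18000 processes.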

Other Notes

Config Diagrams

Generated on Mon Dec 09 16:29:32 2013 by SPECsfs2008 HTML Formatter

Copyright © 1997-2008 Standard Performance Evaluation Corporation

First published at SPEC.org on 29-Nov-2013