Preparing Workload Images¶

SPEC Cloud IaaS 2016 Benchmark has two workloads, namely, YCSB and K-Means.

[For development only]

If your company is a member of SPEC, you can download the workload images for Cassandra/YCSB and Hadoop/KMeans from SPEC Miami site. These images are not available to benchmark licensees or general public.

CentOS:: Cassandra/YCSB:

image:

https://spec.cs.miami.edu/private/osg/cloud/images/latest/cb_speccloud_cassandra_2111_centos.qcow2

md5sum:

https://spec.cs.miami.edu/private/osg/cloud/images/latest/cb_speccloud_cassandra_2111_centos.qcow2.md5

- K-Means::

Ubuntu:: Cassandra/YCSB:

image:

https://spec.cs.miami.edu/private/osg/cloud/images/latest/cb_speccloud_cassandra_2111_ubuntu1404.qcow2

md5sum:

https://spec.cs.miami.edu/private/osg/cloud/images/latest/cb_speccloud_cassandra_2111_ubuntu1404.qcow2.md5

- K-Means::

image: https://spec.cs.miami.edu/private/osg/cloud/images/latest/cb_speccloud_hadoop_271_ubuntu1404.qcow2

Timezone¶

It is highly recommended to configure UTC timezone on all instance images and ensure that the timezone of instances and the benchmark harness machine matches. The configuration of a timezone can be specific to a *Nix distribution.

Tips for Preparing Workload Images¶

It is recommended that Cassandra and Hadoop be setup in different images. The following process may be followed:

- Create a common image.

- Create an instance from common image and install Cassandra and YCSB. Take a snapshot of this instance.

- Create another instance from common image and install Hadoop and KMeans. Take a snapshot of this instance.

(x86_64/Ubuntu/Trusty/QCOW2) - Common workload image¶

CBTOOL github wiki provides instructions on how to prepare a workload image for your cloud:

https://github.com/ibmcb/cbtool/wiki/HOWTO:-Preparing-a-VM-to-be-used-with-CBTOOL-on-a-real-cloud

The instructions below can be used to prepare a base qcow2 image for Cassandra or KMeans workloads.

- Download an Ubuntu trust image:

Upload the image in your cloud. The instructions for uploading the image are specific to each cloud.

Start with an Ubuntu Trust VM:

ssh -i YOURKEY.PEM ubuntu\@yourVMIP

Add IP address and hostname of your VM in /etc/hosts file:

vi /etc/hosts IPADDR HOSTNAME

Update with latest packages and git:

sudo apt-get update sudo apt-get install git -y

Create a cbuser Linux account:

sudo adduser cbuser Set password as cbuser

Give it password-less access:

sudo visudo Add the following line. cbuser ALL=(ALL:ALL) NOPASSWD: ALL

Switch to this user:

su cbuser (password should be cbuser) cd ~/

unzip the kit and move osgcloud into home directory:

cd ~/ unzip spec_cloud_iaas*.zip mv spec_cloud_iaas*/osgcloud ~/

Add the key from CBTOOL into the authorized_keys file of this VM.

To avoid permissions vagaries for .ssh directory, generate SSH key. The newly created public and private keys will be discarded when they are overwritten with CBTOOL keys:

ssh-keygen [press ENTER for all options]The above command will create the following directory and add id_rsa and id_rsa.pub keys into it. Now copy keys from CBTOOL machine which are present in the following directories on CBTOOL machine:

cd ~/osgcloud/cbtool/credentials ls cbtool_rsa cbtool_rsa.pubCopy these keys into .ssh directory of this VM:

echo CBTOOLRSA > ~/.ssh/id_rsa echo CBTOOLRSA.PUB > ~/.ssh/id_rsa.pub echo CBTOOLRSA.PUB >> ~/.ssh/authorized_keysAdjust the permissions:

chmod 400 ~/.ssh/id_rsa chmod 400 ~/.ssh/id_rsa.pub chmod 400 ~/.ssh/authorized_keys

Install ntp package:

sudo apt-get install ntp -y

Test ssh connectivity to your VM from benchmark harness machine:

ssh -i /home/ubuntu/osgcloud/cbtool/credentials/cbtool_rsa cbuser@YOURVMIP

If this works, cloudbench can do a key-based ssh in this VM.

Search for UseDNS in /etc/ssh/sshd_config. If it does not exist, insert the following line in /etc/ssh/sshd_config:

vi /etc/ssh/sshd_config UseDNS no

Check /home/cbuser/.ssh/config

If it containers a line:

-e UserKnownHostsFile=/dev/null

Remove the “-e ” and save the file.

Install cloud-init. Not all clouds may support cloud-init. In this case, skip step 14 and 15.:

sudo apt-get install cloud-init

Configure cloud-init.

Since the instructions use cbuser as the Linux user, it is important that keys are injected in this user by cloud-init.:

Add the following in ``/etc/cloud/cloud.cfg``

runcmd:

- [ sh, -c, cp -rf /home/ubuntu/.ssh/authorized_keys /home/cbuser/.ssh/authorized_keys ]

Then:

sudo dpkg-reconfigure cloud-init

The cloud-init may not always work with your cloud. Setup password-based ssh for the VM:

vi /etc/ssh/sshd_config PasswordAuthentication yes

18. Install null workload. This will install all CBTOOL dependencies for the workload image. Then you can install the workloads by following platform specific instructions:

cd /home/cbuser/osgcloud/

cbtool/install -r workload --wks nullworkload

If there are any errors, rerun the command until it exits without any errors. A successful output should return the following:

“All dependencies are in place”

19. Remove the hostname added in /etc/hosts and take a snapshot of this VM. The snapshot instructions vary per cloud. You can then use this image to prepare workload images.

(x86_64/Ubuntu/Trusty/QCOW2) - Cassandra and YCSB¶

These instructions assume that you are starting with the common workload image created earlier.

Cassandra and YCSB are installed on the same machine.

Installing Cassandra¶

The Cassandra debian package is present in the kit. Install it as follows:

~/osgcloud/workloads/cassandra/

sudo apt-get install openjdk-7-jdk -y

sudo dpkg -i workloads/cassandra/cassandra_2.1.11_all.deb

Alternatively, install Cassandra 2.1.11 from Cassandra repository (these instructions are taken from Cassandra Apache ):

vi /etc/apt/sources.list.d/cassandra.sources.list

deb http://www.apache.org/dist/cassandra/debian 21x main

deb-src http://www.apache.org/dist/cassandra/debian 21x main

If you run apt-get update now, you will see an error similar to this:

GPG error: http://www.apache.org unstable Release: The following signatures couldn't be verified because the public key is not available: NO_PUBKEY F758CE318D77295D

This simply means you need to add the PUBLIC_KEY. You do that like this:

gpg --keyserver pgp.mit.edu --recv-keys F758CE318D77295D

gpg --export --armor F758CE318D77295D | sudo apt-key add -

Starting with the 0.7.5 debian package, you will also need to add public key 2B5C1B00 using the same commands as above:

gpg --keyserver pgp.mit.edu --recv-keys 2B5C1B00

gpg --export --armor 2B5C1B00 | sudo apt-key add -

You will also need to add public key 0353B12C using the same commands as above:

gpg --keyserver pgp.mit.edu --recv-keys 0353B12C

gpg --export --armor 0353B12C | sudo apt-key add -

Then you may install Cassandra by doing:

sudo apt-get update

sudo apt-get install cassandra -y

Check Cassandra version, make sure that it is 2.1.11:

sudo dpkg -l | grep cassandra

Installing YCSB¶

YCSB ships as part of the kit. It is present in the following directory in the kit:

~/workloads/ycsb/ycsb-0.4.0.tar.gz

Uncompress the file:

tar -xzvf ~/workloads/ycsb/ycsb-0.4.0.tar.gz

Move to home directory and rename:

mv ycsb-0.4.0 ~/YCSB

Capture the VM using your cloud capture tools (snapshot etc)

(x86_64/Ubuntu/Trusty/QCOW2) - KMeans and Hadoop¶

The instructions below specify how to set up Hadoop (2.7.1) and how to install HiBench and Mahout that ship with the kit.

Acknowledgements:

http://www.bogotobogo.com/Hadoop/BigData_hadoop_Install_on_ubuntu_single_node_cluster.php

Create a VM from the common image prepared earlier.

ssh into your VM:

ssh -i YOURKEYPEM cbuser@YOURVMIP

Make use that YOURKEYPEM will be common for both YCSB and K-Means VM images.

Install Java and check Java version:

sudo apt-get install openjdk-7-jdk -y java -version java version "1.7.0_75" OpenJDK Runtime Environment (IcedTea 2.5.4) (7u75-2.5.4-1~trusty1) OpenJDK 64-Bit Server VM (build 24.75-b04, mixed mode)Create hadoop group:

sudo addgroup hadoop

Add cbuser to hadoop group:

sudo usermod -a -G hadoop cbuser

Test ssh to localhost is working without password:

ssh localhost

If this does not work, add your private key to /home/cbuser/.ssh/ . The name of key file is id_rsa

Get Hadoop 2.7.1 from kit or download from Apache website:

cd /home/cbuser From kit cp workloads/hadoop/hadoop-2.7.1.tar.gz . From website wget http://mirror.symnds.com/software/Apache/hadoop/common/hadoop-2.7.1/hadoop-2.7.1.tar.gz tar -xzvf hadoop-2.7.1.tar.gz

Move hadoop to /usr/local/hadoop directory:

sudo mv hadoop-2.7.1 /usr/local/hadoop sudo chown -R cbuser:hadoop /usr/local/hadoop ls -al /usr/local/hadoop total 60 drwxr-xr-x 9 cbuser hadoop 4096 Nov 13 21:20 . drwxr-xr-x 11 root root 4096 Feb 9 00:34 .. drwxr-xr-x 2 cbuser hadoop 4096 Nov 13 21:20 bin drwxr-xr-x 3 cbuser hadoop 4096 Nov 13 21:20 etc drwxr-xr-x 2 cbuser hadoop 4096 Nov 13 21:20 include drwxr-xr-x 3 cbuser hadoop 4096 Nov 13 21:20 lib drwxr-xr-x 2 cbuser hadoop 4096 Nov 13 21:20 libexec -rw-r--r-- 1 cbuser hadoop 15429 Nov 13 21:20 LICENSE.txt -rw-r--r-- 1 cbuser hadoop 101 Nov 13 21:20 NOTICE.txt -rw-r--r-- 1 cbuser hadoop 1366 Nov 13 21:20 README.txt drwxr-xr-x 2 cbuser hadoop 4096 Nov 13 21:20 sbin drwxr-xr-x 4 cbuser hadoop 4096 Nov 13 21:20 share

Remove the downloaded Hadoop image:

rm -f hadoop-2.7.1.tar.gz

Setup configuration¶

Instructions for setup:

- Add environment variables in ~/.bashrc

Before editing the .bashrc file in our home directory, we need to find the path where Java has been installed to set the JAVA_HOME environment variable using the following command:

update-alternatives --config java There is only one alternative in link group java (providing /usr/bin/java): /usr/lib/jvm/java-7-openjdk-amd64/jre/bin/javaNow we can append the following to the end of ~/.bashrc:

export JAVA_HOME=/usr/lib/jvm/java-7-openjdk-amd64 export HADOOP_INSTALL=/usr/local/hadoop export PATH=$PATH:$HADOOP_INSTALL/bin export PATH=$PATH:$HADOOP_INSTALL/sbin export HADOOP_MAPRED_HOME=$HADOOP_INSTALL export HADOOP_COMMON_HOME=$HADOOP_INSTALL export HADOOP_HDFS_HOME=$HADOOP_INSTALL export YARN_HOME=$HADOOP_INSTALL export HADOOP_HOME=$HADOOP_COMMON_HOME export HADOOP_COMMON_LIB_NATIVE_DIR=$HADOOP_INSTALL/lib/native export HADOOP_OPTS="-Djava.library.path=$HADOOP_INSTALL/lib"

- /usr/local/hadoop/etc/hadoop/hadoop-env.sh

Set JAVA_HOME in hadoop-env.sh modifying hadoop-env.sh file:

export JAVA_HOME=/usr/lib/jvm/java-7-openjdk-amd64Comment the following line:

#export JAVA_HOME=${JAVA_HOME}Adding the above statement in the hadoop-env.sh file ensures that the value of JAVA_HOME variable will be available to Hadoop whenever it is started up.

- /usr/local/hadoop/etc/hadoop/core-site.xml

The /usr/local/hadoop/etc/hadoop/core-site.xml file contains configuration properties that Hadoop uses when starting up. This file can be used to override the default settings that Hadoop starts with:

sudo mkdir -p /app/hadoop/tmp sudo chown cbuser:hadoop /app/hadoop/tmpOpen the file and enter the following in between the <configuration></configuration> tag:

<configuration> <property> <name>hadoop.tmp.dir</name> <value>/app/hadoop/tmp</value> <description>A base for other temporary directories.</description> </property> <property> <name>fs.default.name</name> <value>hdfs://localhost:54310</value> <description>The name of the default file system. A URI whose scheme and authority determine the FileSystem implementation. The uri's scheme determines the config property (fs.SCHEME.impl) naming the FileSystem implementation class. The uri's authority is used to determine the host, port, etc. for a filesystem.</description> </property> </configuration>Make sure that <configuration> tag is not duplicated.

- /usr/local/hadoop/etc/hadoop/mapred-site.xml

By default, the /usr/local/hadoop/etc/hadoop/ folder contains the /usr/local/hadoop/etc/hadoop/mapred-site.xml.template file which has to be renamed/copied with the name mapred-site.xml:

cp /usr/local/hadoop/etc/hadoop/mapred-site.xml.template /usr/local/hadoop/etc/hadoop/mapred-site.xmlThe mapred-site.xml file is used to specify which framework is being used for MapReduce. We need to enter the following content in between the <configuration></configuration> tag:

<configuration> <property> <name>mapred.job.tracker</name> <value>localhost:54311</value> <description>The host and port that the MapReduce job tracker runs at. If "local", then jobs are run in-process as a single map and reduce task. </description> </property> </configuration>Make sure that <configuration> tag is not duplicated.

- /usr/local/hadoop/etc/hadoop/hdfs-site.xml

The /usr/local/hadoop/etc/hadoop/hdfs-site.xml file needs to be configured for each host in the cluster that is being used. It is used to specify the directories which will be used as the namenode and the datanode on that host.

Before editing this file, we need to create two directories which will contain the namenode and the datanode for this Hadoop installation. This can be done using the following commands:

sudo mkdir -p /usr/local/hadoop_store/hdfs/namenode sudo mkdir -p /usr/local/hadoop_store/hdfs/datanode sudo chown -R cbuser:hadoop /usr/local/hadoop_storeOpen the file and enter the following content in between the <configuration></configuration> tag:

<configuration> <property> <name>dfs.replication</name> <value>3</value> <description>Default block replication. The actual number of replications can be specified when the file is created. The default is used if replication is not specified in create time. </description> </property> <property> <name>dfs.namenode.name.dir</name> <value>file:/usr/local/hadoop_store/hdfs/namenode</value> </property> <property> <name>dfs.datanode.data.dir</name> <value>file:/usr/local/hadoop_store/hdfs/datanode</value> </property> </configuration>Source the bashrc file and ensure that IP address and hostname of this machine is present in /etc/hosts file:

source ~/.bashrc sudo vi /etc/hosts IPADDR HOSTNAMEFormat the new Hadoop file system. If IP address and hostname are not present, the formatting of the file system will fail:

hdfs namenode -format cbuser@cb-speccloud-hadoop-271:~$ hdfs namenode -format 15/11/09 19:51:23 INFO namenode.NameNode: STARTUP_MSG: /************************************************************ STARTUP_MSG: Starting NameNode STARTUP_MSG: host = cb-speccloud-hadoop-271/9.47.240.246 STARTUP_MSG: args = [-format] STARTUP_MSG: version = 2.7.1 STARTUP_MSG: classpath = /usr/local/hadoop/etc/hadoop:/usr/local/hadoop/share/hadoop/common/lib/guava-11.0.2.jar:/usr/local/hadoop/share/hadoop/common/lib/jaxb-api-2.2.2.jar:/usr/local/hadoop/share/hadoop/common/lib/jersey-server-1.9.jar:/usr/local/hadoop/share/hadoop/common/lib/servlet-api-2.5.jar:/usr/local/hadoop/share/hadoop/common/lib/jetty-6.1.26.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-httpclient-3.1.jar:/usr/local/hadoop/share/hadoop/common/lib/jsp-api-2.1.jar:/usr/local/hadoop/share/hadoop/common/lib/jsr305-3.0.0.jar:/usr/local/hadoop/share/hadoop/common/lib/jackson-core-asl-1.9.13.jar:/usr/local/hadoop/share/hadoop/common/lib/jersey-json-1.9.jar:/usr/local/hadoop/share/hadoop/common/lib/protobuf-java-2.5.0.jar:/usr/local/hadoop/share/hadoop/common/lib/xz-1.0.jar:/usr/local/hadoop/share/hadoop/common/lib/xmlenc-0.52.jar:/usr/local/hadoop/share/hadoop/common/lib/netty-3.6.2.Final.jar:/usr/local/hadoop/share/hadoop/common/lib/curator-client-2.7.1.jar:/usr/local/hadoop/share/hadoop/common/lib/httpclient-4.2.5.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-net-3.1.jar:/usr/local/hadoop/share/hadoop/common/lib/apacheds-i18n-2.0.0-M15.jar:/usr/local/hadoop/share/hadoop/common/lib/log4j-1.2.17.jar:/usr/local/hadoop/share/hadoop/common/lib/curator-recipes-2.7.1.jar:/usr/local/hadoop/share/hadoop/common/lib/api-asn1-api-1.0.0-M20.jar:/usr/local/hadoop/share/hadoop/common/lib/jets3t-0.9.0.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-codec-1.4.jar:/usr/local/hadoop/share/hadoop/common/lib/jackson-mapper-asl-1.9.13.jar:/usr/local/hadoop/share/hadoop/common/lib/htrace-core-3.1.0-incubating.jar:/usr/local/hadoop/share/hadoop/common/lib/gson-2.2.4.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-digester-1.8.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-compress-1.4.1.jar:/usr/local/hadoop/share/hadoop/common/lib/slf4j-api-1.7.10.jar:/usr/local/hadoop/share/hadoop/common/lib/jaxb-impl-2.2.3-1.jar:/usr/local/hadoop/share/hadoop/common/lib/snappy-java-1.0.4.1.jar:/usr/local/hadoop/share/hadoop/common/lib/hamcrest-core-1.3.jar:/usr/local/hadoop/share/hadoop/common/lib/avro-1.7.4.jar:/usr/local/hadoop/share/hadoop/common/lib/jackson-xc-1.9.13.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-beanutils-1.7.0.jar:/usr/local/hadoop/share/hadoop/common/lib/api-util-1.0.0-M20.jar:/usr/local/hadoop/share/hadoop/common/lib/zookeeper-3.4.6.jar:/usr/local/hadoop/share/hadoop/common/lib/hadoop-annotations-2.7.1.jar:/usr/local/hadoop/share/hadoop/common/lib/paranamer-2.3.jar:/usr/local/hadoop/share/hadoop/common/lib/jersey-core-1.9.jar:/usr/local/hadoop/share/hadoop/common/lib/junit-4.11.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-lang-2.6.jar:/usr/local/hadoop/share/hadoop/common/lib/jsch-0.1.42.jar:/usr/local/hadoop/share/hadoop/common/lib/slf4j-log4j12-1.7.10.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-configuration-1.6.jar:/usr/local/hadoop/share/hadoop/common/lib/activation-1.1.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-collections-3.2.1.jar:/usr/local/hadoop/share/hadoop/common/lib/java-xmlbuilder-0.4.jar:/usr/local/hadoop/share/hadoop/common/lib/curator-framework-2.7.1.jar:/usr/local/hadoop/share/hadoop/common/lib/apacheds-kerberos-codec-2.0.0-M15.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-logging-1.1.3.jar:/usr/local/hadoop/share/hadoop/common/lib/stax-api-1.0-2.jar:/usr/local/hadoop/share/hadoop/common/lib/jettison-1.1.jar:/usr/local/hadoop/share/hadoop/common/lib/mockito-all-1.8.5.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-math3-3.1.1.jar:/usr/local/hadoop/share/hadoop/common/lib/jackson-jaxrs-1.9.13.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-io-2.4.jar:/usr/local/hadoop/share/hadoop/common/lib/jetty-util-6.1.26.jar:/usr/local/hadoop/share/hadoop/common/lib/httpcore-4.2.5.jar:/usr/local/hadoop/share/hadoop/common/lib/asm-3.2.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-cli-1.2.jar:/usr/local/hadoop/share/hadoop/common/lib/hadoop-auth-2.7.1.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-beanutils-core-1.8.0.jar:/usr/local/hadoop/share/hadoop/common/hadoop-nfs-2.7.1.jar:/usr/local/hadoop/share/hadoop/common/hadoop-common-2.7.1-tests.jar:/usr/local/hadoop/share/hadoop/common/hadoop-common-2.7.1.jar:/usr/local/hadoop/share/hadoop/hdfs:/usr/local/hadoop/share/hadoop/hdfs/lib/guava-11.0.2.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/netty-all-4.0.23.Final.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/jersey-server-1.9.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/servlet-api-2.5.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/jetty-6.1.26.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/jsr305-3.0.0.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/jackson-core-asl-1.9.13.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/protobuf-java-2.5.0.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/xmlenc-0.52.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/netty-3.6.2.Final.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/xml-apis-1.3.04.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/log4j-1.2.17.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/commons-codec-1.4.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/jackson-mapper-asl-1.9.13.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/htrace-core-3.1.0-incubating.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/commons-daemon-1.0.13.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/leveldbjni-all-1.8.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/xercesImpl-2.9.1.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/jersey-core-1.9.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/commons-lang-2.6.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/commons-logging-1.1.3.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/commons-io-2.4.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/jetty-util-6.1.26.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/asm-3.2.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/commons-cli-1.2.jar:/usr/local/hadoop/share/hadoop/hdfs/hadoop-hdfs-2.7.1.jar:/usr/local/hadoop/share/hadoop/hdfs/hadoop-hdfs-2.7.1-tests.jar:/usr/local/hadoop/share/hadoop/hdfs/hadoop-hdfs-nfs-2.7.1.jar:/usr/local/hadoop/share/hadoop/yarn/lib/guava-11.0.2.jar:/usr/local/hadoop/share/hadoop/yarn/lib/jaxb-api-2.2.2.jar:/usr/local/hadoop/share/hadoop/yarn/lib/jersey-server-1.9.jar:/usr/local/hadoop/share/hadoop/yarn/lib/servlet-api-2.5.jar:/usr/local/hadoop/share/hadoop/yarn/lib/jetty-6.1.26.jar:/usr/local/hadoop/share/hadoop/yarn/lib/jsr305-3.0.0.jar:/usr/local/hadoop/share/hadoop/yarn/lib/jackson-core-asl-1.9.13.jar:/usr/local/hadoop/share/hadoop/yarn/lib/jersey-json-1.9.jar:/usr/local/hadoop/share/hadoop/yarn/lib/protobuf-java-2.5.0.jar:/usr/local/hadoop/share/hadoop/yarn/lib/xz-1.0.jar:/usr/local/hadoop/share/hadoop/yarn/lib/netty-3.6.2.Final.jar:/usr/local/hadoop/share/hadoop/yarn/lib/jersey-client-1.9.jar:/usr/local/hadoop/share/hadoop/yarn/lib/guice-servlet-3.0.jar:/usr/local/hadoop/share/hadoop/yarn/lib/log4j-1.2.17.jar:/usr/local/hadoop/share/hadoop/yarn/lib/aopalliance-1.0.jar:/usr/local/hadoop/share/hadoop/yarn/lib/commons-codec-1.4.jar:/usr/local/hadoop/share/hadoop/yarn/lib/jackson-mapper-asl-1.9.13.jar:/usr/local/hadoop/share/hadoop/yarn/lib/guice-3.0.jar:/usr/local/hadoop/share/hadoop/yarn/lib/commons-compress-1.4.1.jar:/usr/local/hadoop/share/hadoop/yarn/lib/leveldbjni-all-1.8.jar:/usr/local/hadoop/share/hadoop/yarn/lib/jaxb-impl-2.2.3-1.jar:/usr/local/hadoop/share/hadoop/yarn/lib/jackson-xc-1.9.13.jar:/usr/local/hadoop/share/hadoop/yarn/lib/zookeeper-3.4.6.jar:/usr/local/hadoop/share/hadoop/yarn/lib/javax.inject-1.jar:/usr/local/hadoop/share/hadoop/yarn/lib/jersey-core-1.9.jar:/usr/local/hadoop/share/hadoop/yarn/lib/commons-lang-2.6.jar:/usr/local/hadoop/share/hadoop/yarn/lib/jersey-guice-1.9.jar:/usr/local/hadoop/share/hadoop/yarn/lib/activation-1.1.jar:/usr/local/hadoop/share/hadoop/yarn/lib/commons-collections-3.2.1.jar:/usr/local/hadoop/share/hadoop/yarn/lib/commons-logging-1.1.3.jar:/usr/local/hadoop/share/hadoop/yarn/lib/stax-api-1.0-2.jar:/usr/local/hadoop/share/hadoop/yarn/lib/jettison-1.1.jar:/usr/local/hadoop/share/hadoop/yarn/lib/jackson-jaxrs-1.9.13.jar:/usr/local/hadoop/share/hadoop/yarn/lib/commons-io-2.4.jar:/usr/local/hadoop/share/hadoop/yarn/lib/jetty-util-6.1.26.jar:/usr/local/hadoop/share/hadoop/yarn/lib/zookeeper-3.4.6-tests.jar:/usr/local/hadoop/share/hadoop/yarn/lib/asm-3.2.jar:/usr/local/hadoop/share/hadoop/yarn/lib/commons-cli-1.2.jar:/usr/local/hadoop/share/hadoop/yarn/hadoop-yarn-applications-distributedshell-2.7.1.jar:/usr/local/hadoop/share/hadoop/yarn/hadoop-yarn-server-sharedcachemanager-2.7.1.jar:/usr/local/hadoop/share/hadoop/yarn/hadoop-yarn-registry-2.7.1.jar:/usr/local/hadoop/share/hadoop/yarn/hadoop-yarn-client-2.7.1.jar:/usr/local/hadoop/share/hadoop/yarn/hadoop-yarn-common-2.7.1.jar:/usr/local/hadoop/share/hadoop/yarn/hadoop-yarn-server-nodemanager-2.7.1.jar:/usr/local/hadoop/share/hadoop/yarn/hadoop-yarn-server-applicationhistoryservice-2.7.1.jar:/usr/local/hadoop/share/hadoop/yarn/hadoop-yarn-server-resourcemanager-2.7.1.jar:/usr/local/hadoop/share/hadoop/yarn/hadoop-yarn-server-web-proxy-2.7.1.jar:/usr/local/hadoop/share/hadoop/yarn/hadoop-yarn-server-common-2.7.1.jar:/usr/local/hadoop/share/hadoop/yarn/hadoop-yarn-applications-unmanaged-am-launcher-2.7.1.jar:/usr/local/hadoop/share/hadoop/yarn/hadoop-yarn-server-tests-2.7.1.jar:/usr/local/hadoop/share/hadoop/yarn/hadoop-yarn-api-2.7.1.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/jersey-server-1.9.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/jackson-core-asl-1.9.13.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/protobuf-java-2.5.0.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/xz-1.0.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/netty-3.6.2.Final.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/guice-servlet-3.0.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/log4j-1.2.17.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/aopalliance-1.0.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/jackson-mapper-asl-1.9.13.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/guice-3.0.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/commons-compress-1.4.1.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/leveldbjni-all-1.8.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/snappy-java-1.0.4.1.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/hamcrest-core-1.3.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/avro-1.7.4.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/hadoop-annotations-2.7.1.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/paranamer-2.3.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/javax.inject-1.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/jersey-core-1.9.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/junit-4.11.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/jersey-guice-1.9.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/commons-io-2.4.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/asm-3.2.jar:/usr/local/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-core-2.7.1.jar:/usr/local/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-common-2.7.1.jar:/usr/local/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-hs-plugins-2.7.1.jar:/usr/local/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-jobclient-2.7.1-tests.jar:/usr/local/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-jobclient-2.7.1.jar:/usr/local/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-app-2.7.1.jar:/usr/local/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-hs-2.7.1.jar:/usr/local/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.7.1.jar:/usr/local/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-shuffle-2.7.1.jar:/usr/local/hadoop/contrib/capacity-scheduler/*.jar STARTUP_MSG: build = https://git-wip-us.apache.org/repos/asf/hadoop.git -r 15ecc87ccf4a0228f35af08fc56de536e6ce657a; compiled by 'jenkins' on 2015-06-29T06:04Z STARTUP_MSG: java = 1.7.0_85 ************************************************************/ 15/11/09 19:51:23 INFO namenode.NameNode: registered UNIX signal handlers for [TERM, HUP, INT] 15/11/09 19:51:23 INFO namenode.NameNode: createNameNode [-format] 15/11/09 19:51:24 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable Formatting using clusterid: CID-3f4591aa-1384-445c-afb1-c449690028b3 15/11/09 19:51:24 INFO namenode.FSNamesystem: No KeyProvider found. 15/11/09 19:51:24 INFO namenode.FSNamesystem: fsLock is fair:true 15/11/09 19:51:24 INFO blockmanagement.DatanodeManager: dfs.block.invalidate.limit=1000 15/11/09 19:51:24 INFO blockmanagement.DatanodeManager: dfs.namenode.datanode.registration.ip-hostname-check=true 15/11/09 19:51:24 INFO blockmanagement.BlockManager: dfs.namenode.startup.delay.block.deletion.sec is set to 000:00:00:00.000 15/11/09 19:51:24 INFO blockmanagement.BlockManager: The block deletion will start around 2015 Nov 09 19:51:24 15/11/09 19:51:24 INFO util.GSet: Computing capacity for map BlocksMap 15/11/09 19:51:24 INFO util.GSet: VM type = 64-bit 15/11/09 19:51:24 INFO util.GSet: 2.0% max memory 889 MB = 17.8 MB 15/11/09 19:51:24 INFO util.GSet: capacity = 2^21 = 2097152 entries 15/11/09 19:51:24 INFO blockmanagement.BlockManager: dfs.block.access.token.enable=false 15/11/09 19:51:24 INFO blockmanagement.BlockManager: defaultReplication = 3 15/11/09 19:51:24 INFO blockmanagement.BlockManager: maxReplication = 512 15/11/09 19:51:24 INFO blockmanagement.BlockManager: minReplication = 1 15/11/09 19:51:24 INFO blockmanagement.BlockManager: maxReplicationStreams = 2 15/11/09 19:51:24 INFO blockmanagement.BlockManager: shouldCheckForEnoughRacks = false 15/11/09 19:51:24 INFO blockmanagement.BlockManager: replicationRecheckInterval = 3000 15/11/09 19:51:24 INFO blockmanagement.BlockManager: encryptDataTransfer = false 15/11/09 19:51:24 INFO blockmanagement.BlockManager: maxNumBlocksToLog = 1000 15/11/09 19:51:24 INFO namenode.FSNamesystem: fsOwner = cbuser (auth:SIMPLE) 15/11/09 19:51:24 INFO namenode.FSNamesystem: supergroup = supergroup 15/11/09 19:51:24 INFO namenode.FSNamesystem: isPermissionEnabled = true 15/11/09 19:51:24 INFO namenode.FSNamesystem: HA Enabled: false 15/11/09 19:51:24 INFO namenode.FSNamesystem: Append Enabled: true 15/11/09 19:51:24 INFO util.GSet: Computing capacity for map INodeMap 15/11/09 19:51:24 INFO util.GSet: VM type = 64-bit 15/11/09 19:51:24 INFO util.GSet: 1.0% max memory 889 MB = 8.9 MB 15/11/09 19:51:24 INFO util.GSet: capacity = 2^20 = 1048576 entries 15/11/09 19:51:24 INFO namenode.FSDirectory: ACLs enabled? false 15/11/09 19:51:24 INFO namenode.FSDirectory: XAttrs enabled? true 15/11/09 19:51:24 INFO namenode.FSDirectory: Maximum size of an xattr: 16384 15/11/09 19:51:24 INFO namenode.NameNode: Caching file names occuring more than 10 times 15/11/09 19:51:24 INFO util.GSet: Computing capacity for map cachedBlocks 15/11/09 19:51:24 INFO util.GSet: VM type = 64-bit 15/11/09 19:51:24 INFO util.GSet: 0.25% max memory 889 MB = 2.2 MB 15/11/09 19:51:24 INFO util.GSet: capacity = 2^18 = 262144 entries 15/11/09 19:51:24 INFO namenode.FSNamesystem: dfs.namenode.safemode.threshold-pct = 0.9990000128746033 15/11/09 19:51:24 INFO namenode.FSNamesystem: dfs.namenode.safemode.min.datanodes = 0 15/11/09 19:51:24 INFO namenode.FSNamesystem: dfs.namenode.safemode.extension = 30000 15/11/09 19:51:24 INFO metrics.TopMetrics: NNTop conf: dfs.namenode.top.window.num.buckets = 10 15/11/09 19:51:24 INFO metrics.TopMetrics: NNTop conf: dfs.namenode.top.num.users = 10 15/11/09 19:51:24 INFO metrics.TopMetrics: NNTop conf: dfs.namenode.top.windows.minutes = 1,5,25 15/11/09 19:51:24 INFO namenode.FSNamesystem: Retry cache on namenode is enabled 15/11/09 19:51:24 INFO namenode.FSNamesystem: Retry cache will use 0.03 of total heap and retry cache entry expiry time is 600000 millis 15/11/09 19:51:24 INFO util.GSet: Computing capacity for map NameNodeRetryCache 15/11/09 19:51:24 INFO util.GSet: VM type = 64-bit 15/11/09 19:51:24 INFO util.GSet: 0.029999999329447746% max memory 889 MB = 273.1 KB 15/11/09 19:51:24 INFO util.GSet: capacity = 2^15 = 32768 entries Re-format filesystem in Storage Directory /usr/local/hadoop_store/hdfs/namenode ? (Y or N) y 15/11/09 19:51:29 INFO namenode.FSImage: Allocated new BlockPoolId: BP-1085748319-9.47.240.246-1447098689835 15/11/09 19:51:30 INFO common.Storage: Storage directory /usr/local/hadoop_store/hdfs/namenode has been successfully formatted. 15/11/09 19:51:30 INFO namenode.NNStorageRetentionManager: Going to retain 1 images with txid >= 0 15/11/09 19:51:30 INFO util.ExitUtil: Exiting with status 0 15/11/09 19:51:30 INFO namenode.NameNode: SHUTDOWN_MSG: /************************************************************ SHUTDOWN_MSG: Shutting down NameNode at cb-speccloud-hadoop-271/9.47.240.246 ************************************************************/

Get HiBench from kit:

mv ~/KITDIR/workloads/hibench ~/HiBench

Remove the IP address and hostname added to /etc/hosts. Take a snapshot of this VM. The image is ready to be used with the benchmark. It contains CBTOOL depenencies, Hadoop 2.7.1, HiBench 2.0, and Mahout 0.7.

(x86_64/CentOS/7.1/QCOW2) - Common workload image¶

CBTOOL github wiki provides instructions on how to prepare a workload image for your cloud:

https://github.com/ibmcb/cbtool/wiki/HOWTO:-Preparing-a-VM-to-be-used-with-CBTOOL-on-a-real-cloud

The instructions below can be used to prepare a base qcow2 image for Cassandra or KMeans workloads.

Download an centos 7.1 image:

wget http://cloud.centos.org/centos/7/images/CentOS-7-x86_64-GenericCloud.qcow2Upload the image in your cloud. The instructions for uploading the image are specific to each cloud.

Ex: OpenStack cloud Image-creation:

glance image-create --name cb_speccloud_kmeans_centos --disk-format qcow2 --container-format bare --is-public True --file /home/CentOS-7-x86_64-GenericCloud.qcow2Boot VM:

nova boot --image cb_speccloud_kmeans_centos --flavor 3 --nic net-id=623f3faa-66e2-4023-9dee-777eb81c1cc3 --availability-zone nova:kvmhost centos-kmeans --key_name mykeystart VM:

ssh -i YOURKEY.PEM centos@yourVMIPAdd IP address and hostname of your VM in /etc/hosts file:

vi /etc/hosts IPADDR HOSTNAMEUpdate with latest packages and git:

sudo yum update sudo yum install git -y

Create a cbuser account:

sudo adduser cbuser Set password as cbuser

Give it password-less access:

sudo visudo Add the following line. cbuser ALL=(ALL:ALL) NOPASSWD: ALL

Switch to this user:

su cbuser (password should be cbuser) cd ~/

unzip the kit and move osgcloud into home directory:

cd ~/ unzip spec_cloud_iaas*.zip mv spec_cloud_iaas*/osgcloud ~/

if you are using osgclog git instead of kit:

cd ~/ mv osgcloud ~/

Add the key from CBTOOL into the authorized_keys file of this VM.

To avoid permissions vagaries for .ssh directory, generate SSH key. The newly created public and private keys will be discarded when they are overwritten with CBTOOL keys:

ssh-keygen [press ENTER for all options]The above command will create the following directory and add id_rsa and id_rsa.pub keys into it. Now copy keys from CBTOOL machine which are present in the following directories on CBTOOL machine:

cd ~/osgcloud/cbtool/credentials ls cbtool_rsa cbtool_rsa.pubCopy these keys into .ssh directory of this VM:

cat cbtool_rsa > ~/.ssh/id_rsa cat cbtool_rsa.pub > ~/.ssh/id_rsa.pub cat cbtool_rsa.pub >> ~/.ssh/authorized_keysAdjust the permissions:

chmod 400 ~/.ssh/id_rsa chmod 400 ~/.ssh/id_rsa.pub chmod 400 ~/.ssh/authorized_keys

Test ssh connectivity to your VM from cbtool:

ssh -i /home/ubuntu/osgcloud/cbtool/credentials/cbtool_rsa cbuser@YOURVMIP

If this works, cloudbench can do a key-based ssh in this VM.

Search for UseDNS in /etc/ssh/sshd_config. If it does not exist, insert the following line in /etc/ssh/sshd_config:

vi /etc/ssh/sshd_config #UseDNS no

Install null workload. This will install all CBTOOL dependencies for the workload image. Then you can install the workloads by following platform specific instructions:

cd /home/cbuser/osgcloud/ cbtool/install -r workload --wks nullworkload

15. Remove the hostname added in /etc/hosts and take a snapshot of this VM. The snapshot instructions vary per cloud. You can then use this image to prepare workload images.

(x86_64/CentOS/7.1/QCOW2) - Cassandra and YCSB¶

These instructions assume that you are starting with the common workload image created earlier.

Cassandra and YCSB are installed on the same machine.

- Get centos common image created in (x86_64/CentOS/7.1/QCOW2) - Common workload image section and create a vm.

Installing Cassandra¶

Cassandra 2.1.11 package can be obtained from the kit or from datastax site. The rpm package that ships with the kit can be found at:

~/osgcloud/workloads/cassandra/ If user need packages from datastax site, update repo and install:: [cbuser@centos-cassandra ~]$ sudo vim /etc/yum.repos.d/datastax.repo [datastax] name = DataStax Repo for Apache Cassandra baseurl = http://rpm.datastax.com/community enabled = 1 gpgcheck = 0 Install 2.1.11 package:: [cbuser@centos-cassandra ~]$ sudo yum install -y cassandra22-tools-2.1.11.noarch cassandra22-2.1.11.noarch If user need packages shipped from kit:: casssandra and cassandra-tools rpms available here cd ~/workloads/cassandra Install packages:: [cbuser@centos-cassandra ~]$ sudo yum yum install -y cassandra* Start Service:: sudo systemctl start cassandra sudo systemctl status cassandra sudo systemctl enable cassandra

Installing YCSB¶

YCSB ships as part of the kit. It is present in the following directory in the kit:

~/workloads/ycsb/ycsb-0.4.0.tar.gz

Uncompress the file:

tar -xzvf ~/workloads/ycsb/ycsb-0.4.0.tar.gz

Move to home directory and rename:

mv ~/workloads/ycsb/ycsb-0.4.0 ~/YCSB

Capture the VM using your cloud capture tools (snapshot etc) Same image can be used to prepare cassandra & kmeans images.

(x86_64/CentOS/7.1/QCOW2) - KMeans and Hadoop¶

These instructions assume that you are starting with the common workload image created earlier.

The instructions below specify how to set up Hadoop (2.7.1) and how to install HiBench and Mahout that ship with the kit.

Acknowledgements:

http://tecadmin.net/setup-hadoop-2-4-single-node-cluster-on-linux/

Get centos common image created in (x86_64/CentOS/7.1/QCOW2) - Common workload image section and create a vm.

ssh into your VM:

ssh -i YOURKEYPEM cbuser@YOURVMIP Make use that YOURKEYPEM will be common for both YCSB and K-Means VM images.

Install Java:

sudo yum install java-1.7.0-openjdk-1.7.0.91-2.6.2.1.el7_1.x86_64 java version "1.7.0_91" OpenJDK Runtime Environment (rhel-2.6.2.1.el7_1-x86_64 u91-b00) OpenJDK 64-Bit Server VM (build 24.91-b01, mixed mode)

Create hadoop group:

sudo groupadd hadoop

Add cbuser to hadoop group:

sudo usermod -a -G hadoop cbuser

Test ssh to localhost is working without password:

ssh localhost

If this does not work, add your public key to /home/cbuser/.ssh/authorized_keys file.

Get Hadoop 2.7.1 from kit or download from Apache website:

cd /home/cbuser From kit cp workloads/hadoop/hadoop-2.7.1.tar.gz . From website wget http://mirror.symnds.com/software/Apache/hadoop/common/hadoop-2.7.1/hadoop-2.7.1.tar.gz tar -xzvf hadoop-2.7.1.tar.gz

Move hadoop to /usr/local/hadoop directory:

sudo mv hadoop-2.7.1 /usr/local/hadoop sudo chown -R cbuser:hadoop /usr/local/hadoop ls -al /usr/local/hadoop total 44 drwxr-xr-x. 9 cbuser hadoop 4096 Nov 13 2014 . drwxr-xr-x. 14 root root 4096 Sep 15 20:31 .. drwxr-xr-x. 2 cbuser hadoop 4096 Nov 13 2014 bin drwxr-xr-x. 3 cbuser hadoop 19 Nov 13 2014 etc drwxr-xr-x. 2 cbuser hadoop 101 Nov 13 2014 include drwxr-xr-x. 3 cbuser hadoop 19 Nov 13 2014 lib drwxr-xr-x. 2 cbuser hadoop 4096 Nov 13 2014 libexec -rw-r--r--. 1 cbuser hadoop 15429 Nov 13 2014 LICENSE.txt -rw-r--r--. 1 cbuser hadoop 101 Nov 13 2014 NOTICE.txt -rw-r--r--. 1 cbuser hadoop 1366 Nov 13 2014 README.txt drwxr-xr-x. 2 cbuser hadoop 4096 Nov 13 2014 sbin drwxr-xr-x. 4 cbuser hadoop 29 Nov 13 2014 share

Setup configuration¶

Instructions for setup:

- Add environment variables in ~/.bashrc

Before editing the .bashrc file in our home directory, we need to find the path where Java has been installed to set the JAVA_HOME environment variable using the following command:

sudo update-alternatives --config java For example: [cbuser@centos-kmeans ~]$ sudo sudo update-alternatives --config java There is 1 program which provide 'java'. Selection Command ----------------------------------------------- +1 /usr/lib/jvm/java-1.7.0-openjdk-1.7.0.85-2.6.1.2.el7_1.x86_64/jre/bin/java Enter to keep the current selection[+], or type selection number: 1 Now we can append the following to the end of ~/.bashrc:: export JAVA_HOME=/usr/lib/jvm/java-1.7.0-openjdk-1.7.0.85-2.6.1.2.el7_1.x86_64 export HADOOP_INSTALL=/usr/local/hadoop export PATH=$PATH:$HADOOP_INSTALL/bin export PATH=$PATH:$HADOOP_INSTALL/sbin export HADOOP_MAPRED_HOME=$HADOOP_INSTALL export HADOOP_COMMON_HOME=$HADOOP_INSTALL export HADOOP_HDFS_HOME=$HADOOP_INSTALL export YARN_HOME=$HADOOP_INSTALL export HADOOP_HOME=$HADOOP_COMMON_HOME export HADOOP_COMMON_LIB_NATIVE_DIR=$HADOOP_INSTALL/lib/native export HADOOP_OPTS="-Djava.library.path=$HADOOP_INSTALL/lib"

- /usr/local/hadoop/etc/hadoop/hadoop-env.sh

Set JAVA_HOME in hadoop-env.sh modifying hadoop-env.sh file:

export JAVA_HOME=/usr/lib/jvm/java-1.7.0-openjdk-1.7.0.85-2.6.1.2.el7_1.x86_64Adding the above statement in the hadoop-env.sh file ensures that the value of JAVA_HOME variable will be available to Hadoop whenever it is started up.

- /usr/local/hadoop/etc/hadoop/core-site.xml

The /usr/local/hadoop/etc/hadoop/core-site.xml file contains configuration properties that Hadoop uses when starting up. This file can be used to override the default settings that Hadoop starts with:

sudo mkdir -p /app/hadoop/tmp sudo chown cbuser:hadoop /app/hadoop/tmpOpen the file and enter the following in between the <configuration></configuration> tag:

<configuration> <property> <name>hadoop.tmp.dir</name> <value>/app/hadoop/tmp</value> <description>A base for other temporary directories.</description> </property> <property> <name>fs.default.name</name> <value>hdfs://localhost:54310</value> <description>The name of the default file system. A URI whose scheme and authority determine the FileSystem implementation. The uri's scheme determines the config property (fs.SCHEME.impl) naming the FileSystem implementation class. The uri's authority is used to determine the host, port, etc. for a filesystem.</description> </property> </configuration>

- /usr/local/hadoop/etc/hadoop/mapred-site.xml

By default, the /usr/local/hadoop/etc/hadoop/ folder contains the /usr/local/hadoop/etc/hadoop/mapred-site.xml.template file which has to be renamed/copied with the name mapred-site.xml:

cp /usr/local/hadoop/etc/hadoop/mapred-site.xml.template /usr/local/hadoop/etc/hadoop/mapred-site.xmlThe mapred-site.xml file is used to specify which framework is being used for MapReduce. We need to enter the following content in between the <configuration></configuration> tag:

<configuration> <property> <name>mapred.job.tracker</name> <value>localhost:54311</value> <description>The host and port that the MapReduce job tracker runs at. If "local", then jobs are run in-process as a single map and reduce task. </description> </property> </configuration>

- /usr/local/hadoop/etc/hadoop/hdfs-site.xml

The /usr/local/hadoop/etc/hadoop/hdfs-site.xml file needs to be configured for each host in the cluster that is being used. It is used to specify the directories which will be used as the namenode and the datanode on that host.

Before editing this file, we need to create two directories which will contain the namenode and the datanode for this Hadoop installation. This can be done using the following commands:

sudo mkdir -p /usr/local/hadoop_store/hdfs/namenode sudo mkdir -p /usr/local/hadoop_store/hdfs/datanode sudo chown -R cbuser:hadoop /usr/local/hadoop_storeOpen the file and enter the following content in between the <configuration></configuration> tag:

<configuration> <property> <name>dfs.replication</name> <value>3</value> <description>Default block replication. The actual number of replications can be specified when the file is created. The default is used if replication is not specified in create time. </description> </property> <property> <name>dfs.namenode.name.dir</name> <value>file:/usr/local/hadoop_store/hdfs/namenode</value> </property> <property> <name>dfs.datanode.data.dir</name> <value>file:/usr/local/hadoop_store/hdfs/datanode</value> </property> </configuration>

Format the new Hadoop file system:

hadoop namenode -format

DEPRECATED: Use of this script to execute hdfs command is deprecated.

Instead use the hdfs command for it.

15/09/27 16:55:03 INFO namenode.NameNode: STARTUP_MSG:

/************************************************************

STARTUP_MSG: Starting NameNode

STARTUP_MSG: host = centos-kmeans.novalocal/192.168.122.213

STARTUP_MSG: args = [-format]

STARTUP_MSG: version = 2.6.0

STARTUP_MSG: classpath = /usr/local/hadoop/etc/hadoop:/usr/local/hadoop/share/hadoop/common/lib/jackson-xc-1.9.13.jar:/usr/local/hadoop/share/hadoop/common/lib/netty-3.6.2.Final.jar:/usr/local/hadoop/share/hadoop/common/lib/jackson-core-asl-1.9.13.jar:/usr/local/hadoop/share/hadoop/common/lib/snappy-java-1.0.4.1.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-collections-3.2.1.jar:/usr/local/hadoop/share/hadoop/common/lib/jersey-json-1.9.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-beanutils-1.7.0.jar:/usr/local/hadoop/share/hadoop/common/lib/slf4j-log4j12-1.7.5.jar:/usr/local/hadoop/share/hadoop/common/lib/apacheds-i18n-2.0.0-M15.jar:/usr/local/hadoop/share/hadoop/common/lib/hadoop-auth-2.6.0.jar:/usr/local/hadoop/share/hadoop/common/lib/hadoop-annotations-2.6.0.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-codec-1.4.jar:/usr/local/hadoop/share/hadoop/common/lib/httpcore-4.2.5.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-digester-1.8.jar:/usr/local/hadoop/share/hadoop/common/lib/asm-3.2.jar:/usr/local/hadoop/share/hadoop/common/lib/jersey-core-1.9.jar:/usr/local/hadoop/share/hadoop/common/lib/jettison-1.1.jar:/usr/local/hadoop/share/hadoop/common/lib/jersey-server-1.9.jar:/usr/local/hadoop/share/hadoop/common/lib/jetty-util-6.1.26.jar:/usr/local/hadoop/share/hadoop/common/lib/java-xmlbuilder-0.4.jar:/usr/local/hadoop/share/hadoop/common/lib/jaxb-api-2.2.2.jar:/usr/local/hadoop/share/hadoop/common/lib/curator-recipes-2.6.0.jar:/usr/local/hadoop/share/hadoop/common/lib/api-asn1-api-1.0.0-M20.jar:/usr/local/hadoop/share/hadoop/common/lib/gson-2.2.4.jar:/usr/local/hadoop/share/hadoop/common/lib/curator-client-2.6.0.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-lang-2.6.jar:/usr/local/hadoop/share/hadoop/common/lib/curator-framework-2.6.0.jar:/usr/local/hadoop/share/hadoop/common/lib/slf4j-api-1.7.5.jar:/usr/local/hadoop/share/hadoop/common/lib/guava-11.0.2.jar:/usr/local/hadoop/share/hadoop/common/lib/junit-4.11.jar:/usr/local/hadoop/share/hadoop/common/lib/api-util-1.0.0-M20.jar:/usr/local/hadoop/share/hadoop/common/lib/jackson-jaxrs-1.9.13.jar:/usr/local/hadoop/share/hadoop/common/lib/httpclient-4.2.5.jar:/usr/local/hadoop/share/hadoop/common/lib/jetty-6.1.26.jar:/usr/local/hadoop/share/hadoop/common/lib/jasper-runtime-5.5.23.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-httpclient-3.1.jar:/usr/local/hadoop/share/hadoop/common/lib/stax-api-1.0-2.jar:/usr/local/hadoop/share/hadoop/common/lib/htrace-core-3.0.4.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-compress-1.4.1.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-logging-1.1.3.jar:/usr/local/hadoop/share/hadoop/common/lib/hamcrest-core-1.3.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-net-3.1.jar:/usr/local/hadoop/share/hadoop/common/lib/apacheds-kerberos-codec-2.0.0-M15.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-io-2.4.jar:/usr/local/hadoop/share/hadoop/common/lib/servlet-api-2.5.jar:/usr/local/hadoop/share/hadoop/common/lib/xmlenc-0.52.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-cli-1.2.jar:/usr/local/hadoop/share/hadoop/common/lib/jsr305-1.3.9.jar:/usr/local/hadoop/share/hadoop/common/lib/jackson-mapper-asl-1.9.13.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-math3-3.1.1.jar:/usr/local/hadoop/share/hadoop/common/lib/paranamer-2.3.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-el-1.0.jar:/usr/local/hadoop/share/hadoop/common/lib/jasper-compiler-5.5.23.jar:/usr/local/hadoop/share/hadoop/common/lib/jsch-0.1.42.jar:/usr/local/hadoop/share/hadoop/common/lib/protobuf-java-2.5.0.jar:/usr/local/hadoop/share/hadoop/common/lib/jaxb-impl-2.2.3-1.jar:/usr/local/hadoop/share/hadoop/common/lib/xz-1.0.jar:/usr/local/hadoop/share/hadoop/common/lib/jsp-api-2.1.jar:/usr/local/hadoop/share/hadoop/common/lib/mockito-all-1.8.5.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-configuration-1.6.jar:/usr/local/hadoop/share/hadoop/common/lib/zookeeper-3.4.6.jar:/usr/local/hadoop/share/hadoop/common/lib/avro-1.7.4.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-beanutils-core-1.8.0.jar:/usr/local/hadoop/share/hadoop/common/lib/jets3t-0.9.0.jar:/usr/local/hadoop/share/hadoop/common/lib/log4j-1.2.17.jar:/usr/local/hadoop/share/hadoop/common/lib/activation-1.1.jar:/usr/local/hadoop/share/hadoop/common/hadoop-common-2.6.0-tests.jar:/usr/local/hadoop/share/hadoop/common/hadoop-common-2.6.0.jar:/usr/local/hadoop/share/hadoop/common/hadoop-nfs-2.6.0.jar:/usr/local/hadoop/share/hadoop/hdfs:/usr/local/hadoop/share/hadoop/hdfs/lib/netty-3.6.2.Final.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/jackson-core-asl-1.9.13.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/commons-codec-1.4.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/asm-3.2.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/jersey-core-1.9.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/jersey-server-1.9.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/jetty-util-6.1.26.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/commons-lang-2.6.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/guava-11.0.2.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/xml-apis-1.3.04.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/jetty-6.1.26.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/jasper-runtime-5.5.23.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/htrace-core-3.0.4.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/commons-logging-1.1.3.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/xercesImpl-2.9.1.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/commons-io-2.4.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/servlet-api-2.5.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/xmlenc-0.52.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/commons-cli-1.2.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/jsr305-1.3.9.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/jackson-mapper-asl-1.9.13.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/commons-el-1.0.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/protobuf-java-2.5.0.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/commons-daemon-1.0.13.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/jsp-api-2.1.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/log4j-1.2.17.jar:/usr/local/hadoop/share/hadoop/hdfs/hadoop-hdfs-nfs-2.6.0.jar:/usr/local/hadoop/share/hadoop/hdfs/hadoop-hdfs-2.6.0-tests.jar:/usr/local/hadoop/share/hadoop/hdfs/hadoop-hdfs-2.6.0.jar:/usr/local/hadoop/share/hadoop/yarn/lib/jackson-xc-1.9.13.jar:/usr/local/hadoop/share/hadoop/yarn/lib/netty-3.6.2.Final.jar:/usr/local/hadoop/share/hadoop/yarn/lib/jackson-core-asl-1.9.13.jar:/usr/local/hadoop/share/hadoop/yarn/lib/commons-collections-3.2.1.jar:/usr/local/hadoop/share/hadoop/yarn/lib/jersey-json-1.9.jar:/usr/local/hadoop/share/hadoop/yarn/lib/commons-codec-1.4.jar:/usr/local/hadoop/share/hadoop/yarn/lib/jline-0.9.94.jar:/usr/local/hadoop/share/hadoop/yarn/lib/asm-3.2.jar:/usr/local/hadoop/share/hadoop/yarn/lib/jersey-core-1.9.jar:/usr/local/hadoop/share/hadoop/yarn/lib/jettison-1.1.jar:/usr/local/hadoop/share/hadoop/yarn/lib/jersey-server-1.9.jar:/usr/local/hadoop/share/hadoop/yarn/lib/jetty-util-6.1.26.jar:/usr/local/hadoop/share/hadoop/yarn/lib/jaxb-api-2.2.2.jar:/usr/local/hadoop/share/hadoop/yarn/lib/aopalliance-1.0.jar:/usr/local/hadoop/share/hadoop/yarn/lib/commons-lang-2.6.jar:/usr/local/hadoop/share/hadoop/yarn/lib/guava-11.0.2.jar:/usr/local/hadoop/share/hadoop/yarn/lib/jersey-guice-1.9.jar:/usr/local/hadoop/share/hadoop/yarn/lib/javax.inject-1.jar:/usr/local/hadoop/share/hadoop/yarn/lib/jackson-jaxrs-1.9.13.jar:/usr/local/hadoop/share/hadoop/yarn/lib/jetty-6.1.26.jar:/usr/local/hadoop/share/hadoop/yarn/lib/commons-httpclient-3.1.jar:/usr/local/hadoop/share/hadoop/yarn/lib/stax-api-1.0-2.jar:/usr/local/hadoop/share/hadoop/yarn/lib/commons-compress-1.4.1.jar:/usr/local/hadoop/share/hadoop/yarn/lib/leveldbjni-all-1.8.jar:/usr/local/hadoop/share/hadoop/yarn/lib/commons-logging-1.1.3.jar:/usr/local/hadoop/share/hadoop/yarn/lib/guice-3.0.jar:/usr/local/hadoop/share/hadoop/yarn/lib/jersey-client-1.9.jar:/usr/local/hadoop/share/hadoop/yarn/lib/commons-io-2.4.jar:/usr/local/hadoop/share/hadoop/yarn/lib/servlet-api-2.5.jar:/usr/local/hadoop/share/hadoop/yarn/lib/commons-cli-1.2.jar:/usr/local/hadoop/share/hadoop/yarn/lib/jsr305-1.3.9.jar:/usr/local/hadoop/share/hadoop/yarn/lib/jackson-mapper-asl-1.9.13.jar:/usr/local/hadoop/share/hadoop/yarn/lib/protobuf-java-2.5.0.jar:/usr/local/hadoop/share/hadoop/yarn/lib/jaxb-impl-2.2.3-1.jar:/usr/local/hadoop/share/hadoop/yarn/lib/xz-1.0.jar:/usr/local/hadoop/share/hadoop/yarn/lib/zookeeper-3.4.6.jar:/usr/local/hadoop/share/hadoop/yarn/lib/log4j-1.2.17.jar:/usr/local/hadoop/share/hadoop/yarn/lib/guice-servlet-3.0.jar:/usr/local/hadoop/share/hadoop/yarn/lib/activation-1.1.jar:/usr/local/hadoop/share/hadoop/yarn/hadoop-yarn-api-2.6.0.jar:/usr/local/hadoop/share/hadoop/yarn/hadoop-yarn-server-resourcemanager-2.6.0.jar:/usr/local/hadoop/share/hadoop/yarn/hadoop-yarn-client-2.6.0.jar:/usr/local/hadoop/share/hadoop/yarn/hadoop-yarn-server-applicationhistoryservice-2.6.0.jar:/usr/local/hadoop/share/hadoop/yarn/hadoop-yarn-server-web-proxy-2.6.0.jar:/usr/local/hadoop/share/hadoop/yarn/hadoop-yarn-server-common-2.6.0.jar:/usr/local/hadoop/share/hadoop/yarn/hadoop-yarn-server-nodemanager-2.6.0.jar:/usr/local/hadoop/share/hadoop/yarn/hadoop-yarn-registry-2.6.0.jar:/usr/local/hadoop/share/hadoop/yarn/hadoop-yarn-server-tests-2.6.0.jar:/usr/local/hadoop/share/hadoop/yarn/hadoop-yarn-applications-distributedshell-2.6.0.jar:/usr/local/hadoop/share/hadoop/yarn/hadoop-yarn-common-2.6.0.jar:/usr/local/hadoop/share/hadoop/yarn/hadoop-yarn-applications-unmanaged-am-launcher-2.6.0.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/netty-3.6.2.Final.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/jackson-core-asl-1.9.13.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/snappy-java-1.0.4.1.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/hadoop-annotations-2.6.0.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/asm-3.2.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/jersey-core-1.9.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/jersey-server-1.9.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/aopalliance-1.0.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/jersey-guice-1.9.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/javax.inject-1.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/junit-4.11.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/commons-compress-1.4.1.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/leveldbjni-all-1.8.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/hamcrest-core-1.3.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/guice-3.0.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/commons-io-2.4.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/jackson-mapper-asl-1.9.13.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/paranamer-2.3.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/protobuf-java-2.5.0.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/xz-1.0.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/avro-1.7.4.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/log4j-1.2.17.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/guice-servlet-3.0.jar:/usr/local/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-jobclient-2.6.0.jar:/usr/local/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-shuffle-2.6.0.jar:/usr/local/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-common-2.6.0.jar:/usr/local/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-hs-2.6.0.jar:/usr/local/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-app-2.6.0.jar:/usr/local/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-core-2.6.0.jar:/usr/local/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-jobclient-2.6.0-tests.jar:/usr/local/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.6.0.jar:/usr/local/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-hs-plugins-2.6.0.jar:/usr/local/hadoop/contrib/capacity-scheduler/*.jar:/usr/local/hadoop/contrib/capacity-scheduler/*.jar

STARTUP_MSG: build = https://git-wip-us.apache.org/repos/asf/hadoop.git -r e3496499ecb8d220fba99dc5ed4c99c8f9e33bb1; compiled by 'jenkins' on 2014-11-13T21:10Z

STARTUP_MSG: java = 1.7.0_85

************************************************************/

15/09/27 16:55:03 INFO namenode.NameNode: registered UNIX signal handlers for [TERM, HUP, INT]

15/09/27 16:55:04 INFO namenode.NameNode: createNameNode [-format]

15/09/27 16:55:04 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Formatting using clusterid: CID-d4c6083f-48cd-4954-9960-376716496d42

15/09/27 16:55:05 INFO namenode.FSNamesystem: No KeyProvider found.

15/09/27 16:55:05 INFO namenode.FSNamesystem: fsLock is fair:true

15/09/27 16:55:05 INFO blockmanagement.DatanodeManager: dfs.block.invalidate.limit=1000

15/09/27 16:55:05 INFO blockmanagement.DatanodeManager: dfs.namenode.datanode.registration.ip-hostname-check=true

15/09/27 16:55:05 INFO blockmanagement.BlockManager: dfs.namenode.startup.delay.block.deletion.sec is set to 000:00:00:00.000

15/09/27 16:55:05 INFO blockmanagement.BlockManager: The block deletion will start around 2015 Sep 27 16:55:05

15/09/27 16:55:05 INFO util.GSet: Computing capacity for map BlocksMap

15/09/27 16:55:05 INFO util.GSet: VM type = 64-bit

15/09/27 16:55:05 INFO util.GSet: 2.0% max memory 966.7 MB = 19.3 MB

15/09/27 16:55:05 INFO util.GSet: capacity = 2^21 = 2097152 entries

15/09/27 16:55:05 INFO blockmanagement.BlockManager: dfs.block.access.token.enable=false

15/09/27 16:55:05 INFO blockmanagement.BlockManager: defaultReplication = 3

15/09/27 16:55:05 INFO blockmanagement.BlockManager: maxReplication = 512

15/09/27 16:55:05 INFO blockmanagement.BlockManager: minReplication = 1

15/09/27 16:55:05 INFO blockmanagement.BlockManager: maxReplicationStreams = 2

15/09/27 16:55:05 INFO blockmanagement.BlockManager: shouldCheckForEnoughRacks = false

15/09/27 16:55:05 INFO blockmanagement.BlockManager: replicationRecheckInterval = 3000

15/09/27 16:55:05 INFO blockmanagement.BlockManager: encryptDataTransfer = false

15/09/27 16:55:05 INFO blockmanagement.BlockManager: maxNumBlocksToLog = 1000

15/09/27 16:55:05 INFO namenode.FSNamesystem: fsOwner = cbuser (auth:SIMPLE)

15/09/27 16:55:05 INFO namenode.FSNamesystem: supergroup = supergroup

15/09/27 16:55:05 INFO namenode.FSNamesystem: isPermissionEnabled = true

15/09/27 16:55:05 INFO namenode.FSNamesystem: HA Enabled: false

15/09/27 16:55:05 INFO namenode.FSNamesystem: Append Enabled: true

15/09/27 16:55:05 INFO util.GSet: Computing capacity for map INodeMap

15/09/27 16:55:05 INFO util.GSet: VM type = 64-bit

15/09/27 16:55:05 INFO util.GSet: 1.0% max memory 966.7 MB = 9.7 MB

15/09/27 16:55:05 INFO util.GSet: capacity = 2^20 = 1048576 entries

15/09/27 16:55:05 INFO namenode.NameNode: Caching file names occuring more than 10 times

15/09/27 16:55:05 INFO util.GSet: Computing capacity for map cachedBlocks

15/09/27 16:55:05 INFO util.GSet: VM type = 64-bit

15/09/27 16:55:05 INFO util.GSet: 0.25% max memory 966.7 MB = 2.4 MB

15/09/27 16:55:05 INFO util.GSet: capacity = 2^18 = 262144 entries

15/09/27 16:55:05 INFO namenode.FSNamesystem: dfs.namenode.safemode.threshold-pct = 0.9990000128746033

15/09/27 16:55:05 INFO namenode.FSNamesystem: dfs.namenode.safemode.min.datanodes = 0

15/09/27 16:55:05 INFO namenode.FSNamesystem: dfs.namenode.safemode.extension = 30000

15/09/27 16:55:05 INFO namenode.FSNamesystem: Retry cache on namenode is enabled

15/09/27 16:55:05 INFO namenode.FSNamesystem: Retry cache will use 0.03 of total heap and retry cache entry expiry time is 600000 millis

15/09/27 16:55:05 INFO util.GSet: Computing capacity for map NameNodeRetryCache

15/09/27 16:55:05 INFO util.GSet: VM type = 64-bit

15/09/27 16:55:05 INFO util.GSet: 0.029999999329447746% max memory 966.7 MB = 297.0 KB

15/09/27 16:55:05 INFO util.GSet: capacity = 2^15 = 32768 entries

15/09/27 16:55:05 INFO namenode.NNConf: ACLs enabled? false

15/09/27 16:55:05 INFO namenode.NNConf: XAttrs enabled? true

15/09/27 16:55:05 INFO namenode.NNConf: Maximum size of an xattr: 16384

15/09/27 16:59:34 INFO namenode.FSImage: Allocated new BlockPoolId: BP-798775859-192.168.122.213-1443373174722

15/09/27 16:59:34 INFO common.Storage: Storage directory /usr/local/hadoop_store/hdfs/namenode has been successfully formatted.

15/09/27 16:59:35 INFO namenode.NNStorageRetentionManager: Going to retain 1 images with txid >= 0

15/09/27 16:59:35 INFO util.ExitUtil: Exiting with status 0

15/09/27 16:59:35 INFO namenode.NameNode: SHUTDOWN_MSG:

/************************************************************

SHUTDOWN_MSG: Shutting down NameNode at centos-kmeans.novalocal/192.168.122.213

************************************************************/

[cbuser@centos-kmeans ~]$

Get HiBench from kit:

mv ~/osgcloud/workloads/hibench ~/HiBench

Remove the IP address and hostname added to /etc/hosts. Take a snapshot of this VM. The image is ready to be used with the benchmark. It contains CBTOOL depenencies, Hadoop 2.6.0, HiBench 2.0, and Mahout 0.7.

(ppc_64/Ubuntu/Trusty/QCOW2) - Common workload image¶

Instructions same as x86_64 Ubuntu image.

(ppc_64/Ubuntu/Trusty/QCOW2) - Cassandra and YCSB¶

Instructions same as Ubuntu x86_64 image.

(ppc_64/Ubuntu/Trusty/QCOW2) - KMeans and Hadoop¶

Instructions same as Ubuntu x86_64 image.

(AMI_x86_64/Ubuntu/Trusty/QCOW2) - Common workload image¶

CBTOOL github wiki provides instructions on how to prepare a workload image for your cloud:

https://github.com/ibmcb/cbtool/wiki/HOWTO:-Preparing-a-VM-to-be-used-with-CBTOOL-on-a-real-cloud

The instructions below can be used to prepare a base qcow2 image for Cassandra or KMeans workloads.

Create a VM using Ubuntu 14.04 image with a flavor m3.medium (1 VCPU, 7.5GB)

Determine the IP address of VM using ifconfig, and add IP address and hostname of your VM in /etc/hosts file:

vi /etc/hosts IPADDR HOSTNAME

Update with latest packages and git:

sudo apt-get update sudo apt-get install git -y

Create a cbuser account:

sudo adduser cbuser Set appropriate password

Give it password-less access:

sudo visudo Add the following line. cbuser ALL=(ALL:ALL) NOPASSWD: ALL

Switch to this user:

su cbuser (password should be cbuser) cd ~/

Unzip the kit and move osgcloud into home directory:

cd ~/ unzip spec_cloud_iaas*.zip mv spec_cloud_iaas*/osgcloud ~/

Add the key from CBTOOL into the authorized_keys file of this VM.:

sudo cp -r /home/ubuntu/.ssh ~/ sudo chown -R cbuser:cbuser ~/.ssh

Install NTP package:

sudo apt-get install ntp -y

Test ssh connectivity to your VM from cbtool:

ssh -i /home/ubuntu/osgcloud/cbtool/credentials/cbtool_rsa cbuser@YOURVMIP

If this works, cloudbench can do a key-based ssh in this VM.

Search for “UseDNS” in /etc/ssh/sshd_config. If it does not exist, insert the following line in /etc/ssh/sshd_config:

vi /etc/ssh/sshd_config UseDNS no sudo service ssh restart

Check /home/cbuser/.ssh/config

If it containers a line:

-e UserKnownHostsFile=/dev/null

Remove the “-e ” and save the file.

The contents of this file should be same as:

StrictHostKeyChecking=no

UserKnownHostsFile=/dev/null

13. Install null workload. This will install all CBTOOL dependencies for the workload image. Then you can install the workloads by following platform specific instructions:

cd /home/cbuser/osgcloud/

cbtool/install -r workload --wks nullworkload

If there are any errors, rerun the command until it exits without any errors. A successful output should return the following:

“All dependencies are in place”

13. Remove the hostname added in /etc/hosts and take a snapshot of this VM. The snapshot instructions vary per cloud. You can then use this image to prepare workload images.

(AMI_x86_64/Ubuntu/Trusty/QCOW2) - Cassandra and YCSB¶

Instructions same as Ubuntu

(AMI_x86_64/Ubuntu/Trusty/QCOW2) KMeans and Hadoop¶

Instructions same as Ubuntu x86_64 image.

(gcloud_x86_64/Ubuntu/Trusty/QCOW2) - Common workload image¶

CBTOOL github wiki provides instructions on how to prepare a workload image for your cloud:

https://github.com/ibmcb/cbtool/wiki/HOWTO:-Preparing-a-VM-to-be-used-with-CBTOOL-on-a-real-cloud

The instructions below can be used to prepare a base GCE image for Cassandra or KMeans workloads.

Create a VM using Ubuntu 14.04 image with a flavor m3.medium (1 VCPU, 7.5GB).Click on ‘Disks’ and uncheck the ‘Delete boot disk when instance is deleted’.

Determine the IP address of VM using ifconfig, and add IP address and hostname of your VM in /etc/hosts file:

vi /etc/hosts IPADDR HOSTNAME

Update with latest packages and git:

sudo apt-get update sudo apt-get install unzip git -y

Copy the kit into this instance, unzip the kit and move osgcloud into home directory:

cd ~/ unzip spec_cloud_iaas*.zip mv spec_cloud_iaas*/osgcloud ~/

Install ntp package:

sudo apt-get install ntp -y

Test ssh connectivity to your VM from cbtool:

ssh -i /home/ubuntu/osgcloud/cbtool/credentials/cbtool_rsa cbuser@YOURVMIP

If this works, cloudbench can do a key-based ssh in this VM.

Search for UseDNS in /etc/ssh/sshd_config. If it does not exist, insert the following line in /etc/ssh/sshd_config:

vi /etc/ssh/sshd_config UseDNS no sudo service ssh restart

Check /home/cbuser/.ssh/config

If it containers a line:

-e UserKnownHostsFile=/dev/null

Remove the “-e ” and save the file.

The contents of this file should be same as:

StrictHostKeyChecking=no

UserKnownHostsFile=/dev/null

9. Install null workload. This will install all CBTOOL dependencies for the workload image. Then you can install the workloads by following platform specific instructions:

cd /home/cbuser/osgcloud/

cbtool/install -r workload --wks nullworkload

If there are any errors, rerun the command until it exits without any errors. A successful output should return the following:

“All dependencies are in place”

10. Remove the hostname added in /etc/hosts and take a snapshot of this VM. The snapshot instructions vary per cloud. You can then use this image to prepare workload images.

Delete the VM.

Create an image from the disk of this deleted VM through UI or command line. Command line instructions are as follows:

gcloud compute images create NAMEOFIMAGE --source-disk-zone us-central1-f --source-disk NAMEOFDELETEDVM

(gcloud/x86_64/Ubuntu/Trusty) - Cassandra and YCSB¶

Instructions same as Ubuntu.

(gcloud/x86_64/Ubuntu/Trusty) - KMeans and Hadoop¶

Instructions same as Ubuntu.

Upload Images in Your Cloud, Launch VM, and Launch AI¶

It is assumed that a tester knows how to upload images in the cloud under test. Sample instructions for an OpenStack cloud are provided below.

[For development only] YCSB and K-Means images (centos & ubuntu) are available on SPEC Miami repository. The tester can download these images:

centos:

https://spec.cs.miami.edu/private/osg/cloud/images/latest/cb_speccloud_cassandra_centos.qcow2

https://spec.cs.miami.edu/private/osg/cloud/images/latest/cb_speccloud_kmeans_hadoop_271_centos.qcow2

ubuntu:

wget https://spec.cs.miami.edu/private/osg/cloud/images/latest/cb_speccloud_cassandra_2111.qcow2

wget https://spec.cs.miami.edu/private/osg/cloud/images/latest/cb_speccloud_hadoop_271.qcow2

Assume OpenStack credentials have been sourced, downloaded the cassandra image at the following path on cloud controller:

centos:

/home/ubuntu/images/cb_speccloud_cassandra_centos.qcow2

ubuntu:

/home/ubuntu/images/cb_speccloud_cassandra_2111.qcow2

Upload the image into OpenStack cloud:

centos:

glance image-create --name cb_speccloud_cassandra_centos.qcow2 --disk-format qcow2 --container-format bare --is-public True --file /home/ubuntu/images/cb_speccloud_cassandra_centos.qcow2

ubuntu:

glance image-create --name cb_speccloud_cassandra_2111.qcow2 --disk-format qcow2 --container-format bare --is-public True --file /home/ubuntu/images/cb_speccloud_cassandra_2111.qcow2

Similar steps can be followed to upload the KMeans image.

Configuring CBTOOL with the Images Uploaded in OpenStack Cloud¶

Edit the cloud definitions file and add the [VM_TEMPLATES : OSK_CLOUDCONFIG] after the “ADD BELOW” line:

vi ubuntu_cloud_definitions.txt [USER-DEFINED : CLOUDOPTION_MYOPENSTACK] OSK_ACCESS = http://PUBLICIP:5000/v2.0/ # Address of controlled node (where nova-api runs) OSK_CREDENTIALS = admin-admin-admin # user-password-tenant OSK_SECURITY_GROUPS = default # Make sure that this group exists first OSK_INITIAL_VMCS = RegionOne # Change "RegionOne" accordingly OSK_LOGIN = cbuser # The username that logins on the VMs OSK_KEY_NAME = spec_key # SSH key for logging into workload VMs OSK_SSH_KEY_NAME = spec_key # SSH key for logging into workload VMs OSK_NETNAME = public ADD BELOW [VM_TEMPLATES : OSK_CLOUDCONFIG] # setting various CBTOOL roles and images # the images have to exist in OpenStack glance. #choose required images (centos or ubuntu) and comment other images. # centos images CASSANDRA = size:m1.medium, imageid1:cb_speccloud_cassandra_centos YCSB = size:m1.medium, imageid1:cb_speccloud_cassandra_centos SEED = size:m1.medium, imageid1:cb_speccloud_cassandra_centos HADOOPMASTER = size:m1.medium, imageid1:cb_speccloud_kmeans_centos HADOOPSLAVE = size:m1.medium, imageid1:cb_speccloud_kmeans_centos #ubuntu images CASSANDRA = size:m1.medium, imageid1:cassandra_ubuntu YCSB = size:m1.medium, imageid1:cb_speccloud_cassandra SEED = size:m1.medium, imageid1:cb_speccloud_cassandra HADOOPMASTER = size:m1.medium, imageid1:cb_speccloud_kmeans HADOOPSLAVE = size:m1.medium, imageid1:cb_speccloud_kmeans

Launch a VM and Test It¶

In your CBTOOL CLI, type the following:

centos:

(MYOPENSTACK) cldalter vm_defaults run_generic_scripts=False

(MYOPENSTACK) cldalter MYOPENSTACK ai_defaults dont_start_load_manager True

(MYOPENSTACK) typealter MYOPENSTACK cassandra_ycsb cassandra_conf_path /etc/cassandra/conf/cassandra.yaml

(MYOPENSTACK) vmattach cassandra

ubuntu:

(MYOPENSTACK) cldalter vm_defaults run_generic_scripts=False

vmattach cassandra

This will create a VM from Cassandra image that uploaded earlier. Once the VM is created, test ping connectivity to the VM. CBTOOL will not run any generic scripts into this image. Here is a sample output:

(MYOPENSTACK) vmattach cassandra

status: Starting an instance on OpenStack, using the imageid "cassandra_ubuntu_final" (<Image: cassandra_ubuntu_final>) and size "m1.medium" (<Flavor: m1.medium>), network identifier "[{'net-id': u'24bf3e75-c883-41e6-9ee7-4ca0f16e8fa9'}]", on VMC "RegionOne"

status: Waiting for vm_3 (cloud-assigned uuid a5f1047d-8127-435b-b2f6-10fa96e19fdc) to start...

status: Trying to establish network connectivity to vm_3 (cloud-assigned uuid a5f1047d-8127-435b-b2f6-10fa96e19fdc), on IP address 9.47.240.221...

status: Checking ssh accessibility on vm_3 (cbuser@9.47.240.221)...

status: Boostrapping vm_3 (creating file cb_os_paramaters.txt in "cbuser" user's home dir on 9.47.240.221)...

status: Sending a copy of the code tree to vm_3 (9.47.240.221)...

status: Bypassing generic VM post_boot configuration on vm_3 (9.47.240.221)...

VM object 9440AAF5-B5D9-5FF6-8845-45DBCFBA0270 (named "vm_3") sucessfully attached to this experiment. It is ssh-accessible at the IP address 9.47.240.221 (cb-ubuntu-MYOPENSTACK-vm3-cassandra).

(MYOPENSTACK)

Configure /etc/ntp.conf with the NTP server in your environment and then run:

sudo ntpd -gq

If NTP is not installed in your image, then the image must be recreated with NTP package installed.

If the result of running the above command is zero (echo $?), then the instance can reach the intended NTP server.

Next, restart CBTOOL:

cd ~/osgcloud/cbtool

./cb --hard_reset -c configs/ubuntu_cloud_definitions.txt

Create a VM again:

vmattach cassandra

If VM is successfully created, CBTOOL is able to copy scripts into the VM.

Adjust the number of attemps CBTOOL makes to test if VM is running. This comes handy if the cloud under test takes a long time to provision. This value is automatically set during a compliant run based on maximum of average AI provisioning time measured during baseline phase:

cldalter MYOPENSTACK vm_defaults update_attempts 100

Repeat this process for all role names, that is:

vmattach ycsb

vmattach hadoopmaster

vmattach hadoopslave

Launching Your First AI¶

This instructions assume that CBTOOL has been configured and images have been uploaded in the cloud. If that is not the case, please refer to earlier instructions.

Launch CBTOOL

./cb --hard_reset -c configs/ubuntu_cloud_definitions.txt

Launching YCSB/Cassandra AI¶

Launch your first AI of Cassandra:

centos:

typealter MYOPENSTACK cassandra_ycsb cassandra_conf_path /etc/cassandra/conf/cassandra.yaml

aiattach cassandra_ycsb

ubuntu:

aiattach cassandra_ycsb

This will create a three instance Cassandra cluster, with one instance as YCSB, and two instances as Cassandra seeds. Note that the AI size for YCSB/Cassandra for SPEC Cloud IaaS 2016 Benchmark is seven instances. However, this step is simply a test to verify that YCSB/Cassandra cluster is successfully created.

You will see an output similar to:

(MYOPENSTACK) aiattach cassandra_ycsb

status: Starting an instance on OpenStack, using the imageid "cb_speccloud_cassandra_2111" (<Image: cb_speccloud_cassandra_2111>) and size "m1.large" (<Flavor: m1.large>), connected to networks "flat_net", on VMC "RegionOne", under tenant "default" (ssh key is "root_default_cbtool_rsa")

status: Starting an instance on OpenStack, using the imageid "cb_speccloud_cassandra_2111" (<Image: cb_speccloud_cassandra_2111>) and size "m1.large" (<Flavor: m1.large>), connected to networks "flat_net", on VMC "RegionOne", under tenant "default" (ssh key is "root_default_cbtool_rsa")

status: Starting an instance on OpenStack, using the imageid "cb_speccloud_cassandra_2111" (<Image: cb_speccloud_cassandra_2111>) and size "m1.large" (<Flavor: m1.large>), connected to networks "flat_net", on VMC "RegionOne", under tenant "default" (ssh key is "root_default_cbtool_rsa")

status: Waiting for vm_2 (cloud-assigned uuid 315576a1-7fe4-4ee0-95b3-f78ce5f4a99c) to start...

status: Waiting for vm_3 (cloud-assigned uuid f50645aa-6744-4c70-bb64-672e60551b99) to start...

status: Waiting for vm_1 (cloud-assigned uuid f504cb52-18db-4e6b-b779-2f1038a35400) to start...

status: Trying to establish network connectivity to vm_1 (cloud-assigned uuid f504cb52-18db-4e6b-b779-2f1038a35400), on IP address 10.146.4.117...

status: Trying to establish network connectivity to vm_2 (cloud-assigned uuid 315576a1-7fe4-4ee0-95b3-f78ce5f4a99c), on IP address 10.146.4.116...

status: Trying to establish network connectivity to vm_3 (cloud-assigned uuid f50645aa-6744-4c70-bb64-672e60551b99), on IP address 10.146.4.115...

status: Checking ssh accessibility on vm_2 (cbuser@10.146.4.116)...

status: Checking ssh accessibility on vm_3 (cbuser@10.146.4.115)...

status: Checking ssh accessibility on vm_1 (cbuser@10.146.4.117)...

status: Command "/bin/true" failed to execute on hostname 10.146.4.116 after attempt 0. Will try 3 more times.

status: Command "/bin/true" failed to execute on hostname 10.146.4.115 after attempt 0. Will try 3 more times.

status: Command "/bin/true" failed to execute on hostname 10.146.4.117 after attempt 0. Will try 3 more times.

status: Bootstrapping vm_2 (creating file cb_os_paramaters.txt in "cbuser" user's home dir on 10.146.4.116)...

status: Bootstrapping vm_3 (creating file cb_os_paramaters.txt in "cbuser" user's home dir on 10.146.4.115)...

status: Bootstrapping vm_1 (creating file cb_os_paramaters.txt in "cbuser" user's home dir on 10.146.4.117)...

status: Sending a copy of the code tree to vm_2 (10.146.4.116)...

status: Sending a copy of the code tree to vm_3 (10.146.4.115)...

status: Sending a copy of the code tree to vm_1 (10.146.4.117)...

status: Performing generic application instance post_boot configuration on all VMs belonging to ai_1...

status: Running application-specific "setup" configuration on all VMs belonging to ai_1...

status: QEMU Scraper will NOT be automatically started during the deployment of ai_1...

AI object 3C4CEBCB-A884-58BE-9436-586184D1422B (named "ai_1") sucessfully attached to this experiment. It is ssh-accessible at the IP address 10.146.4.116 (cb-root-MYOPENSTACK-vm2-ycsb-ai-1).

If the AI fails to create because load manager will not run, please restart CloudBench, and type the following:

cd ~/osgcloud/cbtool

./cb --hard_reset

cldalter CLOUDNAME ai_defaults dont_start_load_manager True

aiattach cassandra_ycsb

Then, you can try to manually execute the scripts that are causing problems.

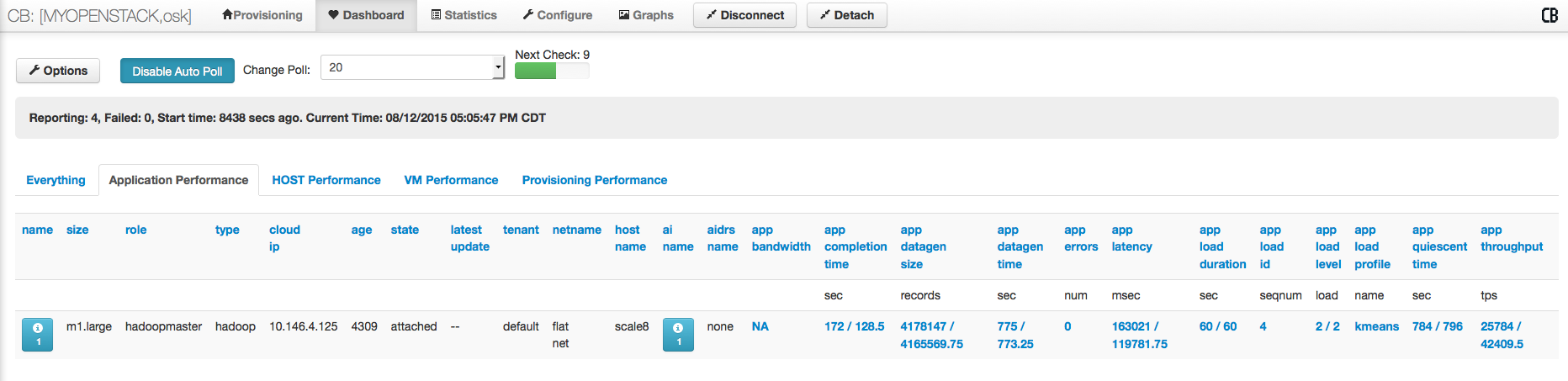

Verify that results are appearing in CBTOOL dashboard. Here is a screenshot.

Launching KMeans/Hadoop AI¶

Now, to launch your first AI of K-Means. These instructions assume that KMeans/Hadoop image has been created following the instructions listed above:

centos:

typealter YOURCLOUDNAME hadoop hadoop_home /usr/local/hadoop

typealter YOURCLOUDNAME hadoop java_home /usr/lib/jvm/java-1.7.0-openjdk-1.7.0.85-2.6.1.2.el7_1.x86_64

typealter YOURCLOUDNAME hadoop dfs_name_dir /usr/local/hadoop_store/hdfs/namenode

typealter YOURCLOUDNAME hadoop dfs_data_dir /usr/local/hadoop_store/hdfs/datanode

ubuntu:

typealter YOURCLOUDNAME hadoop hadoop_home /usr/local/hadoop

typealter YOURCLOUDNAME hadoop java_home /usr/lib/jvm/java-7-openjdk-amd64

typealter YOURCLOUDNAME hadoop dfs_name_dir /usr/local/hadoop_store/hdfs/namenode

typealter YOURCLOUDNAME hadoop dfs_data_dir /usr/local/hadoop_store/hdfs/datanode

aiattach hadoop

After the image is launched, you will see an output similar to the following at the CBTOOL prompt:

(MYOPENSTACK) aiattach hadoop