SPEC SFS®2014_vda Result

Copyright © 2016-2020 Standard Performance Evaluation Corporation


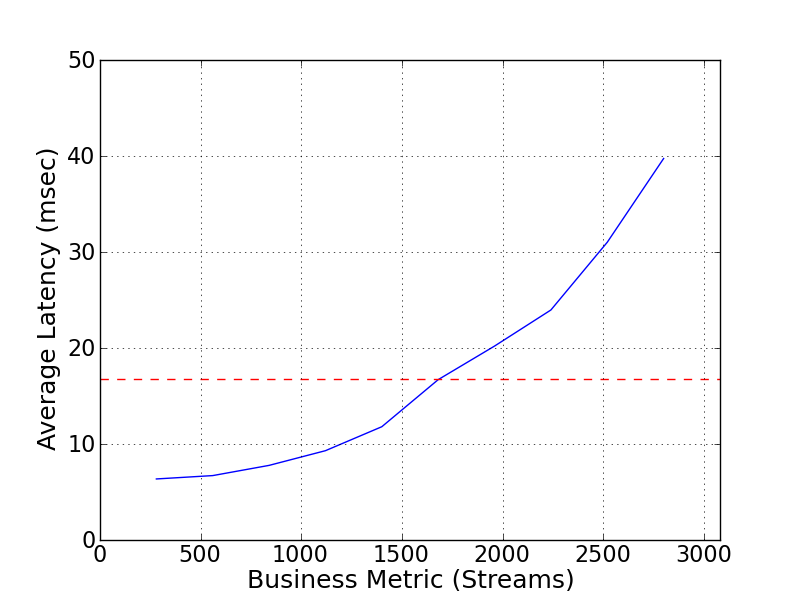

| DATATOM Corp.,Ltd. | SPEC SFS2014_vda = 2800 Streams |
|---|---|
| DATATOM INFINITY | Overall Response Time = 16.72 msec |


| DATATOM INFINITY | |
|---|---|
| Tested by | DATATOM Corp.,Ltd. |
| Hardware Available | 04/2020 |
| Software Available | 04/2020 |
| Date Tested | 04/2020 |
| License Number | 6039 |
| Licensee Locations | Chengdu, China |

INFINITY is a new generation of distributed cluster cloud storage developed by DATATOM. It is built on software-defined storage and provides unified file, block and object storage. It can also serve as cloud storage for Internet applications and as back-end storage for cloud platforms; supported platforms include VMware, OpenStack and Docker. Its on-demand scaling, performance aggregation and data security make it a high-performance cluster storage for a wide range of business applications, and it has been deployed in the broadcasting, financial, government, education, medical and other industries.

| Item No | Qty | Type | Vendor | Model/Name | Description |
|---|---|---|---|---|---|
| 1 | 360 | 4TB 7200RPM SATA HDD | Western Digital | HUS726T4TALE6L4 | Each server's data storage pool consists of 36 disks. Disk specification: size: 3.5 inch; interface: SATA 6Gb/s; capacity: 4TB; speed: 7200RPM. |
| 2 | 40 | 240GB SATA SSD | Seagate | XA240LE10003 | 40 SSDs in total. 10 are used by the storage server nodes (1/node) to store metadata. 20 are used by the storage server nodes (2/node) as RAID-1 pairs for the OS. 10 are used by the clients (2/node) as RAID-1 pairs for the OS. SSD specification: size: 2.5 inch; interface: SATA 6Gb/s; capacity: 240GB. |
| 3 | 10 | 800GB NVMe SSD | Intel | P3700 | One NVMe SSD resides in each of the 10 server nodes, for a total of 10. All of them are used to save storage logs. SSD specification: interface: PCIe NVMe 3.0 x4; capacity: 800GB. |
| 4 | 1 | Ethernet Switch | Dell EMC | S4048-ON | 48 x 10GbE and 6 x 40GbE ports. 40 of the 10GbE ports connect the storage server nodes; 5 of the 40GbE ports connect the storage client nodes. |
| 5 | 15 | Chassis | Chenbro | RM41736 Plus | High-density storage server chassis supporting 36 x 3.5" SAS/SATA drives with front/rear access. Cooling: 7 x 8038 hot-swap fans, 7500RPM. Power: 1+1 CRPS 1200W 80 PLUS Platinum power supply. |
| 6 | 15 | Motherboard | Supermicro | X10DRL-i | Motherboard model used in all server and client nodes. |
| 7 | 15 | Host Bus Adapter | Broadcom | SAS 9311-8i | One HBA per server and client node. Data transfer rate: 12 Gb/s SAS-3; I/O controller: LSI SAS 3008. |
| 8 | 30 | Processor | Intel | E5-2630V4 | Two CPUs per server and client node. Clock speed: 2.2 GHz; turbo clock speed: 3.1 GHz; cores: 10; threads: 20; architecture: x86-64; Smart Cache: 25 MB; max CPUs: 2. |
| 9 | 120 | Memory | Micron | MTA36ASF4G72PZ-2G6D1QG | 8 x 32GB DIMMs per server and client node. DDR4 functionality and operations supported as defined in the component data sheet; 32GB (4 Gig x 72); 288-pin registered dual in-line memory module (RDIMM); supports ECC error detection and correction. |
| 10 | 5 | 40GbE NIC | Intel | XL710-QDA2 | One 40GbE NIC per client node. Each client node generates load, and one of the nodes acts as the primary client. |
| 11 | 20 | 10GbE NIC | Intel | X520-DA2 | Two 10GbE NICs per server node, providing 4 network ports. Two ports, one from each NIC, form each dual 10 Gigabit bond. The two bonds serve as the internal data sync network and the external access network of the storage cluster. |

| Item No | Component | Type | Name and Version | Description |
|---|---|---|---|---|
| 1 | Storage Server Nodes | INFINITY filesystem | 3.5.0 | INFINITY is a unified storage system providing file, block and object storage. It adopts a global unified namespace and is compatible with a variety of NAS protocols, including CIFS/SMB, NFS, FTP, AFP and WebDAV. |
| 2 | Storage Server and Client nodes | Operating System | CentOS 7.4 | The operating system on each storage server and client node was 64-bit CentOS 7.4. |

Storage Node

| Parameter Name | Value | Description |
|---|---|---|
| block.db | None | The '--block.db' parameter is specified when adding the NVMe SSDs, which are used to save storage log information. |
| Network port bond | mod=6 | Every two network ports of a storage server form a bond with mod=6. One bond is used for the external access network and the other for the data sync network. |

In the INFINITY storage system, network performance is increased through network port bonding, and read-write performance is increased by using high-performance data disks. For safety, the OS resides on SSDs configured as RAID 1. The storage must be configured manually through the INFINITY management web page to ensure that data is stored on the designated storage devices.

Server Node

| Parameter Name | Value | Description |
|---|---|---|
| vm.dirty_writeback_centisecs | 100 | How often the kernel BDI thread wakes up to check whether cached data needs to be written to disk, in units of 1/100 second. The default is 500, meaning the BDI thread wakes every 5 seconds. For workloads with a large volume of continuous buffered writes, the value should be reduced so that the BDI thread detects the flush condition sooner, avoiding excessive accumulation of dirty data and the resulting write peaks. |
| vm.dirty_expire_centisecs | 100 | The age after which data in the Linux kernel write buffer is considered old and becomes eligible for writing to disk by the kernel BDI thread, in units of 1/100 second. The default is 3000, meaning data older than 30 seconds is flushed to disk. For workloads with a large volume of continuous buffered writes, the value should be reduced so that the flush condition triggers earlier and the write peak is smoothed out over multiple submissions. If the value is too small, I/O is submitted too frequently. |

Client Node

| Parameter Name | Value | Description |
|---|---|---|
| rsize,wsize | 1048576 | NFS mount options for data block size. |
| protocol | tcp | NFS mount option for protocol. |
| tcp_fin_timeout | 600 | TCP time to wait for the final packet before the socket is closed. |
| nfsvers | 4.1 | NFS mount option for NFS version. |

In short, the kernel's dirty-page flush mechanism works as follows: the kernel BDI thread periodically checks whether dirty pages are too old or too numerous and, if so, flushes them. The two vm.dirty parameters are set in /etc/sysctl.conf. The mount command used in the test was "mount -t nfs ServerIp:/infinityfs1/nfs /nfs". The resulting mount information is ServerIp:/infinityfs1/nfs on /nfs type nfs4 (rw,relatime,sync,vers=4.1,rsize=1048576,wsize=1048576,namlen=255,hard,proto=tcp,port=12049,timeo=600,retrans=2,sec=sys,clientaddr=ClientIp,local_lock=none,addr=ServerIp).
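The tuning values are easier to read once converted to familiar units. The following is a minimal Python sketch, not part of the tested configuration: it parses the option string reported in the mount information and converts the centisecond sysctl values to seconds. The helper name `parse_mount_options` and the variable names are illustrative assumptions.

```python
def parse_mount_options(option_string):
    """Turn a comma-separated mount option string into a dict.

    Options without '=' (such as 'rw' or 'hard') are stored as True.
    """
    options = {}
    for item in option_string.split(","):
        key, sep, value = item.partition("=")
        options[key] = value if sep else True
    return options


# Option string copied from the mount information in this report.
MOUNT_OPTIONS = (
    "rw,relatime,sync,vers=4.1,rsize=1048576,wsize=1048576,namlen=255,hard,"
    "proto=tcp,port=12049,timeo=600,retrans=2,sec=sys,clientaddr=ClientIp,"
    "local_lock=none,addr=ServerIp"
)

opts = parse_mount_options(MOUNT_OPTIONS)
rsize_mib = int(opts["rsize"]) / 2**20   # read block size in MiB
wsize_mib = int(opts["wsize"]) / 2**20   # write block size in MiB

# The vm.dirty_*_centisecs sysctls are expressed in 1/100 second.
tuned_writeback_s = 100 / 100      # value used on the server nodes
default_writeback_s = 500 / 100    # default stated in the report
tuned_expire_s = 100 / 100         # value used on the server nodes
default_expire_s = 3000 / 100      # default stated in the report

print(f"NFS version {opts['vers']} over {opts['proto']}")
print(f"rsize = {rsize_mib:g} MiB, wsize = {wsize_mib:g} MiB")
print(f"writeback interval: {default_writeback_s:g} s -> {tuned_writeback_s:g} s")
print(f"dirty expiry age:   {default_expire_s:g} s -> {tuned_expire_s:g} s")
```

Read this way, the clients use 1 MiB NFS transfers, and both server-side flush intervals are shortened to 1 second.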

There were no opaque services in use.

| Item No | Description | Data Protection | Stable Storage | Qty |
|---|---|---|---|---|
| 1 | 360 4TB HDDs form the file system data storage pool. | duplication | Yes | 1 |
| 2 | Ten 240GB SSDs form the file system metadata storage pool. | duplication | Yes | 1 |
| 3 | Two 240GB SSDs form a RAID 1 pair that stores the OS in each server and client node. | RAID-1 | Yes | 15 |
| 4 | Each server node independently uses an NVMe SSD to store log information. | None | No | 10 |

| Number of Filesystems | Total Capacity | Filesystem Type |
|---|---|---|
| 1 | 624.6 TiB | infinityfs |

INFINITY provides two data protection modes: erasure coding and replication. Users can choose the appropriate data protection strategy according to their business requirements. In this test, we chose duplication (one of the replication modes), in which every piece of data is written as two copies at the same time, so the usable capacity is half the raw disk capacity. Reserved space also holds disk metadata, so the actual usable space is less than the total disk space. The file system was created with the product default parameters.
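The capacity reasoning can be checked with a short calculation. The Python sketch below assumes the nominal decimal drive size (4 TB = 4 x 10^12 bytes) rather than the exact capacity of the drive, and it treats the whole gap between half the raw capacity and the reported 624.6 TiB as reserved space. The variable names are illustrative only.

```python
# Rough capacity check for the data storage pool (nominal drive sizes assumed).
TIB = 2**40                      # bytes per tebibyte

disk_count = 360                 # 4TB SATA HDDs in the data pool
disk_bytes = 4 * 10**12          # assumption: nominal decimal 4 TB per drive
copies = 2                       # duplication mode writes two copies
reported_tib = 624.6             # total capacity stated in this report

raw_tib = disk_count * disk_bytes / TIB
after_duplication_tib = raw_tib / copies
reserved_fraction = 1 - reported_tib / after_duplication_tib

print(f"raw capacity:      {raw_tib:.1f} TiB")
print(f"after duplication: {after_duplication_tib:.1f} TiB")
print(f"implied reserved:  {reserved_fraction:.1%}")
```

Under these assumptions, half the raw capacity comes to roughly 655 TiB, so the reported figure implies that a little under 5% is held back as reserved space.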

Ten servers form the storage cluster. Each storage server provides 36 4TB SATA HDDs (360 in total) to form the data storage pool and one 240GB SATA SSD (10 in total) to form the metadata storage pool. The two storage pools together form one file system. In addition, a separate 800GB NVMe SSD in each storage server node stores storage log information; the faster log writes improve storage performance.

| Item No | Transport Type | Number of Ports Used | Notes |
|---|---|---|---|
| 1 | 10GbE Network | 40 | Each storage server uses four 10GbE network ports; every two ports form a dual 10 Gigabit bond, mod=6. |
| 2 | 40GbE Network | 5 | Each client uses one 40GbE network port to communicate with the servers. |

Each storage server uses four 10GbE network ports, and every two ports form a dual 10 Gigabit bond. The two bonds serve as the internal data sync network and the external access network of the storage cluster. The internal network carries communication between the nodes of the cluster, and the external network carries communication with the clients. Each client uses a single 40GbE network port to communicate with the servers.

| Item No | Switch Name | Switch Type | Total Port Count | Used Port Count | Notes |
|---|---|---|---|---|---|
| 1 | Dell EMC Networking S4048-ON | 10/40GbE | 54 | 45 | The storage servers use 40 10GbE ports, and the storage clients use 5 40GbE ports. |

| Item No | Qty | Type | Location | Description | Processing Function |
|---|---|---|---|---|---|
| 1 | 20 | CPU | File System Server Nodes | Intel(R) Xeon(R) CPU E5-2630 v4 @ 2.20GHz 10-core | File System Server Nodes |
| 2 | 10 | CPU | File System Client Nodes | Intel(R) Xeon(R) CPU E5-2630 v4 @ 2.20GHz 10-core | File System Client Nodes, load generator |

There are 2 physical processors in each server and client node. Each processor has 10 cores with two threads per core. No Spectre/Meltdown patches are installed.

| Description | Size in GiB | Number of Instances | Nonvolatile | Total GiB |
|---|---|---|---|---|
| System memory on server node | 256 | 10 | V | 2560 |
| System memory on client node | 256 | 5 | V | 1280 |
| Grand Total Memory Gibibytes | | | | 3840 |

None

Infinity Cluster Storage does not use any internal memory to temporarily cache write data to the underlying storage system. All writes are committed directly to the storage disk, therefore there is no need for any RAM battery protection. Data is protected on the storage media using replicas of data. In the event of a power failure a write in transit would not be acknowledged.

The storage cluster uses large-capacity SATA HDDs to form the data storage pool and high-performance SATA SSDs to form the metadata storage pool. In addition, each storage server node uses one NVMe SSD to save storage logs. The priority of mixed read and write I/O is adjusted according to the I/O model to improve cluster storage performance under mixed I/O.

None

The storage client nodes and the server nodes are connected to the same switch. The 10 server nodes use 40 10GbE network ports: 20 serve as external service network ports for communication with the clients, and the other 20 serve as internal data network ports for communication between server nodes. The five client nodes use five 40GbE network ports. Every client generates load, the load is evenly distributed across the client nodes, and the resulting traffic is evenly distributed across the servers' 20 external network ports.
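The port counts above imply matching aggregate link capacity on both sides of the switch. The Python sketch below works this out using nominal line rates only; it ignores protocol overhead and the actual behaviour of the bonds, and it assumes the perfectly even distribution described in the text. The variable names are illustrative.

```python
# Aggregate link capacity on each side of the switch, from the stated port counts.
server_nodes = 10
external_ports_per_server = 2    # one dual-port 10GbE bond faces the clients
server_port_gbps = 10

client_nodes = 5
client_port_gbps = 40

total_streams = 2800             # SPEC SFS2014_vda result from this report

external_ports = server_nodes * external_ports_per_server
server_side_gbps = external_ports * server_port_gbps
client_side_gbps = client_nodes * client_port_gbps

# Assumption: streams spread evenly, as the description states.
streams_per_client = total_streams / client_nodes
streams_per_external_port = total_streams / external_ports

print(f"server external links: {server_side_gbps} Gb/s")
print(f"client links:          {client_side_gbps} Gb/s")
print(f"streams per client:    {streams_per_client:g}")
print(f"streams per ext. port: {streams_per_external_port:g}")
```

With these figures, the client-facing side of the servers and the clients themselves each have 200 Gb/s of nominal link capacity.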

None

None

Generated on Tue Jun 2 13:59:35 2020 by SpecReport
