SPEC SFS®2014_swbuild Result

Copyright © 2016-2019 Standard Performance Evaluation Corporation


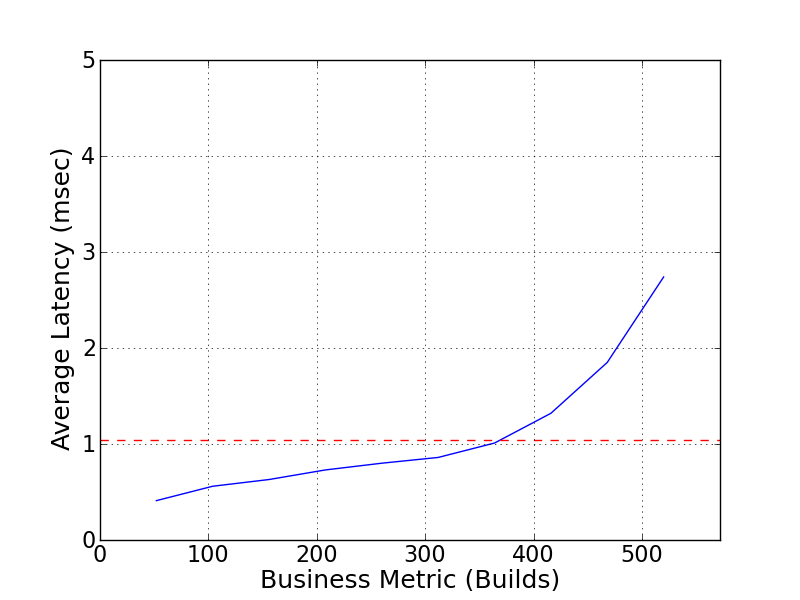

| NetApp, Inc. | SPEC SFS2014_swbuild = 520 Builds |
|---|---|
| NetApp FAS8200 with FlexGroup | Overall Response Time = 1.04 msec |

| NetApp FAS8200 with FlexGroup | |
|---|---|
| Tested by | NetApp, Inc. |
| Hardware Available | September 2017 |
| Software Available | September 2017 |
| Date Tested | August 2017 |
| License Number | 33 |
| Licensee Locations | Sunnyvale, CA USA |

Powered by ONTAP and optimized for scale, the FAS8200 hybrid-flash storage system enables you to quickly respond to changing needs across flash, disk, and cloud with industry-leading data management. FAS8200 systems, with integrated NVMe memory for flash acceleration, are engineered to deliver on core IT requirements for high performance and scalability as well as uptime, data protection, and cost-efficiency.

The FlexGroup feature of ONTAP 9 enables you to massively scale in a single namespace to over 20PB with over 400 billion files while evenly spreading the performance across the cluster. This makes the FAS8200 a great system for engineering and design applications as well as DevOps, especially workloads for chip development and software builds that are typically high file-count environments with high metadata traffic.

| Item No | Qty | Type | Vendor | Model/Name | Description |
|---|---|---|---|---|---|
| 1 | 2 | Storage Array | NetApp | FAS8200 Hybrid Flash System (HA Pair, Active-Active Dual Controller) | A single NetApp FAS8200 system is a single chassis with 2 controllers. Each set of 2 controllers comprises a High-Availability (HA) Pair. The words "controller" and "node" are used interchangeably in this document. Each FAS8200 HA Pair includes 256GB of ECC memory, 4 PCIe expansion slots and a set of onboard I/O ports: * 8 UTA2 ports configured as 10GbE, used for data (connections to load generators); * 4 10GbE ports, used for cluster interconnect; * 4 10GbE Base-T ports; * 8 12Gb SAS ports, used to connect to disk shelves. Included Premium Bundle which includes All Protocols, SnapRestore, SnapMirror, SnapVault, FlexClone, SnapManager Suite, Single Mailbox Recovery (SMBR), SnapCenter Foundation. Only NFS protocol license is active in the test, also available in the BASE bundle. |
| 2 | 12 | Disk Shelves | NetApp | Disk Shelf DS212C | 12 drive bays in each shelf; 6 shelves per HA Pair |
| 3 | 4 | 12Gb SAS HBA | NetApp | X2069-R6 HBA SAS 3/6/12Gbps QSFP PCIe | Used for connectivity to disk shelves; 2 cards per HA pair; each card has 4 12Gb SAS ports. In the tested configuration, the on-board SAS ports were unused (connected to unused and inactive drive shelves), due to the configuration being part of a shared-infrastructure lab. No benchmark data flowed to or through the unused drive shelves. The PCIe-slot SAS HBA card was used to connect to the disk shelves mentioned above, which were an active part of the tested configuration. |
| 4 | 4 | Network Interface Card | NetApp | X1117A-EN-R6 NIC 2-port bare cage SFP+ 10GbE PCIe Card | Used for cluster interconnect; 2 cards per HA pair; each card has 2 ports |
| 5 | 53 | SFP Transceiver | Cisco | Cisco Avago 10Gbase-SR part SFBR-7702SDZ | Used in both Switches; 21 to clients, 16 to data ports on storage controllers, and 16 to cluster interconnect on storage controllers |
| 6 | 21 | SFP Transceiver | NetApp | 10GbE SFP X6569-R6 | Used in client NIC for data connection to switch |
| 7 | 24 | SFP Transceiver | NetApp | 10GbE SFP X6599A-R6 | Used in Controllers for onboard 10Gb data connections; 8 per HA pair, plus this part was used in 4 onboard Cluster Interconnect ports per HA pair. |
| 8 | 8 | SFP Transceiver | NetApp | SFP X6569-R6 | Used in Controllers for 10GbE NIC card for cluster interconnect; 4 per HA pair |
| 9 | 8 | NVMe Flash Cache | NetApp | Flash Cache X3311A | 1024 GB NVMe PCIe M.2 Flash Cache Modules, 4 per HA pair |
| 10 | 144 | Disk Drives | NetApp | 4TB Disk X336A | 4TB 7200 RPM 12Gb SAS 3.5 inch HDD; 72 disks per HA pair (including 2 spares) |
| 11 | 1 | Switch | Cisco | Cisco Nexus 7018 Switch | Used for 10GbE data connections between clients and storage systems. Large switch in use due to testing having been done in a large shared-infrastructure lab. Only the ports used for this test are listed in this report. |
| 12 | 21 | Client | IBM | IBM 3650m3 | IBM server, each with 24GB main memory. 1 used as Prime Client; 20 used to generate the workload |
| 13 | 1 | Switch | Cisco | Cisco Nexus 5596 | Used for 10GbE cluster interconnections |
| 14 | 5760 | Software Enablement/License | NetApp | OS-ONTAP1-CAP1-PREM-2P | ONTAP Enablement Fee, Per 0.1TB, Capacity-based License |

| Item No | Component | Type | Name and Version | Description |
|---|---|---|---|---|
| 1 | Linux | OS | Red Hat Enterprise Linux 6.6 for x86_64 | OS for the 21 clients |
| 2 | ONTAP | Storage OS | 9.2 | Storage Operating System |
| 3 | Data Switch | Operating System | 6.2(16) | Cisco switch NX-OS (kickstart and system software) |
| 4 | Cluster Switch | Operating System | 7.3(1) | Cisco switch NX-OS (kickstart and system software) |

Storage

| Parameter Name | Value | Description |
|---|---|---|
| MTU | 9000 | Jumbo Frames configured for Cluster Interconnect ports |

NetApp FAS8200 storage controller 10Gb Ethernet ports used for cluster interconnections (8 per HA pair) are set up with MTU of 9000, Jumbo Frames.

Clients

| Parameter Name | Value | Description |
|---|---|---|
| rsize,wsize | 65536 | NFS mount options for data block size |
| protocol | tcp | NFS mount options for protocol |
| nfsvers | 3 | NFS mount options for NFS version |
| somaxconn | 65536 | Max tcp backlog an application can request |
| tcp_fin_timeout | 5 | TCP time to wait for final packet before socket closed |
| tcp_slot_table_entries | 128 | number of simultaneous TCP Remote Procedure Call (RPC) requests |
| tcp_max_slot_table_entries | 128 | number of simultaneous TCP Remote Procedure Call (RPC) requests |
| udp_slot_table_entries | 128 | number of simultaneous UDP Remote Procedure Call (RPC) requests |
| tcp_rmem | 4096 87380 8388608 | receive buffer size: min, default, max |
| tcp_wmem | 4096 87380 8388608 | send buffer size: min, default, max |
| aio-max-nr | 1048576 | maximum allowed number of events in all active async I/O contexts |
| netdev_max_backlog | 300000 | max number of packets allowed to queue |

The parameters shown above were tuned for communication between clients and storage controllers over 10Gb Ethernet, to optimize data transfer and minimize overhead.
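The client tuning values can be expressed as kernel settings and an NFS mount option string. The following is a minimal sketch rather than the configuration files used in the test. The report lists only short parameter names, so the fully qualified sysctl keys (`net.core.*`, `net.ipv4.*`, `sunrpc.*`, `fs.*`) and the `proto` spelling of the protocol mount option are assumptions based on standard Linux naming.

```python
# Values are copied from the client tuning table. The key prefixes are
# assumptions (standard Linux sysctl names), not stated in the report.
SYSCTL_SETTINGS = {
    "net.core.somaxconn": "65536",
    "net.ipv4.tcp_fin_timeout": "5",
    "sunrpc.tcp_slot_table_entries": "128",
    "sunrpc.tcp_max_slot_table_entries": "128",
    "sunrpc.udp_slot_table_entries": "128",
    "net.ipv4.tcp_rmem": "4096 87380 8388608",
    "net.ipv4.tcp_wmem": "4096 87380 8388608",
    "fs.aio-max-nr": "1048576",
    "net.core.netdev_max_backlog": "300000",
}

# "proto" is the assumed mount-option name for the "protocol" table row.
NFS_MOUNT_OPTIONS = {"rsize": 65536, "wsize": 65536, "proto": "tcp", "nfsvers": 3}


def sysctl_conf_lines(settings):
    """Render settings as 'key = value' lines in sysctl.conf style."""
    return [f"{key} = {value}" for key, value in settings.items()]


def mount_option_string(options):
    """Join mount options into the comma-separated form passed to mount -o."""
    return ",".join(f"{name}={value}" for name, value in options.items())


if __name__ == "__main__":
    print("\n".join(sysctl_conf_lines(SYSCTL_SETTINGS)))
    print(mount_option_string(NFS_MOUNT_OPTIONS))
```

Keeping the values in one mapping makes it easier to compare a client's live settings against the disclosed ones.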

None

| Item No | Description | Data Protection | Stable Storage | Qty |
|---|---|---|---|---|
| 1 | 4TB 7200 RPM Drives used for data; 2x 14+2 RAID-DP RAID groups per storage controller node in the cluster | RAID-DP | Yes | 128 |
| 2 | 4TB 7200 RPM drives used by ONTAP Operating system; 1x 1+2 RAID-DP RAID group per storage controller node in the cluster | RAID-DP | Yes | 12 |
| 3 | 128GB mSATA flash cards, 2 per HA pair; used as boot media and also for de-staging NVMEM data from main memory to flash in event of failure condition | none | Yes | 4 |

| Number of Filesystems | Total Capacity | Filesystem Type |
|---|---|---|
| 1 | 330.4 TiB | NetApp FlexGroup |

Each element (RAID Groups, aggregates) comprising the exported FlexGroup, along with the FlexGroup itself, was created using default values.

The storage configuration consisted of 2 FAS8200 HA pairs (4 FAS8200 controller nodes total). The two controllers in each HA pair were connected in an SFO (storage failover) configuration. Together, all 4 controllers (configured as 2 HA Pairs) comprise the tested FAS8200 HA cluster. Stated in the reverse, the tested FAS8200 HA cluster consists of 2 HA Pairs, each of which consists of 2 controllers (also referred to as nodes).

Each storage controller was connected to its own and partner's disks in a multi-path HA configuration. Each storage controller was the primary owner of 36 disks, distributed across 6 drive shelves. Each storage controller was configured with 3 RAID groups. One aggregate was created on each RAID Group. The first two aggregates on each node held data for the file system. They were configured as 14+2 RAID-DP RAID groups. The third aggregate, built on a 1+2 RAID-DP RAID Group and referred to as the "root aggregate", on each controller, held ONTAP operating system related files. Additionally, each storage controller was allocated 1 spare disk, for a total of 4 spare disks in the tested FAS8200 HA cluster (note that the spare drives are not included in the "storage and filesystems" table because they held no data during the benchmark execution).

A storage virtual machine or "SVM" was created on the cluster, spanning all storage controller nodes. Within the SVM, a single FlexGroup volume was created using the two data aggregates on each controller. A FlexGroup volume is a scale-out NAS container that provides high performance along with automatic load distribution and scalability.
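The disk counts in this report can be cross-checked with a few lines of arithmetic. The sketch below rebuilds the per-node and cluster-wide totals from the RAID layout described above. The final step, which relates the 5760 license units in the bill of materials to raw drive capacity, is an assumption about how the capacity license is counted. The report does not state it.

```python
# Disk-layout figures are taken from the report text. Treating the license
# quantity as raw drive capacity in 0.1 TB units is an assumption.
NODES = 4                     # 2 HA pairs x 2 controllers
DATA_GROUPS_PER_NODE = 2      # two 14+2 RAID-DP groups per node
DATA_GROUP_DISKS = 14 + 2
ROOT_GROUP_DISKS = 1 + 2      # one 1+2 RAID-DP root group per node
SPARES_PER_NODE = 1
DRIVE_TB = 4
LICENSE_UNITS_PER_TB = 10     # license is listed "Per 0.1TB"


def disk_totals():
    """Return cluster-wide disk counts by role, plus the overall total."""
    data = NODES * DATA_GROUPS_PER_NODE * DATA_GROUP_DISKS
    root = NODES * ROOT_GROUP_DISKS
    spares = NODES * SPARES_PER_NODE
    return {"data": data, "root": root, "spares": spares,
            "total": data + root + spares}


def license_units(total_disks):
    """Number of 0.1 TB units covering the raw capacity of all drives."""
    return total_disks * DRIVE_TB * LICENSE_UNITS_PER_TB


if __name__ == "__main__":
    totals = disk_totals()
    print(totals)
    print("disks per node:", totals["total"] // NODES)
    print("license units:", license_units(totals["total"]))
```

Under these assumptions the totals agree with the 128 data drives, 12 root drives, 144 drives overall, and 36 disks per controller stated in the report.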

| Item No | Transport Type | Number of Ports Used | Notes |
|---|---|---|---|
| 1 | 10GbE | 36 | For the client-to-storage network, the FAS8200 Cluster used a total of 16 10GbE connections from storage, communicating with NFSv3 over TCP/IP to 20 clients. The benchmark was conducted in a large shared-infrastructure lab; only the ports shown and documented were used on the Cisco Nexus 7018 switch for this benchmark test. |
| 2 | 10GbE | 16 | The Cluster Interconnect network is connected via 10GbE to a Cisco 5596 switch, with 8 connections to each HA pair. |

Each NetApp FAS8200 HA Pair used 8 10Gb Ethernet ports for data transport connectivity to clients (through the Cisco 7018 switch), Item 1 above. Each of the 20 clients driving workload used one 10Gb Ethernet port for data transport. All ports on the Item 1 network had MTU=1500 (default). The Cluster Interconnect network, Item 2 above, utilized MTU=9000 (Jumbo Frames).

| Item No | Switch Name | Switch Type | Total Port Count | Used Port Count | Notes |
|---|---|---|---|---|---|
| 1 | Cisco Nexus 7018 | 10Gb Ethernet Switch | 48 | 37 | 21 client-side data connections; 16 storage-side data connections. Only ports on Cisco Nexus 7018 Ethernet Modules needed for solution under test are included in Total Port Count. |
| 2 | Cisco Nexus 5596 | 10Gb Ethernet Switch | 96 | 16 | For Cluster Interconnect; MTU=9000 |
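The transport table counts 36 data ports while the switch table counts 37 used ports on the Nexus 7018. The sketch below shows one reading that reconciles the two. The transport figure covers the 16 storage ports and the 20 load-generating clients. The switch figure also includes the Prime Client's connection. The attribution of the extra port to the Prime Client is inferred from the notes in the two tables and is not stated explicitly in the report.

```python
# Port figures are taken from the transport and switch tables.
HA_PAIRS = 2
DATA_PORTS_PER_HA_PAIR = 8
CLUSTER_PORTS_PER_HA_PAIR = 8
LOAD_CLIENTS = 20
PRIME_CLIENTS = 1


def port_counts():
    """Return the data and cluster port counts implied by the topology."""
    storage_data = HA_PAIRS * DATA_PORTS_PER_HA_PAIR
    return {
        # Transport table: storage ports plus load-generating clients.
        "transport_data": storage_data + LOAD_CLIENTS,
        # Switch table: the same ports plus the Prime Client (inferred).
        "switch_data": storage_data + LOAD_CLIENTS + PRIME_CLIENTS,
        "cluster": HA_PAIRS * CLUSTER_PORTS_PER_HA_PAIR,
    }


if __name__ == "__main__":
    print(port_counts())
```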

| Item No | Qty | Type | Location | Description | Processing Function |
|---|---|---|---|---|---|
| 1 | 4 | CPU | Storage Controller | 1.70 GHz Intel Xeon D-1587 | NFS, TCP/IP, RAID and Storage Controller functions |
| 2 | 21 | CPU | Client | 3.06 GHz Intel Xeon x5675 | NFS Client, RedHat Linux OS |

Each NetApp FAS8200 Storage Controller contains 1 Intel Xeon D-1587 processor with 16 cores at 1.70 GHz. Each client contains 1 Intel Xeon x5675 processor with 6 cores at 3.06 GHz.

| Description | Size in GiB | Number of Instances | Nonvolatile | Total GiB |
|---|---|---|---|---|
| Main Memory for each NetApp FAS8200 HA Pair | 256 | 2 | V | 512 |
| NVMe Flash Cache memory; 4TB per HA Pair | 1024 | 8 | NV | 8192 |
| Memory for each client; 20 of these drove the workload | 24 | 21 | V | 504 |
| Grand Total Memory Gibibytes | | | | 9208 |

Each storage controller has main memory that is used for the operating system and caching filesystem data. The FlashCache module is a read cache used for caching filesystem data. See "Stable Storage" for more information.
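The grand total in the memory table is the sum of size times instance count over its three rows. A short sketch of that sum, using only figures from the table (the row labels are shortened here for readability):

```python
# (label, size in GiB, number of instances), copied from the memory table.
MEMORY_ROWS = [
    ("storage main memory per HA pair", 256, 2),
    ("NVMe Flash Cache module", 1024, 8),
    ("client memory", 24, 21),
]


def total_gib(rows):
    """Sum size x instances across all memory rows."""
    return sum(size * count for _, size, count in rows)


if __name__ == "__main__":
    print(total_gib(MEMORY_ROWS))
```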

The WAFL filesystem logs writes, and other filesystem data-modifying transactions, to a portion of controller main memory which we call NVMEM. In a storage-failover configuration (HA Pair), as in the system under test, such transactions are also logged (mirrored) to the NVMEM portion of main memory on the partner storage controller so that, in the event of a storage controller failure, any transactions on the failed controller can be completed by the partner controller. Filesystem operations are not acknowledged until after the storage system has confirmed that the related data are stored in the NVMEM portion of memory of both storage controllers (when both controllers are active). In case of power loss, an integrated battery ensures stable storage by providing power to the necessary components of the system long enough for data to be encrypted and securely flushed to an mSATA non-volatile flash device. NVMEM data does not flow over the Cluster Interconnect network. Data for NVMEM mirroring flows over an internal interconnect.

The cluster network consisted of four 10GbE ports per controller (8 for each HA pair), connected via a Cisco Nexus 5596 switch. This provides high availability for the cluster network in case of port or link failure. Each storage controller had four 10GbE ports connected to the Cisco 7018 data switch; each client had one such 10GbE connection. The data and cluster networks were separate networks. All ports and interfaces on the data network had default size 1500-byte frames configured. All ports on the cluster interconnect network had MTU size 9000 configured. All clients accessed all file-systems from all the available network interfaces.

All standard data protection features, including background RAID and media error scrubbing, software validated RAID checksum, and double disk failure protection via double parity RAID (RAID-DP) were enabled during the test.

Please reference the configuration diagram. 20 clients were used to generate the workload; 1 client acted as Prime Client to control the 20 other clients. Each client has one 10GbE connection, through a Cisco Nexus 7018 switch. Each storage HA pair had 8 10GbE connections to the data switch. The filesystem consisted of one NetApp FlexGroup. The clients mounted the FlexGroup volume as an NFSv3 filesystem. The ONTAP cluster provided access to the FlexGroup volume on every 10GbE port connected to the data switch (16 ports total). Each client created mount points across those 16 ports symmetrically.
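The symmetric mount layout described above, with each client mounting the FlexGroup once through each of the 16 data ports, can be sketched as a generated list of mount commands. This is an illustration only. The interface addresses (taken from the 192.0.2.0/24 documentation range), the export path `/flexgroup`, and the mount point prefix `/mnt/fg` are all hypothetical, because the report does not disclose them. The `proto` option name is likewise an assumed spelling of the "protocol" parameter.

```python
import ipaddress

# Mount options follow the client tuning table ("proto" is an assumed name).
MOUNT_OPTIONS = "rsize=65536,wsize=65536,proto=tcp,nfsvers=3"
DATA_PORTS = 16                                   # from the report
FIRST_LIF = ipaddress.IPv4Address("192.0.2.1")    # hypothetical address
EXPORT_PATH = "/flexgroup"                        # hypothetical export path
MOUNT_ROOT = "/mnt/fg"                            # hypothetical mount prefix


def mount_commands():
    """Build one mount command per storage data port for a single client."""
    commands = []
    for index in range(DATA_PORTS):
        lif = FIRST_LIF + index
        mount_point = f"{MOUNT_ROOT}_{index + 1:02d}"
        commands.append(
            f"mount -t nfs -o {MOUNT_OPTIONS} {lif}:{EXPORT_PATH} {mount_point}"
        )
    return commands


if __name__ == "__main__":
    for command in mount_commands():
        print(command)
```

Running the same plan on all 20 load-generating clients gives every client one path through every storage data port.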

None

NetApp is a registered trademark and "Data ONTAP", "FlexGroup", and "WAFL" are trademarks of NetApp, Inc. in the United States and other countries. All other trademarks belong to their respective owners and should be treated as such.

Generated on Wed Mar 13 16:49:38 2019 by SpecReport
