|

|

|---|

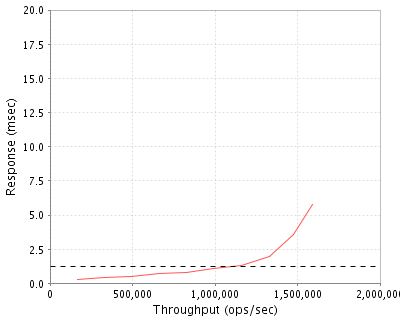

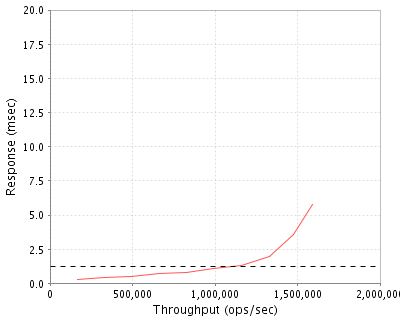

| Avere Systems, Inc. | : | FXT 3800 (32 Node Cluster, Cloud Storage Config) |
|---|---|---|
| SPECsfs2008_nfs.v3 | = | 1592334 Ops/Sec (Overall Response Time = 1.24 msec) |

| Tested By | Avere Systems, Inc. |
|---|---|
| Product Name | FXT 3800 (32 Node Cluster, Cloud Storage Config) |
| Hardware Available | July 2013 |
| Software Available | July 2013 |
| Date Tested | March 18, 2013 |
| SFS License Number | 9020 |
| Licensee Locations | Pittsburgh, PA, USA |

The Avere Systems FXT 3800 Edge filer appliance provides Hybrid NAS storage that allows performance to scale independently of capacity. The FXT 3800 is built on a 64-bit architecture managed by Avere OS software. FXT Edge filer clusters scale to as many as 50 nodes, support millions of I/O operations per second, and deliver over 100 GB/s of bandwidth. The Avere OS Hybrid NAS software dynamically organizes data into RAM, SSD, and SAS tiers: active data is placed on the FXT Edge filer, while inactive data is placed on a Core filer optimized for capacity. The FXT 3800 accelerates all read, write, and directory/metadata operations and supports both the NFSv3 and CIFS protocols. The Avere OS Local Directories feature lets the Edge filer cluster acknowledge all filesystem updates immediately and flush the directory data and user data to the Core filer later.

The tested configuration consisted of (32) FXT 3800 Edge filer nodes and (1) OpenSolaris ZFS server acting as a single Core filer mass storage system that exported a single filesystem. A WAN simulation kernel module was configured into Avere OS to add 150 milliseconds of round-trip latency (75 milliseconds in each direction) to all communication with the Core filer, simulating a cloud storage infrastructure. In this configuration, Avere OS presents a single filesystem to all clients and shields them from the 150 millisecond round-trip time to the Core filer: the overall response time measured in this test was 1.24 msec.
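The following back-of-envelope sketch uses Little's law to show why hiding the Core filer round trip matters. Little's law states that the average number of operations in flight equals throughput times response time. The sketch applies it to the reported 1592334 Ops/Sec result and the 1.24 msec response time. It then makes a hypothetical comparison: every operation waiting one full 150 ms round trip. That assumption is for illustration only and does not describe how the tested system behaves.

```python
# Little's law: L = lambda * W
#   L      = average number of operations in flight
#   lambda = throughput (ops/sec)
#   W      = average response time (seconds)

THROUGHPUT_OPS = 1_592_334  # reported SPECsfs2008_nfs.v3 result
MEASURED_RT_S = 1.24e-3     # reported overall response time (1.24 msec)
WAN_RTT_S = 0.150           # simulated Core filer round trip (75 ms each way)


def outstanding_ops(throughput: float, response_time_s: float) -> float:
    """Average concurrency implied by Little's law."""
    return throughput * response_time_s


measured = outstanding_ops(THROUGHPUT_OPS, MEASURED_RT_S)
# Hypothetical case: every operation pays one full WAN round trip.
if_synchronous = outstanding_ops(THROUGHPUT_OPS, WAN_RTT_S)

print(f"in flight at measured latency: {measured:,.0f}")          # 1,974
print(f"in flight if each op paid the WAN RTT: {if_synchronous:,.0f}")  # 238,850
print(f"ratio: {if_synchronous / measured:.0f}x")                  # 121x
```

At the measured latency, about two thousand operations are in flight on average. Sustaining the same rate while paying the WAN round trip on every operation would need roughly 121 times as many.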

| Item No | Qty | Type | Vendor | Model/Name | Description |
|---|---|---|---|---|---|
| 1 | 32 | Storage Appliance | Avere Systems, Inc. | FXT 3800 | Avere Systems Hybrid NAS Edge filer appliance running Avere OS V3.0 software. Includes (13) 600 GB SAS Disks and (2) 400GB SSD Drives. |
| 2 | 1 | Server Chassis | Genstor | CSRM-4USM24ASRA | 4U 24-Drive Chassis for OpenSolaris Core filer storage NFS Server. |
| 3 | 1 | Server Motherboard | Genstor | MOBO-SX8DTEF | Supermicro X8DTE Motherboard for OpenSolaris Core filer storage NFS Server. |
| 4 | 2 | CPU | Genstor | CPUI-X5620E | Intel Westmere Quad Core 2.4 GHz 12MB L3 CPU for OpenSolaris Core filer storage NFS Server. |
| 5 | 2 | CPU Heat Sink | Genstor | CPUI-2USMP0038P | SM 2U 3U P0038P Heat Sink for OpenSolaris Core filer storage NFS Server. |
| 6 | 12 | Memory | Arrow | MT36JSZF1G72PZ-1GD1 | Micron DRAM Module DDR3 SDRAM 8GByte 240 RDIMM for OpenSolaris Core filer storage NFS Server. |
| 7 | 1 | Disk Drive | Seagate | ST500NM0011 | Seagate 500GB Constellation ES.3 SATA2 System Disk for OpenSolaris Core filer storage NFS Server. |
| 8 | 69 | Disk Drive | Seagate | ST4000NM0033 | Seagate 4TB Constellation ES.3 SATA2 Disks for OpenSolaris Core filer storage NFS Server. |
| 9 | 1 | RAID Controller | LSI | LSI00209 | LSI 4 internal/4 external port 6Gb/s SATA+SAS RAID card, x8 PCIe 2.0 host interface for OpenSolaris Core filer storage NFS Server. |
| 10 | 2 | JBOD Disk Shelf | Supermicro | 846BE16-R920B | Supermicro 4U 24-Drive JBOD Chassis with SAS2 Expander for OpenSolaris Core filer storage NFS Server. |
| 11 | 2 | JBOD Disk Shelf | Supermicro | CSE-PTJBOD-CB1 | JBOD Storage Power-up Control Board for OpenSolaris Core filer storage NFS Server JBOD Disk Shelf. |
| 12 | 1 | SAS Cabling | Supermicro | CBL-0108L-02 | iPass (mini SAS) to iPass (mini SAS) SAS controller to internal backplane connection for OpenSolaris Core filer storage NFS Server. |
| 13 | 2 | SAS Cabling | Supermicro | CBL-0166L | SAS BP External Cascading Cable for OpenSolaris Core filer storage NFS Server JBOD Disk Shelf. |
| 14 | 3 | SAS Cabling | Supermicro | CBL-0167L | SAS 936EL1 BP 1-Port Internal Cascading Cable for OpenSolaris Core filer storage NFS Server JBOD Disk Shelf. |
| 15 | 1 | Network Card | Intel | E10G42BTDA | Intel 10 Gigabit AF DA Dual Port Server Adapter for OpenSolaris Core filer storage NFS Server. |

| OS Name and Version | Avere OS V3.0 |
|---|---|
| Other Software | The Core filer storage server runs OpenSolaris 5.11 snv_134; this package is available for download at http://okcosug.org/osol-dev-134-x86.iso |
| Filesystem Software | Avere OS V3.0 |

| Name | Value | Description |
|---|---|---|
| Writeback Time | 6 hours | Files may be modified up to 6 hours before being written back to the Core filer storage system. |
| buf.autoTune | 0 | Statically size FXT memory caches. |
| buf.neededCleaners | 16 | Allow 16 buffer cleaner tasks to be active. |
| buf.InitialBalance.smallFilePercent | 57 | Tune smallFile buffers to use 57 percent of memory pages. |
| buf.InitialBalance.largeFilePercent | 10 | Tune largeFile buffers to use 10 percent of memory pages. |
| cfs.pagerFillRandomWindowSize | 32 | Increase the size of random IOs from disk. |
| cluster.dirmgrNum | 128 | Create 128 directory manager instances. |
| cluster.dirMgrConnMult | 5 | Multiplex directory manager network connections. |
| dirmgrSettings.unflushedFDLRThresholdToStartFlushing | 5000000 | Increase the directory log size. |
| dirmgrSettings.balanceAlgorithm | 1 | Balance directory manager objects across the cluster. |
| tokenmgrs.geoXYZ.fcrTokenSupported | no | Disable the use of full control read tokens. |
| tokenmgrs.geoXYZ.trackContentionOn | no | Disable token contention detection. |
| tokenmgrs.geoXYZ.lruTokenThreshold | 14000000 | Set a threshold for token recycling. |
| tokenmgrs.geoXYZ.maxTokenThreshold | 14100000 | Set maximum token count. |
| tokenmgrs.geoXYZ.tkPageCleaningStrictOrder | no | Optimize cluster data manager to write pages in parallel. |
| vcm.readdir_readahead_mask | 0x700f | Optimize readdir performance. |
| vcm.vcm_enableVdiskCacheingFsstats | 2 | Cache fsstat information at the vcm vdisk layer. |
| vcm.vcm_fsstatCachePeriod | 21600 | Cache fsstat information for the specified duration in seconds (21600 s = 6 hours). |
| initcfg:cfs.num_inodes | 20000000 | Increase in-memory inode structures. |
| initcfg:vcm.fh_cache_entries | 14000000 | Increase in-memory filehandle cache structures. |
| initcfg:vcm.name_cache_entries | 15000000 | Increase in-memory name cache structures. |
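The Writeback Time setting bounds how long a modified file may remain only on the Edge filer. Six hours is 21600 seconds, the same value used for `vcm.vcm_fsstatCachePeriod`. Below is a minimal sketch of an age-based flush policy under that limit. The `DirtyTracker` class and its methods are hypothetical illustrations, not Avere OS interfaces. The sketch also assumes that the clock starts at a file's first unflushed modification, and it models only the age limit.

```python
WRITEBACK_LIMIT_S = 6 * 60 * 60  # 6 hours (21600 s), the Writeback Time setting


class DirtyTracker:
    """Hypothetical tracker recording when each file first became dirty."""

    def __init__(self, limit_s: float = WRITEBACK_LIMIT_S) -> None:
        self.limit_s = limit_s
        self.first_dirty: dict[str, float] = {}

    def mark_dirty(self, path: str, now: float) -> None:
        # Keep the earliest unflushed modification; later writes do not reset the clock.
        self.first_dirty.setdefault(path, now)

    def due_for_writeback(self, now: float) -> list[str]:
        """Files whose oldest unflushed change has reached the age limit."""
        return sorted(p for p, t in self.first_dirty.items() if now - t >= self.limit_s)

    def flushed(self, path: str) -> None:
        # Called once the Core filer has confirmed the write.
        self.first_dirty.pop(path, None)


tracker = DirtyTracker()
tracker.mark_dirty("/vol0/wanboi4/a", now=0)
tracker.mark_dirty("/vol0/wanboi4/b", now=3600)
tracker.mark_dirty("/vol0/wanboi4/a", now=7200)  # does not postpone the deadline for "a"
print(tracker.due_for_writeback(now=21600))  # ['/vol0/wanboi4/a']
```

Keying the deadline to the first unflushed change is what makes the limit a hard bound. Resetting it on every write would let a frequently modified file stay unflushed indefinitely.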

Three 21+2 RAID6 (RAIDZ2) sets were created in a single zpool on the OpenSolaris Core filer storage server using the following commands:

```sh
zpool create vol0 raidz2 <drive1-23 4TB from shelf1>
zpool add vol0 raidz2 <drive1-23 4TB from shelf2>
zpool add vol0 raidz2 <drive1-23 4TB from shelf3>
zfs create -o quota=215t -o sharenfs=root=@0.0.0.0/0 vol0/wanboi4
```
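The three `zpool` commands above build one pool from three 23-drive RAIDZ2 vdevs. The sketch below works through the resulting data-drive count and the pool's failure tolerance. It assumes the standard RAIDZ2 property that a vdev survives any two drive failures. It also assumes that losing any one vdev loses the striped pool, and it ignores ZFS metadata and padding overhead.

```python
VDEVS = 3             # one raidz2 vdev per shelf
DRIVES_PER_VDEV = 23
PARITY_PER_VDEV = 2   # RAIDZ2 = double parity (21+2)


def pool_survives(failures_per_vdev: list[int]) -> bool:
    """The striped pool stays available only if every vdev is within its parity budget."""
    return all(f <= PARITY_PER_VDEV for f in failures_per_vdev)


total_drives = VDEVS * DRIVES_PER_VDEV
data_drives = VDEVS * (DRIVES_PER_VDEV - PARITY_PER_VDEV)
print(total_drives, data_drives, f"{data_drives / total_drives:.1%}")  # 69 63 91.3%
print(pool_survives([2, 2, 2]))  # True: six failures, two per vdev
print(pool_survives([3, 0, 0]))  # False: three failures in one vdev
```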

| Description | Number of Disks | Usable Size |
|---|---|---|
| Each FXT 3800 node contains (13) 600 GB 10K RPM SAS disks. FXT data resides on these disks. | 416 | 227.0 TB |
| Each FXT 3800 node contains (2) 400 GB eMLC SSD disks. FXT data also resides on these disks. | 64 | 11.7 TB |
| Each FXT 3800 node contains (1) 250 GB SATA system disk. | 32 | 7.3 TB |
| The Core filer storage system contains (69) 4 TB SATA disks. The ZFS file system manages these disks, and the FXT nodes access the resulting filesystem via NFSv3. | 69 | 229.2 TB |
| The Core filer storage system contains (1) 500 GB system disk. | 1 | 465.7 GB |
| Total | 582 | 475.6 TB |
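The Usable Size figures appear to use binary units: drives' decimal capacities converted at 1 TB = 2^40 bytes and 1 GB = 2^30 bytes. The sketch below reproduces several rows under that assumption. For the Core filer row, it counts only the 63 data drives of the three 21+2 RAIDZ2 sets. The SSD row is not reproduced, because it does not follow directly from the raw drive capacity under this conversion.

```python
TIB = 2**40  # bytes per binary terabyte
GIB = 2**30  # bytes per binary gigabyte


def to_tib(drives: int, bytes_per_drive: float) -> float:
    """Decimal drive capacity summed and expressed in binary terabytes."""
    return drives * bytes_per_drive / TIB


rows = {
    "FXT SAS (416 x 600 GB)": to_tib(416, 600e9),
    "FXT system (32 x 250 GB)": to_tib(32, 250e9),
    "Core filer data (63 of 69 x 4 TB)": to_tib(63, 4e12),
}
for name, tib in rows.items():
    print(f"{name}: {tib:.1f} TB")   # 227.0, 7.3, 229.2
print(f"Core filer system disk: {500e9 / GIB:.1f} GB")  # 465.7
```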

| Number of Filesystems | 1 |
|---|---|
| Total Exported Capacity | 223517.4 GB (OpenSolaris Core filer storage system capacity) |
| Filesystem Type | TFS (Tiered File System) |
| Filesystem Creation Options | Default on FXT nodes. OpenSolaris Core filer storage server ZFS filesystem created with commands: `zpool create vol0 raidz2 <drive1-23 4TB from shelf1>`; `zpool add vol0 raidz2 <drive1-23 4TB from shelf2>`; `zpool add vol0 raidz2 <drive1-23 4TB from shelf3>`; `zfs create -o quota=215t -o sharenfs=root=@0.0.0.0/0 vol0/wanboi4` |
| Filesystem Config | 21+2 RAID6 (RAIDZ2) configuration on OpenSolaris Core filer storage server, with 3 RAID sets in the zpool. |
| Fileset Size | 193375.3 GB |

| Item No | Network Type | Number of Ports Used | Notes |
|---|---|---|---|
| 1 | 10 Gigabit Ethernet | 32 | One 10 Gigabit Ethernet port used for each FXT 3800 Edge filer appliance. |
| 2 | 10 Gigabit Ethernet | 1 | The Core filer storage system is connected via 10 Gigabit Ethernet with simulated latency of 150 ms (75 ms each direction). |

Each FXT 3800 was attached via a single 10 GbE port to one Gnodal GS7200 72-port 10 GbE switch. The load-generating clients and the Core filer storage server were attached to the same switch, each via a single 10 GbE port. A WAN simulation software module on the FXT 3800 system was configured to inject 150 ms of WAN latency (75 ms in each direction) between the Avere Edge filer cluster and the Core filer. A 1500-byte MTU was used for all connections to the switch.

The SUT was configured with 32 separate IP addresses on one subnet. Each cluster node was connected via a 10 GbE NIC and hosted one IP address.
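The Core filer sits behind a single 10 GbE link with a 150 ms simulated round trip, so keeping that link busy requires a large amount of data in flight. The bandwidth-delay product below is a standard back-of-envelope estimate. It ignores protocol and header overhead, and it assumes the link, rather than the Core filer's disks, is the bottleneck.

```python
LINK_BITS_PER_S = 10e9  # single 10 GbE link to the Core filer
RTT_S = 0.150           # simulated round trip (75 ms each direction)
MTU_BYTES = 1500        # MTU used throughout the network

# Bandwidth-delay product: bytes that must be in flight to keep the link full.
bdp_bytes = LINK_BITS_PER_S / 8 * RTT_S
print(f"bytes in flight to fill the link: {bdp_bytes / 1e6:.1f} MB")  # 187.5 MB
print(f"MTU-sized packets in flight: {bdp_bytes / MTU_BYTES:,.0f}")   # 125,000
```

A single request/response stream waiting on each round trip would leave this link mostly idle. That suggests why acknowledging updates on the Edge filer cluster and flushing to the Core filer in bulk suits this topology.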

| Item No | Qty | Type | Description | Processing Function |
|---|---|---|---|---|
| 1 | 64 | CPU | Intel Xeon CPU E5645 2.40 GHz Hex-Core Processor | FXT 3800 Avere OS, Network, NFS/CIFS, Filesystem, Device Drivers |
| 2 | 2 | CPU | Intel Xeon E5620 2.40 GHz Quad-Core Processor | OpenSolaris Core filer storage system |

Each FXT 3800 node and the Core filer storage server has two physical processors.

| Description | Size in GB | Number of Instances | Total GB | Nonvolatile |
|---|---|---|---|---|
| FXT 3800 System Memory | 144 | 32 | 4608 | V |
| Core filer storage system memory | 96 | 4 | 384 | V |
| FXT 3800 NVRAM | 2 | 32 | 64 | NV |
| Grand Total Memory Gigabytes | | | 5056 | |

Each FXT node has main memory that is used for the operating system and for caching filesystem data. Each FXT contains two (2) super-capacitor-backed NVRAM modules used to provide stable storage for writes that have not yet been written to disk.

The Avere filesystem logs writes and metadata updates to the PCIe NVRAM modules. Filesystem-modifying NFS operations are not acknowledged until the data has been safely stored in NVRAM. If primary power to the system under test (SUT) is lost, the charged super-capacitor powers the NVRAM circuitry long enough to commit the NVRAM log data to persistent flash memory on the NVRAM card, where it is retained indefinitely. When power is restored to the SUT, the log data is copied from the on-board flash back into the NVRAM subsystem, and during startup Avere OS replays it to the on-disk filesystem, ensuring a stable storage implementation. Cascading power failures are handled the same way: on each power failure the NVRAM contents are persisted to flash, and on each power-on the log is restored from flash and replayed to the filesystem.
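The persistence sequence described above can be modeled as a small state machine. The sketch below is a hypothetical illustration, not Avere OS code. `NvramLog`, `power_fail`, and `power_on` are invented names, and the "flash" is an in-memory copy. Replay is made idempotent by tracking the highest applied sequence number. That is one common way to keep repeated replays after cascading failures safe; the report does not say which mechanism Avere OS uses.

```python
from dataclasses import dataclass, field

Entry = tuple[int, str, bytes]  # (sequence number, path, data)


@dataclass
class NvramLog:
    """Hypothetical model of a super-capacitor-backed NVRAM write log."""

    nvram: list[Entry] = field(default_factory=list)      # contents lost without power
    flash: list[Entry] = field(default_factory=list)      # on-board flash, survives power loss
    disk: dict[str, bytes] = field(default_factory=dict)  # on-disk filesystem
    applied_seq: int = 0  # highest log entry already replayed to disk
    next_seq: int = 1

    def write(self, path: str, data: bytes) -> str:
        # Log the update first; only then is the NFS operation acknowledged.
        self.nvram.append((self.next_seq, path, data))
        self.next_seq += 1
        return "ACK"

    def power_fail(self) -> None:
        # Super-capacitor energy lets the card copy the NVRAM contents to flash.
        self.flash = list(self.nvram)
        self.nvram = []

    def power_on(self) -> None:
        # Restore the log from flash, then replay entries not yet applied.
        # Skipping already-applied sequence numbers keeps repeated replays safe.
        self.nvram = list(self.flash)
        for seq, path, data in self.nvram:
            if seq > self.applied_seq:
                self.disk[path] = data
                self.applied_seq = seq


log = NvramLog()
log.write("/vol0/wanboi4/f", b"v1")
log.power_fail()
log.power_on()
log.power_fail()  # a second, cascading failure before the log is trimmed
log.power_on()
print(log.disk)  # {'/vol0/wanboi4/f': b'v1'}
```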

The system under test consisted of (32) Avere FXT 3800 nodes, each attached to the network via 10 Gigabit Ethernet. Each FXT 3800 node contains (13) 600 GB SAS disks and (2) 400 GB eMLC SSD drives. The OpenSolaris Core filer storage system was attached to the network via a single 10 Gigabit Ethernet link. The Core filer storage server was a 4U Supermicro server holding (23) 4 TB SATA disks configured as a 21+2 RAIDZ2 (RAID6) software array. Two additional JBOD shelves, each with (23) drives, were connected to the Core filer via SAS. The single ZFS pool consisted of (3) RAIDZ2 sets totaling (69) 4 TB SATA disks.


| Item No | Qty | Vendor | Model/Name | Description |
|---|---|---|---|---|
| 1 | 16 | Supermicro | SYS-1026T-6RFT+ | Supermicro Server with 48GB of RAM running CentOS 6.4 (Linux 2.6.32-358.0.1.el6.x86_64) |
| 2 | 1 | Gnodal | GS7200 | Gnodal 72 Port 10 GbE Switch. 72 SFP/SFP+ ports |

| LG Type Name | LG1 |
|---|---|
| BOM Item # | 1 |
| Processor Name | Intel Xeon E5645 2.40GHz Hex-Core Processor |
| Processor Speed | 2.40 GHz |
| Number of Processors (chips) | 2 |
| Number of Cores/Chip | 6 |
| Memory Size | 48 GB |
| Operating System | CentOS 6.4 (Linux 2.6.32-358.0.1.el6.x86_64) |
| Network Type | Intel Corporation 82599EB 10-Gigabit SFI/SFP+ |

| Network Attached Storage Type | NFS V3 |
|---|---|
| Number of Load Generators | 16 |
| Number of Processes per LG | 512 |
| Biod Max Read Setting | 2 |
| Biod Max Write Setting | 2 |
| Block Size | 0 |

| LG No | LG Type | Network | Target Filesystems | Notes |
|---|---|---|---|---|
| 1..16 | LG1 | 1 | /vol0/wanboi4 | LG1 nodes are all connected to the same single network switch. |

Each client mounted the single filesystem through all FXT nodes.

Each load-generating client hosted 512 processes, assigned evenly across the 32 network interfaces so that every network path to the FXT appliances carried the same number of processes (16 per interface per client). The filesystem data was evenly distributed across all disks, the FXT appliances, and the Core filer storage server.
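Round-robin assignment is a simple way to obtain the even split of processes across network paths described above. The sketch below is illustrative only. The `10.0.0.x` addresses are hypothetical placeholders for the 32 SUT IP addresses. The report does not say which assignment scheme the SPECsfs2008 client configuration actually used.

```python
from collections import Counter

CLIENTS = 16
PROCS_PER_CLIENT = 512
# Hypothetical addresses standing in for the 32 SUT IPs (one per FXT node).
SUT_IPS = [f"10.0.0.{i}" for i in range(1, 33)]


def assign(client: int, procs: int, ips: list[str]) -> list[str]:
    """Round-robin: process p on a client targets ips[(p + client) % len(ips)].

    Offsetting by the client index means that, if procs were not a multiple
    of len(ips), the leftover processes would not all land on the first IPs.
    """
    return [ips[(p + client) % len(ips)] for p in range(procs)]


per_ip: Counter[str] = Counter()
for c in range(CLIENTS):
    per_ip.update(assign(c, PROCS_PER_CLIENT, SUT_IPS))

print(len(per_ip), set(per_ip.values()))  # 32 {256}
```

Each client contributes 512 / 32 = 16 processes per interface, so all 16 clients together place 256 processes on each of the 32 FXT addresses.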


Generated on Mon Apr 08 11:15:18 2013 by SPECsfs2008 HTML Formatter

Copyright © 1997-2008 Standard Performance Evaluation Corporation

First published at SPEC.org on 08-Apr-2013