SPECsfs2008_nfs.v3 Result

EMC Corporation: Celerra VG8 Server Failover Cluster, 2 Data Movers (1 stdby) / Symmetrix VMAX

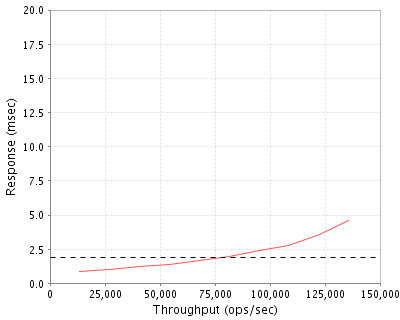

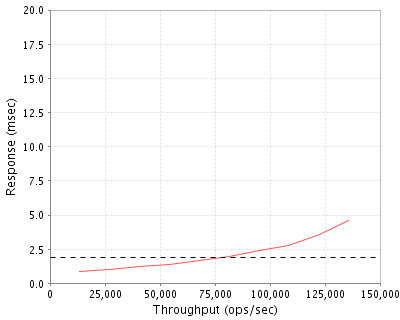

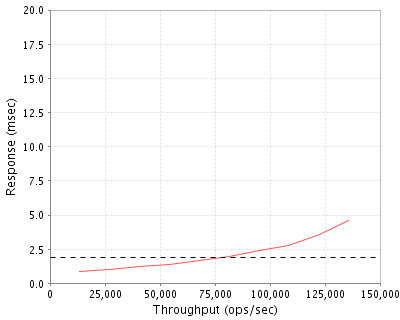

SPECsfs2008_nfs.v3 = 135521 Ops/Sec (Overall Response Time = 1.92 msec)

Performance

| Throughput (ops/sec) | Response (msec) |
|---|---|
| 13521 | 0.9 |
| 27056 | 1.0 |
| 40730 | 1.2 |
| 54289 | 1.4 |
| 67857 | 1.7 |
| 81592 | 2.0 |
| 95206 | 2.4 |
| 108604 | 2.8 |
| 122448 | 3.6 |
| 135521 | 4.6 |
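The reported Overall Response Time of 1.92 msec can be approximately reproduced from the ten throughput/response pairs in the Performance table. The sketch below assumes the figure is the area under the response-time curve divided by the peak throughput, integrated with the trapezoidal rule from an added (0, 0) origin point. That method and the origin point are assumptions not stated in this report, and the function name is hypothetical. The inputs are the rounded table values, so the result is only approximate.

```python
# Throughput (ops/sec) and response time (msec) pairs from the Performance table.
points = [
    (13521, 0.9), (27056, 1.0), (40730, 1.2), (54289, 1.4), (67857, 1.7),
    (81592, 2.0), (95206, 2.4), (108604, 2.8), (122448, 3.6), (135521, 4.6),
]


def overall_response_time(points, origin=(0, 0.0)):
    """Area under the response-time curve divided by peak throughput.

    ASSUMPTION: trapezoidal integration starting from an added origin
    point; the report itself does not describe the calculation.
    """
    curve = [origin] + sorted(points)
    area = 0.0
    for (x0, y0), (x1, y1) in zip(curve, curve[1:]):
        area += (x1 - x0) * (y0 + y1) / 2.0  # trapezoid between two load points
    peak_throughput = curve[-1][0]
    return area / peak_throughput


ort = overall_response_time(points)
print(f"Overall response time: {ort:.2f} msec")
```

Under these assumptions the computed value agrees with the published 1.92 msec to two decimal places.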

Product and Test Information

| | |
|---|---|
| Tested By | EMC Corporation |
| Product Name | Celerra VG8 Server Failover Cluster, 2 Data Movers (1 stdby) / Symmetrix VMAX |
| Hardware Available | August 2010 |
| Software Available | August 2010 |
| Date Tested | July 2010 |
| SFS License Number | 47 |
| Licensee Locations | Hopkinton, MA |

The Celerra VG8 Gateway Server system is a consolidation of file-based servers with NAS (NFS, CIFS) applications configured in a 1+1 High-Availability cluster. The servers deliver network services over high-speed Gigabit Ethernet. The cluster tested here consists of one active Data Mover that provides 8 Jumbo-frame capable Gigabit Ethernet interfaces and one stand-by Data Mover to provide high availability. The Celerra VG8 is a gateway to the Symmetrix VMAX shared storage array.

The Symmetrix VMAX is a storage architecture using 4 VMAX engines interconnected via a set of multiple active 4 Gbit/s FC HBAs connecting to the fabric.

Configuration Bill of Materials

| Item No | Qty | Type | Vendor | Model/Name | Description |
|---|---|---|---|---|---|
| 1 | 1 | Enclosure | EMC | VG8-DME0 | Celerra VG8 empty Data Mover add on enclosure |
| 2 | 1 | Enclosure | EMC | VG8-DME1 | Celerra VG8 empty Data Mover add on enclosure |
| 3 | 2 | Data Mover | EMC | VG8-DM-8A | Celerra VG8 Data Mover, 8 GbE ports, 4 FC ports |
| 4 | 1 | Control station | EMC | VG8-CSB | Celerra VG8 control station (Administration only) |
| 5 | 2 | Software | EMC | VG8-UNIX-L | Celerra VG8 UNIX License |
| 6 | 1 | Intelligent Storage Array Engine | EMC | SB-64-BASE | Symmetrix VMAX Base Engine, 64 GB Cache |
| 7 | 3 | Intelligent Storage Array Engine | EMC | SB-ADD64NDE | Symmetrix VMAX Add Engine, 64 GB Cache |
| 8 | 16 | FE IO Module | EMC | SB-FE80000 | Symmetrix VMAX Front End IO Module with multimode SFP's |
| 9 | 32 | Drive Enclosure | EMC | SB-DE15-DIR | Symmetrix VMAX Direct Connect Storage Bay, Drive Enclosure |
| 10 | 304 | FC Disk | SEAGATE | NS4154501B | Symmetrix VMAX Cheetah 450 GB 15K.6 4 Gbit/s FC Disks |
| 11 | 8 | FC Disk | SEAGATE | NS4154501B | Symmetrix VMAX Cheetah 450 GB 15K.6 4 Gbit/s FC Disks |
| 12 | 4 | Standby Power Supply | EMC | SB-DB-SPS | VMAX Standby Power Supply |
| 13 | 1 | FC Switch | EMC | EMC DS-5100B | 40 port Fibre Channel Switch |

Server Software

| | |
|---|---|
| OS Name and Version | DART 6.0.36.2 |
| Other Software | EMC Celerra Control Station Linux 2.6.18-128.1.1.6005.EMC |
| Filesystem Software | Celerra UxFS File System |

Server Tuning

| Name | Value | Description |
|---|---|---|
| ufs syncInterval | 22500 | Timeout between UFS log flushes |
| file asyncThresholdPercentage | 80 | Total cached dirty blocks for NFSv3 async writes |
| ufs cgHighWaterMark | 131071 | Defines the system's high water mark for the number of CG entries in CG cache |
| ufs inoBlkHashSize | 170669 | Inode block hash size |
| kernel autoreboot | 600 | Delay until autoreboot |
| ufs updateAccTime | 0 | Disable access time updates |
| ufs nFlushDir | 64 | Number of UxFS directory and indirect blocks flush threads |
| file prefetch | 0 | Disable DART read pre-fetch |
| ufs inoHighWaterMark | 65536 | Number of dirty inode buffers per filesystem |
| nfs thrdToStream | 16 | Number of NFS flush threads per stream |
| ufs inoHashTableSize | 1000003 | Inode hash table size |
| kernel maxStrToBeProc | 32 | Number of network streams to process at once |
| ufs nFlushIno | 96 | Number of UxFS inode blocks flush threads |
| ufs nFlushCyl | 64 | Number of UxFS cylinder group blocks flush threads |
| nfs nthreads | 6 | Number of threads dedicated to serve nfs requests |
| nfs withoutCollector | 1 | Enable NFS-to-CPU thread affinity |
| file dnlcNents | 12500000 | Directory name lookup cache size |
| nfs ofCachesize | 1080000 | NFS openfile cache size |
| file cachedNodes | 1536000 | filesystem vnodes cache size |
| tcp backlog | 128 | Connection limit for a given port |
| nfs start nfsd | 4 | Number of NFS daemons |

Server Tuning Notes

Disks and Filesystems

| Description | Number of Disks | Usable Size |
|---|---|---|
| This set of 304 FC disks is divided into 152 2-disk RAID1 pairs, each with 4 LUs per drive, exported as 304 logical volumes. All data file systems reside on these disks. | 304 | 18.8 TB |
| This set of FC disks consists of 4 2-disk RAID1 pairs, each with 2 LUs per drive, exported as 8 logical volumes. These disks are reserved for Celerra system use. | 8 | 900.0 GB |
| Total | 312 | 19.7 TB |

| | |
|---|---|
| Number of Filesystems | 4 |
| Total Exported Capacity | 19234 GB |
| Filesystem Type | UxFS |
| Filesystem Creation Options | Default |
| Filesystem Config | Each filesystem is striped (32 KB element size), across 76 disks (304 logical volumes) for fs1 fs2 fs3 and fs4 |
| Fileset Size | 15806.5 GB |

The drives were configured as 152 RAID1 pairs, with 38 pairs assigned to each Symmetrix VMAX engine. Each RAID1 pair had 6 LUNs created on it, of which only 1 LUN was used per RAID1 pair. Four stripes were created, one per group of 38 RAID1 pairs, with a stripe element size of 32 KB. Four file systems were created, one per stripe, so each Symmetrix VMAX engine provided access to the drives of one file system. All 4 file systems were mounted on and exported by the Celerra Data Mover. Each client mounted all 4 file systems through each network interface of the Celerra.
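The disk-to-file-system arithmetic in the paragraph above can be checked with a short sketch. It uses only the counts stated in this report (304 data disks, 2-disk RAID1 pairs, 4 VMAX engines). The variable names are hypothetical, and pairing file system `fsN` with engine number `N` is an illustrative assumption, since the report does not say which engine served which file system.

```python
# Counts taken from the report.
TOTAL_DATA_DISKS = 304
DISKS_PER_RAID1_PAIR = 2
VMAX_ENGINES = 4

raid1_pairs = TOTAL_DATA_DISKS // DISKS_PER_RAID1_PAIR          # RAID1 pairs overall
pairs_per_engine = raid1_pairs // VMAX_ENGINES                  # pairs behind each engine
disks_per_filesystem = pairs_per_engine * DISKS_PER_RAID1_PAIR  # disks under each stripe

# One stripe and one file system per engine.
# ASSUMPTION: fsN is paired with engine N purely for illustration.
layout = {
    f"fs{n}": {"engine": n, "raid1_pairs": pairs_per_engine, "disks": disks_per_filesystem}
    for n in range(1, VMAX_ENGINES + 1)
}

for name, info in layout.items():
    print(name, info)
```

The result, 38 RAID1 pairs and 76 disks per file system, matches the "across 76 disks" figure given in the Filesystem Config entry.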

Network Configuration

| Item No | Network Type | Number of Ports Used | Notes |
|---|---|---|---|
| 1 | Jumbo Gigabit Ethernet | 8 | This is the Gigabit network interface used for the active Data Mover. |

Network Configuration Notes

All Gigabit network interfaces were connected to a Cisco 6509 switch.

Benchmark Network

An MTU size of 9000 was set for all connections to the switch. Each Data Mover was connected to the network via 8 ports. Each LG1-class workload machine was connected through one port.

Processing Elements

| Item No | Qty | Type | Description | Processing Function |
|---|---|---|---|---|
| 1 | 1 | CPU | Single socket Intel Six Core Westmere 2.8 GHz VG8 with QPI speed 6400 MHz for each Data Mover server. 1 chip active for the workload. (1 standby not in the quantity) | NFS protocol, UxFS filesystem |

Processing Element Notes

Each Data Mover has one physical processor.

Memory

| Description | Size in GB | Number of Instances | Total GB | Nonvolatile |
|---|---|---|---|---|
| Each Data Mover main memory. (24 GB in the standby Data Mover not in the quantity) | 24 | 1 | 24 | V |
| Symmetrix VMAX storage array battery backed global memory. (64 GB per Symmetrix VMAX engine) | 64 | 4 | 256 | NV |
| Grand Total Memory Gigabytes | | | 280 | |

Memory Notes

The Symmetrix VMAX was configured with a total of 256 GB of memory. The memory is backed up with sufficient battery power to safely destage all the cached data onto the disk in the event of a power failure.
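The memory totals can be tallied from the two rows of the Memory table. This is a minimal sketch with hypothetical field names. It reproduces the 280 GB grand total and separates the battery-backed (nonvolatile) portion from the volatile Data Mover memory.

```python
# (description, size in GB, number of instances, nonvolatile?) from the Memory table.
memory_rows = [
    ("Data Mover main memory", 24, 1, False),
    ("Symmetrix VMAX battery backed global memory", 64, 4, True),
]

grand_total_gb = sum(size * count for _, size, count, _ in memory_rows)
nonvolatile_gb = sum(size * count for _, size, count, nv in memory_rows if nv)
volatile_gb = grand_total_gb - nonvolatile_gb

print(f"Grand total: {grand_total_gb} GB")
print(f"Nonvolatile: {nonvolatile_gb} GB, volatile: {volatile_gb} GB")
```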

Stable Storage

4 NFS file systems were used. Each RAID1 pair had 4 LUs bound on it. Each file system was striped over a quarter of the logical volumes. The storage array had 8 Fibre Channel connections, 4 per Data Mover. In this configuration, NFS stable write and commit operations are not acknowledged until after the storage array has acknowledged that the related data has been stored in stable storage (i.e. NVRAM or disk).

System Under Test Configuration Notes

The system under test consisted of one Celerra VG8 Gateway Data Mover attached to a Symmetrix VMAX Storage Array with 8 FC links. The Data Movers were running DART 6.0.36.2. Eight GbE ports on the Data Mover were connected to the network.

Other System Notes

Failover is supported by an additional Celerra Data Mover that operates in stand-by mode. In the event of a Data Mover failure, the stand-by unit takes over the function of the failed unit. The stand-by Data Mover does not contribute to the performance of the system and it is not included in the components listed above.

Test Environment Bill of Materials

| Item No | Qty | Vendor | Model/Name | Description |
|---|---|---|---|---|
| 1 | 18 | Dell | Dell PowerEdge 1850 | Dell server with 1 GB RAM and the Linux 2.6.9-42.ELsmp operating system |

Load Generators

| | |
|---|---|
| LG Type Name | LG1 |
| BOM Item # | 1 |
| Processor Name | Intel(R) Xeon(TM) CPU 3.60GHz |
| Processor Speed | 3.6 GHz |
| Number of Processors (chips) | 2 |
| Number of Cores/Chip | 2 |
| Memory Size | 1 GB |
| Operating System | Linux 2.6.9-42.ELsmp |
| Network Type | 1 x Broadcom BCM5704 NetXtreme Gigabit Ethernet |

Load Generator (LG) Configuration

Benchmark Parameters

| | |
|---|---|
| Network Attached Storage Type | NFS V3 |
| Number of Load Generators | 18 |
| Number of Processes per LG | 32 |
| Biod Max Read Setting | 5 |
| Biod Max Write Setting | 5 |
| Block Size | AUTO |

Testbed Configuration

| LG No | LG Type | Network | Target Filesystems | Notes |
|---|---|---|---|---|
| 1..18 | LG1 | 1 | /fs1,/fs2,/fs3,/fs4 | N/A |

Load Generator Configuration Notes

All filesystems were mounted on all clients, which were connected to the same physical and logical network.
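To put the benchmark parameters in perspective, the peak throughput can be divided across the load generators, their processes, and the Data Mover's network ports. This is a rough sketch that assumes the load was spread perfectly evenly, which the report does not measure or claim. The variable names are hypothetical, and all input values come from the tables in this report.

```python
# Values from the Performance, Benchmark Parameters and Network Configuration tables.
PEAK_OPS_PER_SEC = 135521
LOAD_GENERATORS = 18
PROCESSES_PER_LG = 32
DATA_MOVER_GBE_PORTS = 8

total_processes = LOAD_GENERATORS * PROCESSES_PER_LG

# ASSUMPTION: perfectly even distribution of operations; real per-client
# and per-port rates are not given in the report.
ops_per_lg = PEAK_OPS_PER_SEC / LOAD_GENERATORS
ops_per_process = PEAK_OPS_PER_SEC / total_processes
ops_per_port = PEAK_OPS_PER_SEC / DATA_MOVER_GBE_PORTS

print(f"Load-generating processes: {total_processes}")
print(f"Ops/sec per load generator: {ops_per_lg:.0f}")
print(f"Ops/sec per process: {ops_per_process:.0f}")
print(f"Ops/sec per Data Mover port: {ops_per_port:.0f}")
```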

Uniform Access Rule Compliance

Each client has the same file systems mounted from the active Data Mover.

Other Notes

Failover is supported by an additional Celerra Data Mover that operates in stand-by mode. In the event of a Data Mover failure, the stand-by unit takes over the function of the failed unit. The stand-by Data Mover does not contribute to the performance of the system and it is not included in the components listed above.

The Symmetrix VMAX was configured with 256 GB of memory, 64 GB per VMAX engine. The memory is backed up with sufficient battery power to safely destage all cached data onto the disk in the event of a power failure.

Config Diagrams

Generated on Wed Aug 25 11:57:22 2010 by SPECsfs2008 HTML Formatter

Copyright © 1997-2008 Standard Performance Evaluation Corporation

First published at SPEC.org on 25-Aug-2010