|

|

|---|

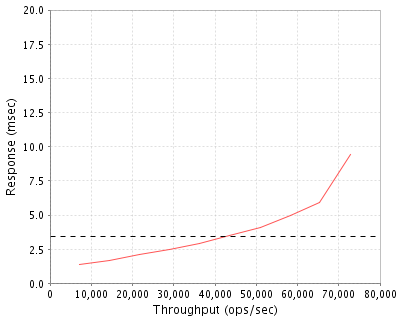

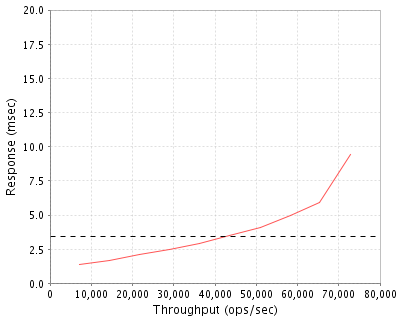

| BlueArc Corporation | BlueArc Mercury 100, Single Server |
|---|---|
| SPECsfs2008_nfs.v3 | 72921 Ops/Sec (Overall Response Time = 3.39 msec) |


| Tested By | BlueArc Corporation |
|---|---|
| Product Name | BlueArc Mercury 100, Single Server |
| Hardware Available | July 2009 |
| Software Available | October 2009 |
| Date Tested | October 2009 |
| SFS License Number | 63 |
| Licensee Locations | San Jose, CA, USA |

The BlueArc Mercury Server is a next-generation network storage platform that consolidates multiple applications and simplifies storage management for businesses with mid-range storage requirements. The Mercury platform uses a hybrid-core architecture that combines field-programmable gate arrays (FPGAs) with traditional multi-core processors to deliver enhanced performance across a variety of application environments, including general-purpose file systems, databases, messaging and online fixed content.

The Mercury server series comprises two models, the Mercury 50 and the Mercury 100, which scale to 1PB and 2PB of usable data storage respectively and support a range of SAS, FC, Nearline SAS and SATA drive technologies. Both servers support simultaneous 1GbE and 10GbE LAN access and 4Gbps FC storage connectivity.

| Item No | Qty | Type | Vendor | Model/Name | Description |
|---|---|---|---|---|---|
| 1 | 1 | Server | BlueArc | SX345250 | BlueArc Mercury Server, Model 100 |
| 2 | 6 | Disk Controller | BlueArc | SX345263 | RC12C Storage Controller |
| 3 | 6 | Disk Expansion | BlueArc | SX345264 | RC12Exp Storage Expansion |
| 4 | 12 | Disk Drive | BlueArc | SX345265 | RC12 Disk 300GB SAS 15K Drive (12 Pk) |
| 5 | 2 | FC Switch | BlueArc | SX345120 | FC Switch, 4Gbps 16-port w/SFPs |
| 6 | 1 | Server | BlueArc | SX345268 | System Management Unit, Model 200 |
| 7 | 1 | Software | BlueArc | SX435065 | Software License (Tier A) - Unix (NFS) |

| OS Name and Version | SU 6.5.1848 |
|---|---|
| Other Software | None |
| Filesystem Software | SiliconFS 6.5.1848 |

| Name | Value | Description |
|---|---|---|
| cifs_auth | off | Disable CIFS security authorization |
| fsm cache-bias | small-files | Set metadata cache bias to small files |
| fs-accessed-time | off | Accessed time management was turned off |
| shortname | off | Disable shortname generation for CIFS clients |
| fsm read-ahead | 0 | Disable file read-ahead |
| security-mode | UNIX | Security mode is native UNIX |

N/A

| Description | Number of Disks | Usable Size |
|---|---|---|
| 300GB SAS 15K RPM Disk Drives (Seagate ST3450856SS) | 144 | 29.4 TB |
| 160GB SATA 5400 RPM Disk Drives (Hitachi HTE543216L9A300), used to store the core operating system and management logs; not used for cache or data storage | 2 | 160.0 GB |
| Total | 146 | 29.6 TB |

| Number of Filesystems | 1 |
|---|---|
| Total Exported Capacity | 20TB |
| Filesystem Type | WFS-2 |
| Filesystem Creation Options | 4K filesystem block size; dsb-count (dynamic system block) set at 768 |
| Filesystem Config | A single FS spanning 18 LUNs of 6+2 RAID 6 |
| Fileset Size | 8485.4 GB |

The storage configuration consisted of 6 RC12 RAID arrays, each with a single RC12C controller shelf and a single RC12Exp expansion shelf, for a total of 12 storage shelves with 12 disk drives per shelf. Each array was configured with three 6+2 RAID 6 LUNs.
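The storage figures can be cross-checked with a little arithmetic: 6 arrays of three 6+2 LUNs give 18 LUNs and 144 drives, of which 108 hold data. The sketch below assumes decimal drive capacity (300 GB = 300 × 10^9 bytes) and ignores RAID and filesystem metadata; reading the reported "29.4 TB" as binary terabytes (TiB) and attributing the small remaining gap to overhead are assumptions, not statements from the report.

```python
# Sketch: derive usable capacity from the RAID 6 layout described above.
# ASSUMPTIONS: 300 GB = 300 * 10**9 bytes (decimal, as drive vendors quote);
# RAID and filesystem metadata overhead are ignored.

DRIVE_BYTES = 300 * 10**9     # nominal 300GB SAS drive
ARRAYS = 6                    # RC12 arrays
LUNS_PER_ARRAY = 3            # three 6+2 RAID 6 LUNs per array
DATA_DRIVES_PER_LUN = 6       # 6 data drives ...
PARITY_DRIVES_PER_LUN = 2     # ... plus 2 parity drives (RAID 6)


def raid6_layout():
    """Return (LUN count, total drives, data drives, usable TiB)."""
    luns = ARRAYS * LUNS_PER_ARRAY
    total_drives = luns * (DATA_DRIVES_PER_LUN + PARITY_DRIVES_PER_LUN)
    data_drives = luns * DATA_DRIVES_PER_LUN
    usable_bytes = data_drives * DRIVE_BYTES
    usable_tib = usable_bytes / 2**40  # binary terabytes
    return luns, total_drives, data_drives, usable_tib


luns, total, data, tib = raid6_layout()
print(f"{luns} LUNs, {total} drives, {data} data drives, {tib:.2f} TiB usable")
```

This prints `18 LUNs, 144 drives, 108 data drives, 29.47 TiB usable`, consistent with the 144-drive count and close to the 29.4 TB usable size in the disk table.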

Each controller shelf has two 4Gbps FC H-ports connected to the server via a redundant pair of Brocade 200E switches. The Mercury server connects to each Brocade 200E over 4Gbps FC, so that a completely redundant path exists from server to storage. The expansion shelves connect to the controller by dual 6Gb/s SAS connections.

Each Mercury server has two internal mirrored hard disk drives, which store the core operating software and system logs. These drives are not used for cache or data storage.

| Item No | Network Type | Number of Ports Used | Notes |
|---|---|---|---|
| 1 | 10 Gigabit Ethernet | 1 | Integrated 1GbE / 10GbE Ethernet controller |

The network interface was connected to a Fujitsu XG2000 switch, which provided connectivity to the clients. The interface was configured to use jumbo frames (MTU of 9000 bytes).
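One way to see why jumbo frames help is to count the frames a single large transfer needs. The sketch below is illustrative only: it assumes IPv4 (20-byte header) and TCP with the timestamp option (32 bytes in total), ignores RPC and NFS headers, and uses a hypothetical 32 KiB transfer (the report lists the block size only as AUTO).

```python
import math

# ASSUMPTIONS: IPv4 without options, TCP with the 12-byte timestamp option;
# RPC/NFS headers are ignored. The Ethernet MTU bounds the IP packet size.
IP_HEADER = 20
TCP_HEADER = 20 + 12


def tcp_payload_per_frame(mtu: int) -> int:
    """Bytes of TCP payload that fit in one frame at the given MTU."""
    return mtu - IP_HEADER - TCP_HEADER


def frames_needed(transfer_bytes: int, mtu: int) -> int:
    """Frames required to carry transfer_bytes of payload."""
    return math.ceil(transfer_bytes / tcp_payload_per_frame(mtu))


TRANSFER = 32 * 1024  # hypothetical transfer size, not from the report
for mtu in (1500, 9000):
    print(mtu, tcp_payload_per_frame(mtu), frames_needed(TRANSFER, mtu))
```

Under these assumptions a 32 KiB transfer needs 23 frames at the standard 1500-byte MTU but only 4 at MTU 9000, so the server and clients handle far fewer per-packet interrupts and header-processing steps.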

Each load generator (LG) has a Myricom 10GbE single-port PCIe network interface and connects to the Fujitsu XG2000 via a single 10GbE link.

| Item No | Qty | Type | Description | Processing Function |
|---|---|---|---|---|
| 1 | 2 | FPGA | Altera Stratix III EP3SE260 | Storage Interface, Filesystem |
| 2 | 2 | FPGA | Altera Stratix III EP3SL340 | Network Interface, NFS, Filesystem |
| 3 | 1 | CPU | Intel E8400 3.0GHz, Dual Core | Management |
| 4 | 12 | CPU | N/A | HW RAID |

Each RAID controller shelf has dual controllers with one CPU each (2 per shelf). With 6 arrays in this configuration, this accounts for the 12 HW RAID CPUs listed above.

| Description | Size in GB | Number of Instances | Total GB | Nonvolatile |
|---|---|---|---|---|
| Server Main Memory | 16 | 1 | 16 | V |
| Server Filesystem and Storage Cache | 14 | 1 | 14 | V |
| Server Battery-backed NVRAM | 2 | 1 | 2 | NV |
| RAID Cache | 1 | 6 | 6 | V |
| Grand Total Memory Gigabytes | | | 38 | |

The server has 16GB of main memory, used for the operating system and in support of the FPGA functions. A further 14GB is dedicated to filesystem metadata and sector caches. A separate, integrated battery-backed NVRAM module on the filesystem board provides stable storage for writes that have not yet been written to disk.
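The grand total in the memory table is the sum of size × instances over its rows. The short sketch below re-adds the rows (values copied from the table) and also separates out the nonvolatile portion.

```python
# Rows copied from the memory table: (description, size_gb, instances, nonvolatile)
MEMORY = [
    ("Server Main Memory", 16, 1, False),
    ("Server Filesystem and Storage Cache", 14, 1, False),
    ("Server Battery-backed NVRAM", 2, 1, True),
    ("RAID Cache", 1, 6, False),
]


def memory_totals(rows):
    """Return (total GB, nonvolatile GB) across all rows."""
    total = sum(size * count for _, size, count, _ in rows)
    nonvolatile = sum(size * count for _, size, count, nv in rows if nv)
    return total, nonvolatile


print(memory_totals(MEMORY))  # (38, 2)
```

Only 2 of the 38 GB is nonvolatile, which is why the stable-storage guarantee rests on the NVRAM module rather than on the larger caches.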

The RAID controllers were configured in write-through mode (write cache disabled) with read-ahead disabled.

The Mercury server writes first to its internal battery-backed NVRAM (72-hour battery retention). Data from NVRAM is then written to the disk drive arrays at the earliest opportunity, and always within a few seconds of arriving in NVRAM.
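The write path described above (acknowledge once the data is in NVRAM, flush to disk as soon as possible but within a bounded time) can be sketched as a toy model. This is a hypothetical illustration, not BlueArc's implementation: `NvramStage`, the `disks_idle` flag and `FLUSH_DEADLINE_S` are invented names, and the 2-second deadline merely stands in for "within a few seconds".

```python
from collections import deque

# Toy model, HYPOTHETICAL: not a BlueArc API. FLUSH_DEADLINE_S stands in
# for "within a few seconds"; it is not a documented value.
FLUSH_DEADLINE_S = 2.0


class NvramStage:
    def __init__(self):
        self.pending = deque()  # (arrival_time, block_id), oldest first
        self.on_disk = set()

    def write(self, block_id, now):
        """Stage the write in NVRAM and acknowledge it immediately."""
        self.pending.append((now, block_id))
        return "ack"

    def flush(self, now, disks_idle=False):
        """Move staged blocks to disk.

        If the disks are idle ("earliest opportunity"), flush everything;
        otherwise flush only blocks that have reached the deadline.
        """
        flushed = []
        while self.pending and (
            disks_idle or now - self.pending[0][0] >= FLUSH_DEADLINE_S
        ):
            _, block_id = self.pending.popleft()
            self.on_disk.add(block_id)
            flushed.append(block_id)
        return flushed


stage = NvramStage()
stage.write("a", now=0.0)
stage.write("b", now=1.5)
print(stage.flush(now=2.0))                   # ['a'] ('b' is not yet overdue)
print(stage.flush(now=2.1, disks_idle=True))  # ['b']
```

Because the client is acknowledged at the NVRAM stage, write latency is decoupled from the disks, which is consistent with running the RAID controllers with their own write cache disabled.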

The system under test consisted of a single 3U Mercury 100 server, connected to storage via two 16-port 4Gbps FC switches. The storage consisted of 6 arrays of 24 drives each, with a RAID controller shelf and a disk expansion shelf in each array. All connectivity from server to storage was via a 4Gbps switched FC fabric. For the test, each FC switch was zoned into 2 zones, for a total of 4 distinct zones. The Mercury 100 server connected to these zones via its 4 integrated 4Gbps FC ports (corresponding to 4 H-ports). Each storage array was connected to 2 zones (corresponding to its 2 H-ports), providing a fully redundant path from server to storage. Expansion shelves were connected to the controllers via dual SAS interconnects.
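The redundancy claim can be checked with a small model of the fabric. The report states the counts (2 switches × 2 zones, 4 server FC ports, 2 zones per array) but not the exact mapping, so the sketch assumes one server port per zone and that each array's two H-ports land on zones of different switches, alternating between zone 0 and zone 1. Under those assumptions, losing either switch still leaves every array reachable.

```python
from itertools import product

# ASSUMPTIONS (not stated in the report): one server FC port per zone;
# each array's two H-ports attach to zones on *different* switches;
# arrays alternate between zone 0 and zone 1 (hypothetical assignment).
SWITCHES = ("sw1", "sw2")
ZONES = [(sw, z) for sw, z in product(SWITCHES, (0, 1))]  # 4 zones
SERVER_PORTS = set(ZONES)  # one server port in every zone


def array_zones(array_id):
    """Zones an array's two H-ports attach to (hypothetical mapping)."""
    z = array_id % 2
    return {(sw, z) for sw in SWITCHES}


def reachable_after_switch_failure(failed_switch, arrays=range(6)):
    """True if every array still shares a live zone with the server."""
    live = {zone for zone in SERVER_PORTS if zone[0] != failed_switch}
    return all(array_zones(a) & live for a in arrays)


for sw in SWITCHES:
    ok = reachable_after_switch_failure(sw)
    print(sw, "failed ->", "all arrays reachable" if ok else "outage")
```

If an array's two H-ports were instead placed on the same switch, the model would report an outage for that switch, which is the failure mode the dual-switch layout is meant to avoid.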

The System Management Unit (SMU) is part of the total system solution but is used for management purposes only; it was not active during the test.

| Item No | Qty | Vendor | Model/Name | Description |
|---|---|---|---|---|
| 1 | 12 | Unbranded | N/A | Unbranded 1U Solaris client with Tyan n6650W dual Opteron motherboard, 4GB RAM |
| 2 | 1 | Fujitsu | XG2000 | Fujitsu XG2000 20-port 10GbE Switch |

| LG Type Name | LG1 |
|---|---|
| BOM Item # | 1 |
| Processor Name | AMD Opteron 2218 |
| Processor Speed | 2.6 GHz |
| Number of Processors (chips) | 2 |
| Number of Cores/Chip | 2 |
| Memory Size | 8 GB |
| Operating System | Solaris 10 |
| Network Type | 1 x Myricom (10G-PCIE-8A-R+E) 10Gbit Single Port PCIe |

| Network Attached Storage Type | NFS V3 |
|---|---|
| Number of Load Generators | 12 |
| Number of Processes per LG | 56 |
| Biod Max Read Setting | 2 |
| Biod Max Write Setting | 2 |
| Block Size | AUTO |
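Combining the benchmark parameters with the headline result gives a rough sense of the per-client load. The sketch assumes the peak throughput was spread evenly over all load-generating processes; actual per-process rates will differ somewhat.

```python
# Values from the result header and the benchmark parameters above.
PEAK_OPS = 72921      # SPECsfs2008_nfs.v3 ops/sec
LOAD_GENERATORS = 12
PROCS_PER_LG = 56

# ASSUMPTION: load divided evenly across processes and clients.
total_procs = LOAD_GENERATORS * PROCS_PER_LG
per_proc = PEAK_OPS / total_procs
per_lg = PEAK_OPS / LOAD_GENERATORS

print(total_procs, round(per_proc, 1), round(per_lg))
```

This gives 672 processes in total, roughly 108.5 ops/sec per process and about 6,077 ops/sec per client.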

| LG No | LG Type | Network | Target Filesystems | Notes |
|---|---|---|---|---|
| 1..12 | LG1 | 1 | /f1 | N/A |

All clients accessed one common filesystem on the server over a single 10GbE network.

Each load-generating client hosted 56 processes, all accessing a single filesystem on the Mercury server through a single common network connection. The filesystem was uniformly striped across the available storage arrays, so all clients accessed all arrays uniformly.
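The effect of uniform striping can be illustrated with a simple placement model. The report does not describe how SiliconFS places data, so round-robin allocation over the 18 LUNs is an assumed, hypothetical policy used only to show why every client ends up touching every array in equal measure.

```python
from collections import Counter

# HYPOTHETICAL placement policy: round-robin over 18 LUNs
# (3 LUNs on each of 6 arrays). Not a documented SiliconFS algorithm.
ARRAYS, LUNS_PER_ARRAY = 6, 3
LUNS = [(a, l) for a in range(ARRAYS) for l in range(LUNS_PER_ARRAY)]


def lun_for_stripe(stripe_index):
    """Map a stripe number to an (array, lun) pair."""
    return LUNS[stripe_index % len(LUNS)]


def per_array_share(num_stripes):
    """Count how many stripes land on each array."""
    return Counter(lun_for_stripe(i)[0] for i in range(num_stripes))


print(per_array_share(1800))
```

With 1,800 stripes each array receives exactly 300, so no single array becomes a hotspot regardless of which client issues the I/O.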

None

BlueArc and SiliconFS are registered trademarks, and BlueArc Mercury is a trademark, of BlueArc Corporation in the United States and other countries. All other trademarks belong to their respective owners and should be treated as such.

Generated on Tue Nov 10 08:02:03 2009 by SPECsfs2008 HTML Formatter

Copyright © 1997-2008 Standard Performance Evaluation Corporation

First published at SPEC.org on 10-Nov-2009