SPECsfs2008_nfs.v3 Result

Avere Systems, Inc.: FXT 2500 (2 Node Cluster)

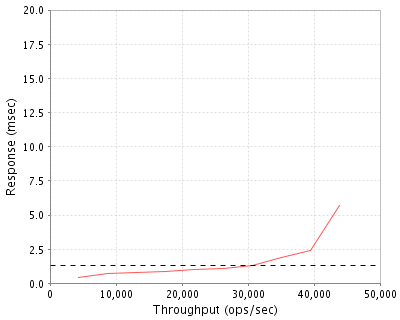

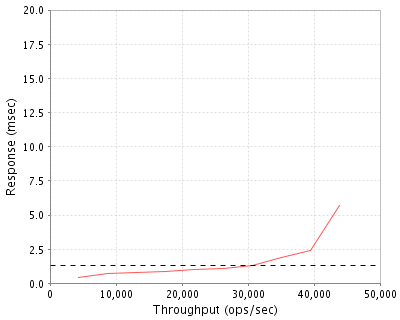

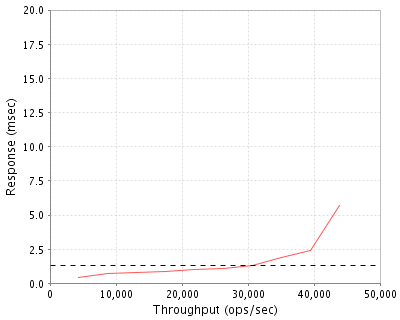

SPECsfs2008_nfs.v3 = 43796 Ops/Sec (Overall Response Time = 1.33 msec)

Performance

| Throughput (ops/sec) | Response (msec) |
| --- | --- |
| 4358 | 0.4 |
| 8727 | 0.7 |
| 13099 | 0.8 |
| 17473 | 0.9 |
| 21864 | 1.0 |
| 26234 | 1.1 |
| 30646 | 1.3 |
| 35006 | 1.9 |
| 39469 | 2.4 |
| 43796 | 5.7 |
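The report does not state how the overall response time is derived from the throughput and response columns. The sketch below assumes one common reading: the area under the response-time curve, integrated with the trapezoidal rule from an assumed origin point (0 ops/sec, 0 msec), divided by the peak throughput. The function name and the origin anchor are assumptions made for illustration, and the inputs are the rounded values from the table.

```python
# Throughput (ops/sec) and response time (msec) pairs from the Performance table.
points = [
    (4358, 0.4), (8727, 0.7), (13099, 0.8), (17473, 0.9), (21864, 1.0),
    (26234, 1.1), (30646, 1.3), (35006, 1.9), (39469, 2.4), (43796, 5.7),
]


def overall_response_time(points):
    """Area under the response-time curve divided by peak throughput.

    Assumption: the curve is anchored at the origin (0 ops/sec, 0 msec),
    and the area is approximated with the trapezoidal rule.
    """
    curve = [(0.0, 0.0)] + list(points)
    area = 0.0
    for (x0, y0), (x1, y1) in zip(curve, curve[1:]):
        area += (x1 - x0) * (y0 + y1) / 2.0
    peak_throughput = curve[-1][0]
    return area / peak_throughput


ort = overall_response_time(points)
print(f"Overall response time: {ort:.2f} msec")
```

Under these assumptions the result lands close to the reported 1.33 msec, which suggests the reading is at least consistent with the published figure.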

Product and Test Information

| Field | Value |
| --- | --- |
| Tested By | Avere Systems, Inc. |
| Product Name | FXT 2500 (2 Node Cluster) |
| Hardware Available | October 2009 |
| Software Available | October 2009 |
| Date Tested | September 2009 |
| SFS License Number | 9020 |
| Licensee Locations | Pittsburgh, PA, USA |

The Avere Systems FXT 2500 appliance provides tiered NAS storage that allows performance to scale independently of capacity. The FXT 2500 is built on a 64-bit architecture that is managed by Avere OS software. FXT clusters scale to as many as 25 nodes and support millions of IO operations per second and deliver multiple GB/s of bandwidth. The Avere OS software dynamically organizes data into tiers. Active data is placed on the FXT appliances while inactive data is placed on slower mass storage servers. The system accelerates performance of read, write and metadata operations. The FXT 2500 supports both NFSv3 and CIFS protocols. The tested configuration consisted of (2) FXT 2500 cluster nodes. The system also included a Linux server that acted as the mass storage system.

Configuration Bill of Materials

| Item No | Qty | Type | Vendor | Model/Name | Description |
| --- | --- | --- | --- | --- | --- |
| 1 | 2 | Storage Appliance | Avere Systems, Inc. | FXT 2500 | Avere Systems Tiered NAS Appliance running Avere OS 1.0 software. Includes (8) 450 GB SAS Disks. |
| 2 | 1 | Server Motherboard | Supermicro | X7DWN+ | Motherboard for Linux mass storage NFS Server. |
| 3 | 1 | Server Chassis | Supermicro | CSE-846E1-R900B | 4U 24-Drive Chassis for Linux mass storage NFS Server. |
| 4 | 7 | Disk Drive | Seagate | ST31000340NS | 1TB Barracuda ES.2 SATA 7200 RPM Disks for Linux mass storage NFS Server. |
| 5 | 1 | System Disk | Seagate | ST3500320NS | 500GB Barracuda ES.2 SATA 7200 RPM Disk for Linux mass storage NFS Server. |
| 6 | 1 | RAID Controller | LSI | SAS8888ELP | SAS/SATA RAID Controller with 512 MB Cache and External Battery Backup Unit for Linux mass storage NFS Server. |

Server Software

| Field | Value |
| --- | --- |
| OS Name and Version | Avere OS 1.0 |
| Other Software | Mass storage server runs Ubuntu 9.04 with Linux 2.6.28-11-server kernel |
| Filesystem Software | Avere OS 1.0 |

Server Tuning

| Name | Value | Description |
| --- | --- | --- |
| Slider Age | 8 hours | Files may be modified up to 8 hours before being written back to the mass storage system. |

Server Tuning Notes

A 6+1 RAID5 array was created on the Linux mass storage server using the following LSI MegaCli command line: 'MegaCli64 -CfgLdAdd -r5 '[72:0,72:1,72:2,72:3,72:4,72:5,72:6]' WB ADRA Cached NoCachedBadBBU -strpsz32 -a0'. Disk caches were then disabled with 'MegaCli64 -LDSetProp -DisDskCache -L0 -a0'. The first command creates a RAID5 6+1 array with write-back caching enabled in the controller (WB), adaptive read-ahead (ADRA), controller caching (Cached), caching disabled if the battery-backup unit fails (NoCachedBadBBU), and a 32 KB stripe unit size (-strpsz32) on controller zero (-a0). Complete documentation for the MegaCli command line tool is available at http://www.lsi.com/DistributionSystem/AssetDocument/80-00156-01_RevH_SAS_SW_UG.pdf.
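As an illustration of how the array-creation command is put together, the sketch below assembles it from its parts. It uses only the flags quoted in the notes above. Reading '72:0' as an enclosure:slot pair is an assumption, as are the variable names; nothing is executed, the command is only printed.

```python
import shlex

# Assumption: each "72:N" entry is an enclosure:slot pair on the controller.
enclosure = 72
slots = range(7)  # seven drives for the 6+1 RAID5 array
drives = "[" + ",".join(f"{enclosure}:{slot}" for slot in slots) + "]"

create_args = [
    "MegaCli64", "-CfgLdAdd", "-r5", drives,
    "WB",              # write-back caching in the controller
    "ADRA",            # adaptive read-ahead
    "Cached",          # controller caching
    "NoCachedBadBBU",  # disable caching if the battery-backup unit fails
    "-strpsz32",       # 32 KB stripe unit size
    "-a0",             # controller zero
]

# shlex.join quotes the bracketed drive list so a shell passes it through intact.
create_cmd = shlex.join(create_args)
print(create_cmd)
```

Building the drive list programmatically makes it harder to drop or duplicate a slot when the array size changes.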

Disks and Filesystems

| Description | Number of Disks | Usable Size |
| --- | --- | --- |
| Each FXT 2500 node contains (8) 450 GB 15K RPM SAS disks. All FXT data resides on these disks. | 16 | 6.2 TB |
| Each FXT 2500 node contains (1) 250 GB SATA disk. System disk. | 2 | 500.0 GB |
| The mass storage system contains (7) 1 TB SATA disks. The XFS file system is used to manage these disks and the FXT nodes access them via NFSv3. | 7 | 5.5 TB |
| The mass storage system contains (1) 500 GB SATA disk. System disk. | 1 | 500.0 GB |
| Total | 26 | 12.6 TB |

| Field | Value |
| --- | --- |
| Number of Filesystems | 1 |
| Total Exported Capacity | 5582 GB (Linux mass storage system capacity) |
| Filesystem Type | TFS (Tiered File System) |
| Filesystem Creation Options | Default on FXT nodes. Linux mass storage server XFS filesystem created with 'mkfs.xfs -d agsize=11706990592,sunit=64,swidth=384 -l size=128m,version=2,sunit=64,lazy-count=1 -i align=1 -f /dev/sdb'. Mounted using 'mount -t xfs -o logbufs=8,logbsize=262144,allocsize=4096,nobarrier /dev/sdb /export1' |
| Filesystem Config | 6+1 RAID5 configuration on Linux mass storage server. |
| Fileset Size | 5106.9 GB |
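The XFS stripe settings in the creation options can be checked against the RAID geometry described in the server tuning notes. The short sketch below does that arithmetic. It relies on the mkfs.xfs convention that the data-section sunit and swidth values are given in 512-byte units; the variable names are illustrative only.

```python
SECTOR_BYTES = 512  # mkfs.xfs '-d sunit=' and 'swidth=' are in 512-byte units

sunit_sectors = 64    # from '-d sunit=64'
swidth_sectors = 384  # from '-d swidth=384'
raid_stripe_kb = 32   # from the MegaCli '-strpsz32' option
raid_data_disks = 6   # 6+1 RAID5: six disks of data plus one disk's worth of parity

stripe_unit_kb = sunit_sectors * SECTOR_BYTES // 1024
stripe_width_kb = swidth_sectors * SECTOR_BYTES // 1024
implied_data_disks = swidth_sectors // sunit_sectors

print(f"XFS stripe unit:  {stripe_unit_kb} KB")
print(f"XFS stripe width: {stripe_width_kb} KB")
print(f"Data disks implied by swidth/sunit: {implied_data_disks}")
```

The stripe unit works out to 32 KB and the width to six stripe units, so the filesystem alignment matches the controller's stripe size and the six data disks of the 6+1 array.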

Network Configuration

| Item No | Network Type | Number of Ports Used | Notes |
| --- | --- | --- | --- |
| 1 | 10 Gigabit Ethernet | 2 | One 10 Gigabit Ethernet port used for each FXT 2500 appliance. |
| 2 | Gigabit Ethernet | 1 | The mass storage system is connected via a 1 Gigabit Ethernet. |

Network Configuration Notes

Each FXT 2500 was attached via a single 10 GbE port to an Arista DCS-7124S 24 port 10 GbE switch. The load generating clients were attached to an HP ProCurve 2900-48G switch via a single 1 GbE link. The HP switch had a 10 GbE uplink to the Arista switch. The mass storage server was attached to the HP switch via a single 1 GbE link. A 1500 byte MTU was used throughout the network.

Benchmark Network

An MTU size of 1500 was set for all connections to the switch. Each load generator was connected to the network via a single 1 GbE port. The SUT was configured with 2 separate IP addresses on one subnet. Each cluster node was connected via a 10 GbE NIC and was sponsoring 1 IP address.

Processing Elements

| Item No | Qty | Type | Description | Processing Function |
| --- | --- | --- | --- | --- |
| 1 | 4 | CPU | Intel Xeon E5420 2.50 GHz Quad-Core Processor | FXT 2500 Avere OS, Network, NFS/CIFS, Filesystem, Device Drivers |
| 2 | 2 | CPU | Intel Xeon E5420 2.50 GHz Quad-Core Processor | Linux mass storage system |

Processing Element Notes

Each file server has two physical processors.

Memory

| Description | Size in GB | Number of Instances | Total GB | Nonvolatile |
| --- | --- | --- | --- | --- |
| FXT System Memory | 64 | 2 | 128 | V |
| Mass storage system memory | 32 | 1 | 32 | V |
| FXT NVRAM module | 1 | 2 | 2 | NV |
| Mass storage system RAID Controller NVRAM Module | 0.5 | 1 | 0.5 | NV |
| Grand Total Memory Gigabytes | | | 162.5 | |
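The grand total can be reproduced from the per-row sizes and instance counts. The sketch below recomputes it and also splits the total into volatile and nonvolatile memory; the tuple layout and variable names are illustrative, not part of the report.

```python
# (description, size in GB, number of instances, nonvolatile?) from the Memory table.
memory_rows = [
    ("FXT System Memory", 64.0, 2, False),
    ("Mass storage system memory", 32.0, 1, False),
    ("FXT NVRAM module", 1.0, 2, True),
    ("Mass storage system RAID Controller NVRAM Module", 0.5, 1, True),
]

total_gb = sum(size * count for _, size, count, _ in memory_rows)
nonvolatile_gb = sum(size * count for _, size, count, nv in memory_rows if nv)
volatile_gb = total_gb - nonvolatile_gb

print(f"Grand total: {total_gb} GB")
print(f"Volatile:    {volatile_gb} GB")
print(f"Nonvolatile: {nonvolatile_gb} GB")
```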

Memory Notes

Each FXT node has main memory that is used for the operating system and for caching filesystem data. A separate, battery-backed NVRAM module is used to provide stable storage for writes that have not yet been written to disk.

Stable Storage

The Avere filesystem logs writes and metadata updates to the NVRAM module. Filesystem modifying NFS operations are not acknowledged until the data has been safely stored in NVRAM. The battery backing the NVRAM ensures that any uncommitted transactions persist for at least 72 hours.

System Under Test Configuration Notes

The system under test consisted of (2) Avere FXT 2500 nodes. Each node was attached to the network via 10 Gigabit Ethernet. Each FXT 2500 node contains (8) 450 GB SAS disks. The Linux mass storage system was attached to the network via a single 1 Gigabit Ethernet link. The mass storage server was a 4U Supermicro server configured with a RAID5 array of (7) 1 TB SATA disks.

Other System Notes

Test Environment Bill of Materials

| Item No | Qty | Vendor | Model/Name | Description |
| --- | --- | --- | --- | --- |
| 1 | 6 | Supermicro | X7DWU | Supermicro Server with 8GB of RAM |
| 2 | 1 | Arista Networks | DCS-7124S | Arista 24 Port 10 GbE Switch |
| 3 | 1 | HP | 2900-48G | HP ProCurve 48 Port 1 GbE Switch |

Load Generators

| Field | Value |
| --- | --- |
| LG Type Name | LG1 |
| BOM Item # | 1 |
| Processor Name | Intel Xeon E5420 2.50 GHz Quad-Core Processor |
| Processor Speed | 2.50 GHz |
| Number of Processors (chips) | 2 |
| Number of Cores/Chip | 4 |
| Memory Size | 8 GB |
| Operating System | CentOS 5.3 (Linux 2.6.18-128.el5) |
| Network Type | 1 x Intel Gigabit Ethernet Controller 82575EB |

Load Generator (LG) Configuration

Benchmark Parameters

| Field | Value |
| --- | --- |
| Network Attached Storage Type | NFS V3 |
| Number of Load Generators | 6 |
| Number of Processes per LG | 48 |
| Biod Max Read Setting | 2 |
| Biod Max Write Setting | 2 |
| Block Size | 0 |

Testbed Configuration

| LG No | LG Type | Network | Target Filesystems | Notes |
| --- | --- | --- | --- | --- |
| 1..6 | LG1 | 1 | /export1/3 | N/A |

Load Generator Configuration Notes

All clients were connected to a single filesystem through both FXT nodes.

Uniform Access Rule Compliance

Each load-generating client hosted 48 processes. The assignment of processes to network interfaces was done such that they were evenly divided across all network paths to the FXT appliances. The filesystem data was evenly distributed across all disks and FXT appliances.
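To make the even division concrete, the sketch below spreads the benchmark processes over the two FXT IP addresses. The report does not say how the assignment was actually performed; the round-robin scheme and the address labels here are hypothetical and only show one way to reach an even split.

```python
from collections import Counter

load_generators = 6   # from Benchmark Parameters
processes_per_lg = 48  # from Benchmark Parameters

# Hypothetical labels for the two IP addresses, one sponsored by each FXT node.
fxt_addresses = ["fxt-node-1", "fxt-node-2"]

# Assumption: processes on each load generator alternate between the addresses.
assignment = Counter()
for lg in range(load_generators):
    for proc in range(processes_per_lg):
        target = fxt_addresses[proc % len(fxt_addresses)]
        assignment[target] += 1

total_processes = sum(assignment.values())
print(f"Total processes: {total_processes}")
for address, count in sorted(assignment.items()):
    print(f"{address}: {count} processes")
```

With 6 load generators running 48 processes each, this yields 288 processes in total and 144 per FXT node.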

Other Notes

Config Diagrams

Generated on Fri Oct 09 08:51:55 2009 by SPECsfs2008 HTML Formatter

Copyright © 1997-2008 Standard Performance Evaluation Corporation

First published at SPEC.org on 09-Oct-2009