SPECsfs2008_nfs.v3 Result

|

Isilon Systems

|

:

|

IQ5400S

|

|

SPECsfs2008_nfs.v3

|

=

|

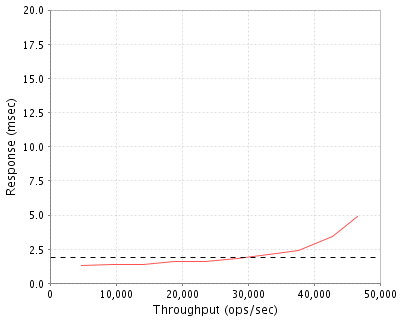

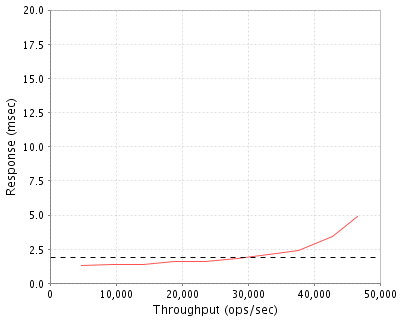

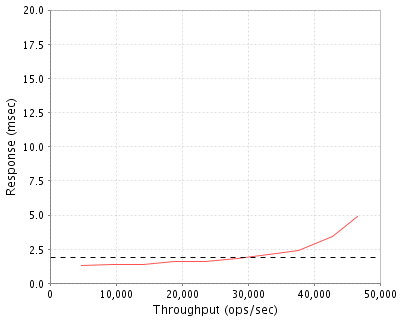

46635 Ops/Sec (Overall Response Time = 1.91 msec)

Performance

| Throughput (ops/sec) | Response (msec) |
|---:|---:|
| 4704 | 1.3 |
| 9415 | 1.4 |
| 14142 | 1.4 |
| 18876 | 1.6 |
| 23595 | 1.6 |
| 28366 | 1.8 |
| 33075 | 2.1 |
| 37678 | 2.4 |
| 42800 | 3.4 |
| 46635 | 4.9 |
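SPECsfs2008 derives the overall response time from the area under the throughput/response-time curve divided by the peak throughput. The Python sketch below recomputes it from the table, assuming linear interpolation between measured points and a curve anchored at the origin (0 ops/sec, 0 msec). Because the table rounds response times to 0.1 msec, the result only approximates the published figure.

```python
# (throughput in ops/sec, response time in msec), copied from the table above.
POINTS = [
    (4704, 1.3), (9415, 1.4), (14142, 1.4), (18876, 1.6), (23595, 1.6),
    (28366, 1.8), (33075, 2.1), (37678, 2.4), (42800, 3.4), (46635, 4.9),
]


def overall_response_time(points):
    """Area under the response-time curve divided by the peak throughput.

    Assumption: the curve starts at (0 ops/sec, 0 msec) and is linear
    between measured points, so the area is a sum of trapezoids.
    """
    area = 0.0
    prev_x, prev_y = 0.0, 0.0  # assumed origin anchor
    for x, y in sorted(points):
        area += (x - prev_x) * (prev_y + y) / 2.0
        prev_x, prev_y = x, y
    peak = max(x for x, _ in points)
    return area / peak


print(f"{overall_response_time(POINTS):.2f} msec")
```

Running it prints `1.91 msec`, which agrees with the headline result to two decimal places.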

Product and Test Information

| Field | Value |
|---|---|
| Tested By | Isilon Systems |
| Product Name | IQ5400S |
| Hardware Available | March 2009 |
| Software Available | March 2009 |
| Date Tested | April 2009 |
| SFS License Number | 2723 |
| Licensee Locations | Seattle, WA, USA |

The Isilon IQ5400S, built on Isilon's proven clustered storage technology, accelerates business and increases speed-to-market by providing ultra-fast primary storage for your mission-critical, high-transaction, random-access file-based applications. The S-Series combines enterprise-class 15,000 RPM Serial Attached SCSI (SAS) drives, quad Gigabit Ethernet (GbE) front-end networking, dual quad-core Intel CPUs, a high-performance InfiniBand back end, and up to 1.5 TB of globally coherent cache. The IQ5400S storage system can scale out from a 3-node cluster to a 96-node cluster in a single filesystem.

Configuration Bill of Materials

| Item No | Qty | Type | Vendor | Model/Name | Description |
|---|---:|---|---|---|---|
| 1 | 10 | Storage Node | Isilon | 851-0076-01 | IQ5400S 5.4TB SAS Storage Node |
| 2 | 1 | Software License | Isilon | ONEFS504 | OneFS 5.0.4 License |
| 3 | 1 | Infiniband Switch | Isilon | 850-0033-02 | 24 Port Infiniband Switch |

Server Software

| Field | Value |
|---|---|
| OS Name and Version | OneFS 5.0.4 |
| Other Software | None |
| Filesystem Software | None |

Server Tuning

| Name | Value | Description |
|---|---|---|
| coalescer | disabled | Disable write coalescing |

Server Tuning Notes

N/A

Disks and Filesystems

| Description | Number of Disks | Usable Size |
|---|---:|---:|
| 450GB SAS 15k RPM Disk Drives | 120 | 46.9 TB |
| Total | 120 | 46.9 TB |

| Field | Value |
|---|---|
| Number of Filesystems | 1 |
| Total Exported Capacity | 48.0 TB |
| Filesystem Type | IFS |
| Filesystem Creation Options | Default |
| Filesystem Config | 9+1 Parity Protected |
| Fileset Size | 5500.8 GB |

Writes are striped across all 10 storage nodes with 9+1 parity protection, which protects against either a single disk failure or a single storage node failure.
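To illustrate why a 9+1 layout survives the loss of any one disk or node, here is a generic single-parity (XOR) sketch in Python: nine data units plus one parity unit form a 10-unit stripe, one unit per storage node, and any single missing unit can be rebuilt from the other nine. This is not OneFS's actual protection encoding, which this report does not describe; the stripe-unit size and function names are hypothetical. The parity unit consumes one tenth of each stripe's raw capacity.

```python
import os
from functools import reduce

DATA_UNITS = 9        # the "9" in 9+1
STRIPE_UNIT = 8192    # hypothetical stripe-unit size in bytes (illustration only)


def xor_bytes(a: bytes, b: bytes) -> bytes:
    """Byte-wise XOR of two equal-length buffers."""
    return bytes(x ^ y for x, y in zip(a, b))


def make_stripe(data_units):
    """Return the data units followed by one XOR parity unit (9+1)."""
    return list(data_units) + [reduce(xor_bytes, data_units)]


def rebuild(stripe, lost_index):
    """Recover the unit at lost_index by XOR-ing every surviving unit."""
    survivors = [u for i, u in enumerate(stripe) if i != lost_index]
    return reduce(xor_bytes, survivors)


data = [os.urandom(STRIPE_UNIT) for _ in range(DATA_UNITS)]
stripe = make_stripe(data)  # 10 units, one per storage node (hypothetical placement)
ok = all(rebuild(stripe, i) == stripe[i] for i in range(len(stripe)))
print(len(stripe), "units; any single loss recoverable:", ok)
```

A second simultaneous loss in the same stripe cannot be recovered with a single parity unit, which is why the protection level is stated as +1.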

Network Configuration

| Item No | Network Type | Number of Ports Used | Notes |
|---|---|---:|---|
| 1 | Jumbo Frame Gigabit Ethernet | 20 | Integrated 10/100/1000 Ethernet controller |

Network Configuration Notes

Two Gigabit Ethernet network interfaces were configured on each storage node. The interfaces were configured to use jumbo frames (MTU of 9000 bytes). All network interfaces were connected to a Force10 E1200 switch, which provided connectivity to the clients.

Benchmark Network

All switch ports were configured to allow jumbo frames (MTU of 9000 bytes).

Processing Elements

| Item No | Qty | Type | Description | Processing Function |
|---|---:|---|---|---|
| 1 | 20 | CPU | Intel L5410, Quad-Core Processor, 2.33 GHz | Network, NFS, Filesystem, Device Drivers |

Processing Element Notes

Each storage node has 2 physical processors, each with 4 processing cores.

Memory

| Description | Size in GB | Number of Instances | Total GB | Nonvolatile |
|---|---:|---:|---:|---|
| Storage Node System Memory | 16 | 10 | 160 | V |
| Storage Node Integrated NVRAM module | 0.5 | 10 | 5 | NV |
| Grand Total Memory Gigabytes | | | 165 | |

Memory Notes

Each storage controller has main memory that is used for the operating system and for caching filesystem data. A separate, integrated battery-backed RAM module is used to provide stable storage for writes that have not yet been written to disk.

Stable Storage

Each storage node is equipped with an NVRAM journal that stores writes destined for the local disks; the NVRAM is protected by 2 batteries. In the event of a double battery failure, the node stops writing to its local disks but continues to write to the remaining storage nodes.

System Under Test Configuration Notes

The system under test consisted of 10 IQ5400S storage nodes, 2U each, connected by DDR InfiniBand. Each storage node provides 4 Gigabit Ethernet interfaces; for the purpose of this test, two of these interfaces were configured and connected to a Gigabit Ethernet switch.

Other System Notes

Test Environment Bill of Materials

| Item No | Qty | Vendor | Model/Name | Description |
|---|---:|---|---|---|
| 1 | 20 | SuperMicro | SuperServer X5DPA | 1U Linux client with 1GB RAM |
| 2 | 1 | Force10 | E1200 | Force10 E1200 Blade Chassis with 4 Gigabit ethernet blades |

Load Generators

| LG Type Name | LG1 |
|---|---|
| BOM Item # | 1 |
| Processor Name | Intel Xeon |
| Processor Speed | 2.4 GHz |
| Number of Processors (chips) | 2 |
| Number of Cores/Chip | 2 |
| Memory Size | 1 GB |
| Operating System | CentOS release 4.5 kernel 2.6.9-55.0.2.ELsmp |
| Network Type | 1x Intel 82541EI Onboard gigabit ethernet |

Load Generator (LG) Configuration

Benchmark Parameters

| Parameter | Value |
|---|---|
| Network Attached Storage Type | NFS V3 |
| Number of Load Generators | 20 |
| Number of Processes per LG | 10 |
| Biod Max Read Setting | 2 |
| Biod Max Write Setting | 2 |
| Block Size | AUTO |

Testbed Configuration

| LG No | LG Type | Network | Target Filesystems | Notes |
|---|---|---|---|---|
| 1..20 | LG1 | 1 | /ifs/data | |

Load Generator Configuration Notes

All clients were connected to a single filesystem through all storage nodes.

Uniform Access Rule Compliance

Each load-generating client hosted 10 processes. The assignment of processes to network interfaces was done such that they were evenly divided across all network paths to the storage controllers. The filesystem data was striped evenly across all disks and storage nodes.
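Round-robin placement is one simple way to meet the even-division requirement. The Python sketch below takes the figures from this report (20 load generators with 10 processes each, and 2 configured interfaces on each of the 10 storage nodes) and spreads the 200 processes so that each of the 20 storage-side network paths serves exactly 10. The path labels and the round-robin policy are hypothetical; the report does not state which mapping was actually used.

```python
from collections import Counter

NUM_CLIENTS = 20        # load generators
PROCS_PER_CLIENT = 10   # benchmark processes per load generator
NODES = 10              # storage nodes
PORTS_PER_NODE = 2      # interfaces configured per node for this test

# Hypothetical labels for the 20 storage-side network paths.
paths = [f"node{n + 1}-if{p}" for n in range(NODES) for p in range(PORTS_PER_NODE)]


def assign(num_clients, procs_per_client, paths):
    """Round-robin each (client, process) pair onto a network path."""
    return {
        (c, p): paths[(c * procs_per_client + p) % len(paths)]
        for c in range(num_clients)
        for p in range(procs_per_client)
    }


mapping = assign(NUM_CLIENTS, PROCS_PER_CLIENT, paths)
per_path = Counter(mapping.values())
print(len(mapping), "processes over", len(paths), "paths:", sorted(set(per_path.values())))
```

Because 200 divides evenly by 20, the sketch prints `200 processes over 20 paths: [10]`; with this global ordering each client's 10 processes land on 10 consecutive paths, so adjacent clients alternate between the two halves of the cluster.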

Other Notes

Config Diagrams

Generated on Tue Jul 14 17:29:33 2009 by SPECsfs2008 HTML Formatter

Copyright © 1997-2008 Standard Performance Evaluation Corporation

First published at SPEC.org on 14-Jul-2009